diff --git a/ch02/01_main-chapter-code/ch02.ipynb b/ch02/01_main-chapter-code/ch02.ipynb

index 902d604..0dfdf3b 100644

--- a/ch02/01_main-chapter-code/ch02.ipynb

+++ b/ch02/01_main-chapter-code/ch02.ipynb

@@ -705,7 +705,7 @@

" - `[BOS]` (beginning of sequence) marks the beginning of text\n",

" - `[EOS]` (end of sequence) marks where the text ends (this is usually used to concatenate multiple unrelated texts, e.g., two different Wikipedia articles or two different books, and so on)\n",

" - `[PAD]` (padding) if we train LLMs with a batch size greater than 1 (we may include multiple texts with different lengths; with the padding token we pad the shorter texts to the longest length so that all texts have an equal length)\n",

- "- `[UNK]` to represent works that are not included in the vocabulary\n",

+ "- `[UNK]` to represent words that are not included in the vocabulary\n",

"\n",

"- Note that GPT-2 does not need any of these tokens mentioned above but only uses an `<|endoftext|>` token to reduce complexity\n",

"- The `<|endoftext|>` is analogous to the `[EOS]` token mentioned above\n",

diff --git a/ch04/01_main-chapter-code/ch04.ipynb b/ch04/01_main-chapter-code/ch04.ipynb

index 6d4a0c3..b734330 100644

--- a/ch04/01_main-chapter-code/ch04.ipynb

+++ b/ch04/01_main-chapter-code/ch04.ipynb

@@ -1180,7 +1180,7 @@

"- In the original GPT-2 paper, the researchers applied weight tying, which means that they reused the token embedding layer (`tok_emb`) as the output layer, which means setting `self.out_head.weight = self.tok_emb.weight`\n",

"- The token embedding layer projects the 50,257-dimensional one-hot encoded input tokens to a 768-dimensional embedding representation\n",

"- The output layer projects 768-dimensional embeddings back into a 50,257-dimensional representation so that we can convert these back into words (more about that in the next section)\n",

- "- So, the embedding and output layer have the same number of weight parameters, as we can see based on the shape of their weight matrices: the next chapter\n",

+ "- So, the embedding and output layer have the same number of weight parameters, as we can see based on the shape of their weight matrices\n",

"- However, a quick note about its size: we previously referred to it as a 124M parameter model; we can double check this number as follows:"

]

},

diff --git a/setup/02_installing-python-libraries/README.md b/setup/02_installing-python-libraries/README.md

index 3eec247..c0df819 100644

--- a/setup/02_installing-python-libraries/README.md

+++ b/setup/02_installing-python-libraries/README.md

@@ -19,7 +19,7 @@ python python_environment_check.py

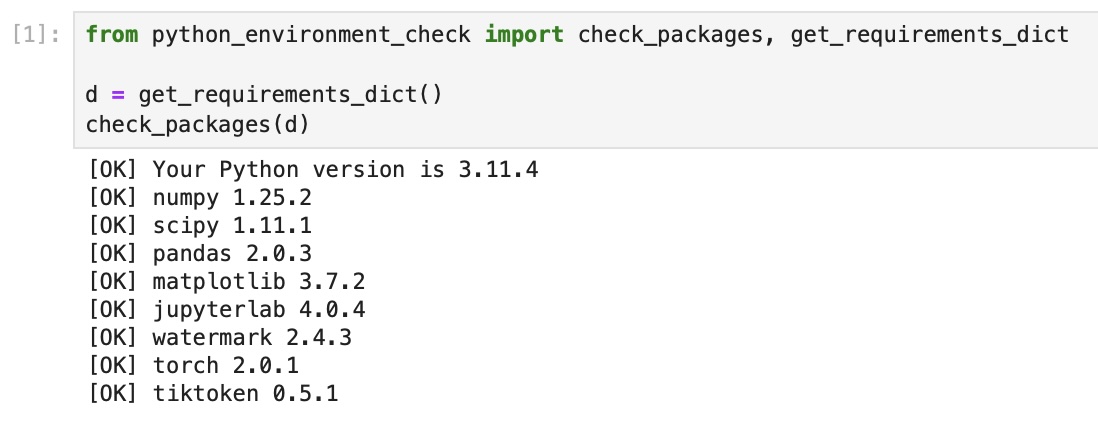

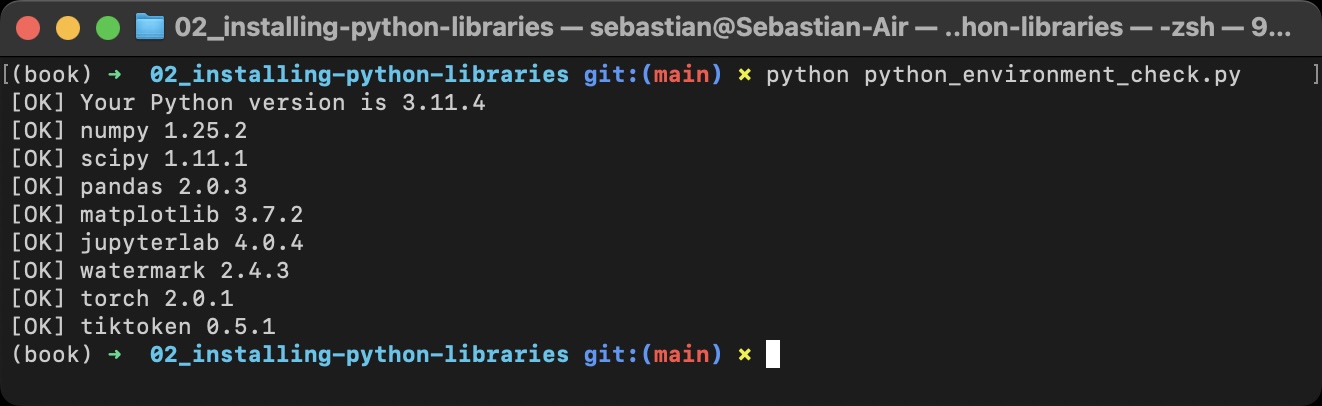

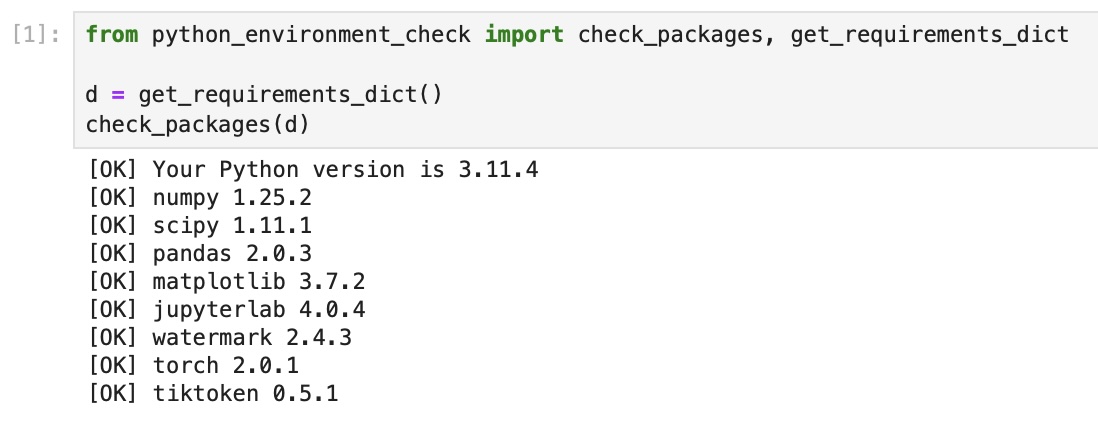

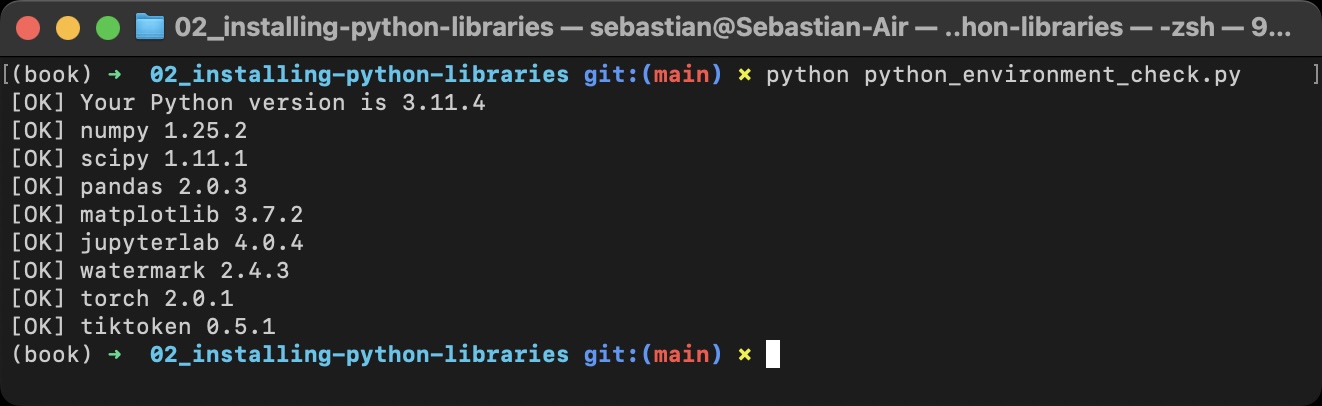

-It's also recommended to check the versions in JupyterLab by running the `jupyter_environment_check.ipynb` in this directory, which should ideally give you the same results as above.

+It's also recommended to check the versions in JupyterLab by running the `python_environment_check.ipynb` in this directory, which should ideally give you the same results as above.
