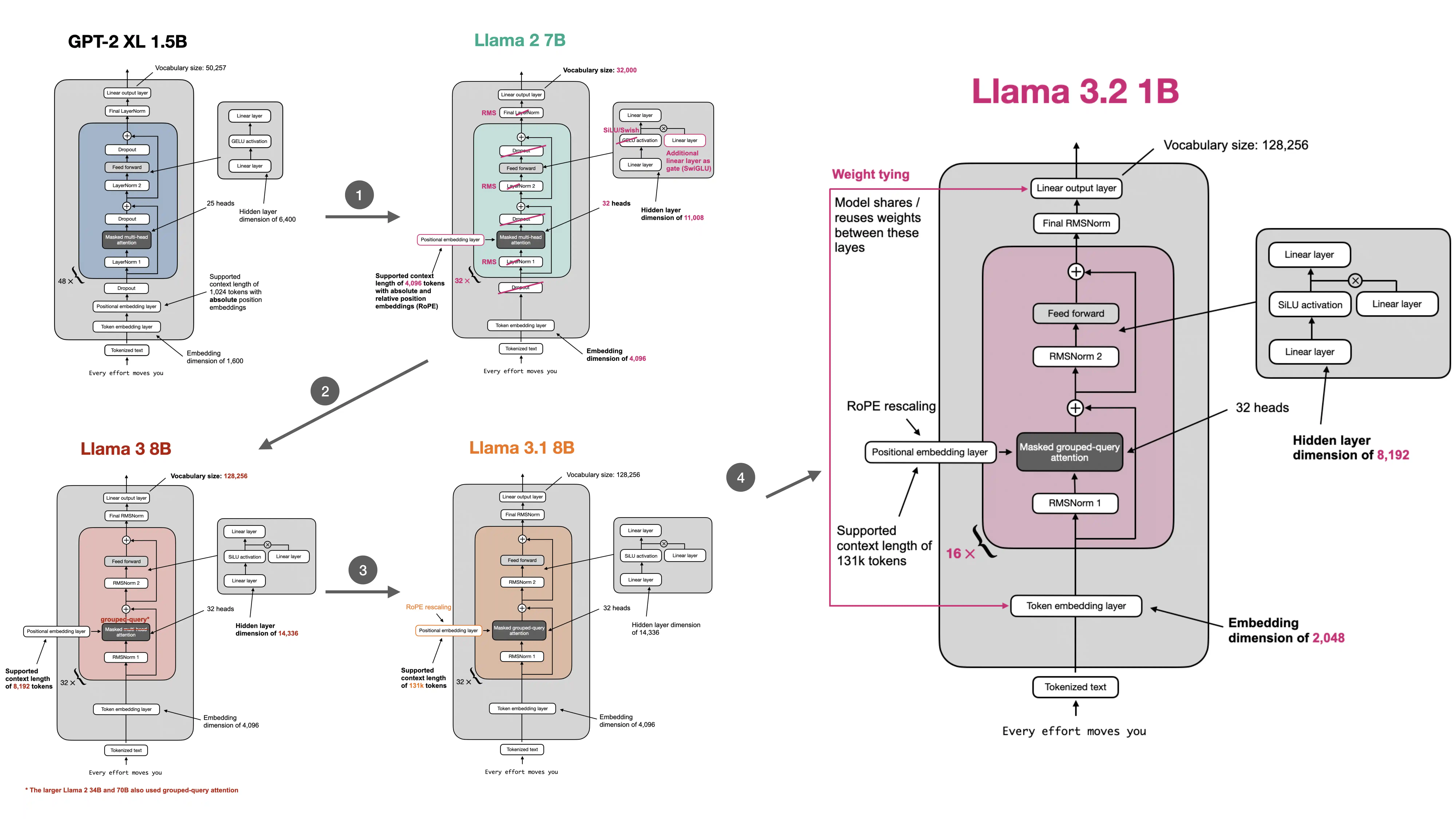

# Converting GPT to Llama

This folder contains code for converting the GPT implementation from chapters 4 and 5 to Meta AI's Llama architecture. The notebooks are listed in the recommended reading order:

- converting-gpt-to-llama2.ipynb: contains code to convert GPT to Llama 2 7B step by step and to load the pretrained weights from Meta AI

- converting-llama2-to-llama3.ipynb: contains code to convert the Llama 2 model to Llama 3, Llama 3.1, and Llama 3.2

- standalone-llama32.ipynb: a standalone notebook implementing Llama 3.2