Mirror of https://github.com/rasbt/LLMs-from-scratch.git (synced 2025-11-16 18:14:40 +00:00)


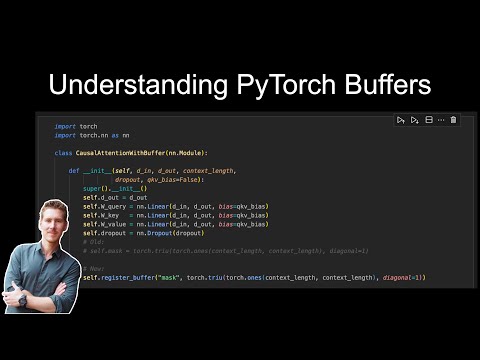

Understanding PyTorch Buffers

- `understanding-buffers.ipynb` explains the idea behind PyTorch buffers, which are used to implement the causal attention mechanism in chapter 3.
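To make the idea concrete, here is a minimal sketch of how a causal mask can be stored as a buffer. It is not the notebook's code: the class name `CausalSelfAttention`, its arguments, and the toy dimensions are assumptions chosen for illustration. It relies on `register_buffer`, which attaches a non-trainable tensor to a module so that it shows up in `state_dict()` and follows the module when it is moved with `.to(device)`.

```python
import torch
import torch.nn as nn


class CausalSelfAttention(nn.Module):
    """Single-head causal self-attention (illustrative sketch, hypothetical names)."""

    def __init__(self, d_in, d_out, context_length):
        super().__init__()
        self.W_query = nn.Linear(d_in, d_out, bias=False)
        self.W_key = nn.Linear(d_in, d_out, bias=False)
        self.W_value = nn.Linear(d_in, d_out, bias=False)
        # True above the diagonal marks the "future" positions to hide.
        mask = torch.triu(
            torch.ones(context_length, context_length), diagonal=1
        ).bool()
        # A buffer is not a parameter: it receives no gradient updates,
        # but it is saved in state_dict() and moved by .to(device).
        self.register_buffer("mask", mask)

    def forward(self, x):
        num_tokens = x.shape[1]
        queries = self.W_query(x)
        keys = self.W_key(x)
        values = self.W_value(x)
        scores = queries @ keys.transpose(1, 2)
        # Hide future tokens before the softmax.
        scores = scores.masked_fill(
            self.mask[:num_tokens, :num_tokens], float("-inf")
        )
        weights = torch.softmax(scores / keys.shape[-1] ** 0.5, dim=-1)
        return weights @ values


torch.manual_seed(123)
attn = CausalSelfAttention(d_in=4, d_out=2, context_length=6)
x = torch.randn(1, 5, 4)  # (batch, tokens, embedding dim)
out = attn(x)

param_names = [name for name, _ in attn.named_parameters()]
buffer_names = [name for name, _ in attn.named_buffers()]
state_keys = list(attn.state_dict().keys())
```

In this sketch, `mask` appears in `buffer_names` and `state_keys` but not in `param_names`. Had the mask been assigned as a plain attribute (`self.mask = mask`), it would stay on its original device when the module is moved, which is the kind of device mismatch that buffers help avoid.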

Below is a hands-on video tutorial I recorded to explain the code: