| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PP-DocLayout_plus-L | Inference Model/Training Model | 83.2 | 53.03 / 17.23 | 634.62 / 378.32 | 126.01 | A higher-precision layout area localization model trained on a self-built dataset containing Chinese and English papers, PPT, multi-layout magazines, contracts, books, exams, ancient books and research reports using RT-DETR-L. |

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PP-DocBlockLayout | Inference Model/Training Model | 95.9 | 34.60 / 28.54 | 506.43 / 256.83 | 123.92 | A layout block localization model trained on a self-built dataset containing Chinese and English papers, PPT, multi-layout magazines, contracts, books, exams, ancient books and research reports using RT-DETR-L. |

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PP-DocLayout-L | Inference Model/Training Model | 90.4 | 33.59 / 33.59 | 503.01 / 251.08 | 123.76 | A high-precision layout area localization model trained on a self-built dataset containing Chinese and English papers, magazines, contracts, books, exams, and research reports using RT-DETR-L. |
| PP-DocLayout-M | Inference Model/Training Model | 75.2 | 13.03 / 4.72 | 43.39 / 24.44 | 22.578 | A layout area localization model with balanced precision and efficiency, trained on a self-built dataset containing Chinese and English papers, magazines, contracts, books, exams, and research reports using PicoDet-L. |
| PP-DocLayout-S | Inference Model/Training Model | 70.9 | 11.54 / 3.86 | 18.53 / 6.29 | 4.834 | A high-efficiency layout area localization model trained on a self-built dataset containing Chinese and English papers, magazines, contracts, books, exams, and research reports using PicoDet-S. |

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PicoDet_layout_1x_table | Inference Model/Training Model | 97.5 | 9.57 / 6.63 | 27.66 / 16.75 | 7.4 | A high-efficiency layout area localization model trained on a self-built dataset using PicoDet-1x, capable of detecting table regions. |

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PicoDet-S_layout_3cls | Inference Model/Training Model | 88.2 | 8.43 / 3.44 | 17.60 / 6.51 | 4.8 | A high-efficiency layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using PicoDet-S. |
| PicoDet-L_layout_3cls | Inference Model/Training Model | 89.0 | 12.80 / 9.57 | 45.04 / 23.86 | 22.6 | A layout area localization model with balanced efficiency and precision, trained on a self-built dataset of Chinese and English papers, magazines, and research reports using PicoDet-L. |
| RT-DETR-H_layout_3cls | Inference Model/Training Model | 95.8 | 114.80 / 25.65 | 924.38 / 924.38 | 470.1 | A high-precision layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using RT-DETR-H. |

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PicoDet_layout_1x | Inference Model/Training Model | 97.8 | 9.62 / 6.75 | 26.96 / 12.77 | 7.4 | A high-efficiency English document layout area localization model trained on the PubLayNet dataset using PicoDet-1x. |

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PicoDet-S_layout_17cls | Inference Model/Training Model | 87.4 | 8.80 / 3.62 | 17.51 / 6.35 | 4.8 | A high-efficiency layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using PicoDet-S. |
| PicoDet-L_layout_17cls | Inference Model/Training Model | 89.0 | 12.60 / 10.27 | 43.70 / 24.42 | 22.6 | A layout area localization model with balanced efficiency and precision, trained on a self-built dataset of Chinese and English papers, magazines, and research reports using PicoDet-L. |
| RT-DETR-H_layout_17cls | Inference Model/Training Model | 98.3 | 115.29 / 101.18 | 964.75 / 964.75 | 470.2 | A high-precision layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using RT-DETR-H. |

| Mode | GPU Configuration | CPU Configuration | Acceleration Technology Combination |
|---|---|---|---|
| Normal Mode | FP32 Precision / No TRT Acceleration | FP32 Precision / 8 Threads | PaddleInference |
| High-Performance Mode | Optimal combination of pre-selected precision types and acceleration strategies | FP32 Precision / 8 Threads | Pre-selected optimal backend (Paddle/OpenVINO/TRT, etc.) |

Bounding box coordinates are given in the format [xmin, ymin, xmax, ymax].
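As a minimal sketch of working with boxes in this format, the helpers below compute the area of a box and the intersection-over-union (IoU) of two boxes. The function names `box_area` and `box_iou` are hypothetical and not part of the library; the code assumes only the `[xmin, ymin, xmax, ymax]` ordering.

```python
def box_area(box):
    """Area of a box given as [xmin, ymin, xmax, ymax]; 0 for degenerate boxes."""
    xmin, ymin, xmax, ymax = box
    return max(0.0, xmax - xmin) * max(0.0, ymax - ymin)


def box_iou(a, b):
    """Intersection-over-union of two [xmin, ymin, xmax, ymax] boxes."""
    # The intersection is itself a box; it is degenerate when a and b do not overlap.
    inter = box_area([max(a[0], b[0]), max(a[1], b[1]),
                      min(a[2], b[2]), min(a[3], b[3])])
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0
```

IoU of this kind is the usual overlap measure behind NMS-style filtering of overlapping boxes, although the library's own post-processing may differ in detail.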

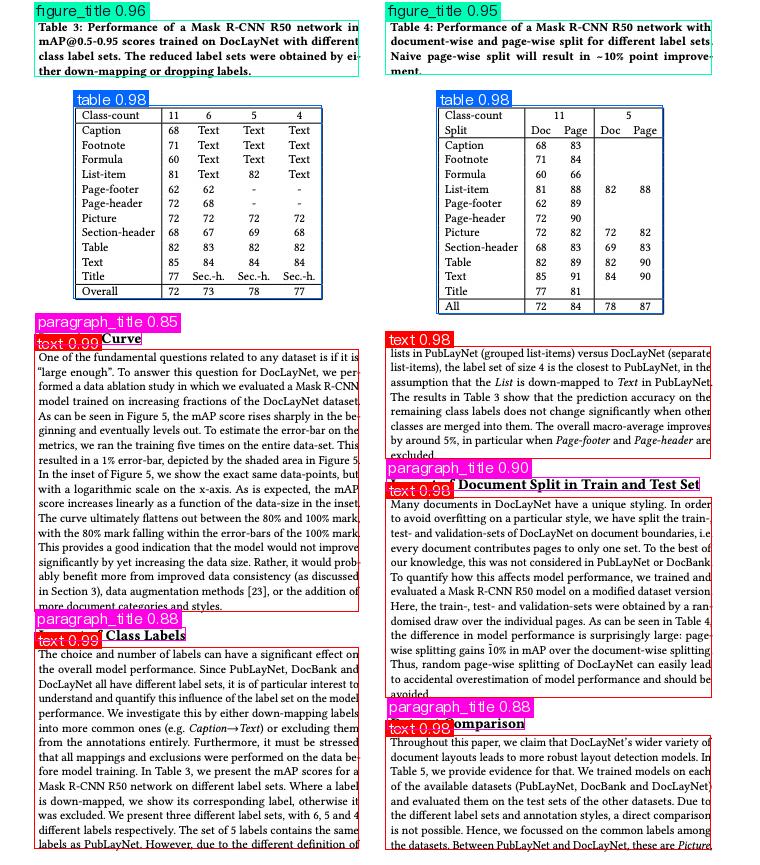

The visualized image is as follows:

Relevant methods, parameters, and explanations are as follows:

* `LayoutDetection` instantiates an object detection model (here, `PP-DocLayout_plus-L` is used as an example). Its parameters are as follows:


| Parameter | Description | Type | Default |
|---|---|---|---|
| `model_name` | Model name. If set to `None`, `PP-DocLayout-L` will be used. | `str\|None` | `None` |
| `model_dir` | Model storage path. | `str\|None` | `None` |
| `device` | Device for inference. For example: `"cpu"`, `"gpu"`, `"npu"`, `"gpu:0"`, `"gpu:0,1"`. If multiple devices are specified, parallel inference will be performed. By default, GPU 0 is used if available; otherwise, CPU is used. | `str\|None` | `None` |
| `enable_hpi` | Whether to enable high-performance inference. | `bool` | `False` |
| `use_tensorrt` | Whether to use the Paddle Inference TensorRT subgraph engine. If the model does not support acceleration through TensorRT, setting this flag will not enable acceleration. For Paddle with CUDA version 11.8, the compatible TensorRT version is 8.x (x>=6), and it is recommended to install TensorRT 8.6.1.6. For Paddle with CUDA version 12.6, the compatible TensorRT version is 10.x (x>=5), and it is recommended to install TensorRT 10.5.0.18. | `bool` | `False` |
| `precision` | Computation precision when using the TensorRT subgraph engine in Paddle Inference. Options: `"fp32"`, `"fp16"`. | `str` | `"fp32"` |
| `enable_mkldnn` | Whether to enable MKL-DNN acceleration for inference. If MKL-DNN is unavailable or the model does not support it, acceleration will not be used even if this flag is set. | `bool` | `True` |
| `mkldnn_cache_capacity` | MKL-DNN cache capacity. | `int` | `10` |
| `cpu_threads` | Number of threads to use for inference on CPUs. | `int` | `10` |
| `img_size` | Input image size. | `int\|list\|None` | `None` |
| `threshold` | Threshold for filtering low-confidence predictions. | `float\|dict\|None` | `None` |
| `layout_nms` | Whether to use NMS post-processing to filter overlapping boxes. | `bool\|None` | `None` |
| `layout_unclip_ratio` | Scaling factor for the side length of the detection box. | `float\|list\|dict\|None` | `None` |
| `layout_merge_bboxes_mode` | Merge mode for model output bounding boxes. | `str\|dict\|None` | `None` |
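To make the intent of `threshold` and `layout_unclip_ratio` concrete, here is a minimal, self-contained sketch of the two post-processing ideas. It is not the library's implementation. The detection keys `cls_id` and `score`, the fallback cutoff of `0.5`, the class-id-keyed form of a `dict` threshold, and the `[width_ratio, height_ratio]` reading of a `list` ratio are all assumptions made for illustration.

```python
def filter_by_threshold(detections, threshold, default=0.5):
    """Keep detections whose score reaches the cutoff.

    threshold may be a float (one cutoff for every class), a dict mapping
    class id -> cutoff (assumed convention), or None (use the hypothetical
    `default` cutoff).
    """
    kept = []
    for det in detections:
        if threshold is None:
            cutoff = default
        elif isinstance(threshold, dict):
            cutoff = threshold.get(det["cls_id"], default)
        else:
            cutoff = float(threshold)
        if det["score"] >= cutoff:
            kept.append(det)
    return kept


def unclip_box(box, ratio):
    """Scale a [xmin, ymin, xmax, ymax] box about its center.

    ratio may be a float (same factor for width and height) or a
    two-element list [width_ratio, height_ratio] (assumed convention).
    """
    if isinstance(ratio, (int, float)):
        rw = rh = float(ratio)
    else:
        rw, rh = ratio
    xmin, ymin, xmax, ymax = box
    cx, cy = (xmin + xmax) / 2, (ymin + ymax) / 2
    half_w, half_h = (xmax - xmin) * rw / 2, (ymax - ymin) * rh / 2
    return [cx - half_w, cy - half_h, cx + half_w, cy + half_h]
```

For example, `unclip_box([0, 0, 10, 20], [2.0, 1.0])` doubles the width around the center and leaves the height unchanged.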

| Parameter | Description | Type | Default |
|---|---|---|---|
| `input` | Input data to be predicted. Required. Supports multiple input types. | `Python Var\|str\|list` | |
| `batch_size` | Batch size, positive integer. | `int` | `1` |
| `threshold` | Same meaning as the instantiation parameters. If set to `None`, the instantiation value is used; otherwise, this parameter takes precedence. | `float\|dict\|None` | `None` |
| `layout_nms` | Same meaning as the instantiation parameters. If set to `None`, the instantiation value is used; otherwise, this parameter takes precedence. | `bool\|None` | `None` |
| `layout_unclip_ratio` | Same meaning as the instantiation parameters. If set to `None`, the instantiation value is used; otherwise, this parameter takes precedence. | `float\|list\|dict\|None` | `None` |
| `layout_merge_bboxes_mode` | Same meaning as the instantiation parameters. If set to `None`, the instantiation value is used; otherwise, this parameter takes precedence. | `str\|dict\|None` | `None` |
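The precedence rule in the table above can be sketched in a few lines. The helper name `resolve_param` is hypothetical and the library's internal logic may be organized differently; the point is that the check is against `None` specifically, so an explicit `False` or `0` passed at call time still overrides the instantiation value.

```python
def resolve_param(call_value, init_value):
    """Return the per-call value unless it is None, else the instantiation value."""
    return init_value if call_value is None else call_value


# A model instantiated with layout_nms=True, then called with different overrides.
init_layout_nms = True
no_override = resolve_param(None, init_layout_nms)    # falls back to True
explicit_off = resolve_param(False, init_layout_nms)  # False takes precedence
```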

| Method | Method Description | Parameter | Parameter Type | Parameter Description | Default Value |
|---|---|---|---|---|---|
| `print()` | Print the result to the terminal | `format_json` | `bool` | Whether to format the output as indented JSON | `True` |
| | | `indent` | `int` | Indentation level used to make the JSON output more readable. Only valid when `format_json` is `True` | `4` |
| | | `ensure_ascii` | `bool` | Whether to escape non-ASCII characters as Unicode escape sequences. When set to `True`, all non-ASCII characters are escaped; `False` preserves the original characters. Only valid when `format_json` is `True` | `False` |
| `save_to_json()` | Save the result as a JSON file | `save_path` | `str` | Path of the saved file. When it is a directory, the saved file name is consistent with the input file name | `None` |
| | | `indent` | `int` | Indentation level used to make the JSON output more readable. Only valid when `format_json` is `True` | `4` |
| | | `ensure_ascii` | `bool` | Whether to escape non-ASCII characters as Unicode escape sequences. When set to `True`, all non-ASCII characters are escaped; `False` preserves the original characters. Only valid when `format_json` is `True` | `False` |
| `save_to_img()` | Save the result as an image file | `save_path` | `str` | Path of the saved file. When it is a directory, the saved file name is consistent with the input file name | `None` |
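The effect of `indent` and `ensure_ascii` can be illustrated with Python's standard `json` module, whose `json.dumps` accepts arguments of the same names. This sketch assumes the result methods behave analogously; the `result` dictionary and its keys are made up for illustration and are not actual model output.

```python
import json

# Hypothetical result fragment containing non-ASCII text.
result = {"label": "表格", "score": 0.98}

# Single-line output, original characters preserved.
compact = json.dumps(result, ensure_ascii=False)

# Indented with 4 spaces, original characters preserved.
pretty = json.dumps(result, indent=4, ensure_ascii=False)

# Indented, with non-ASCII characters escaped as \uXXXX sequences.
escaped = json.dumps(result, indent=4, ensure_ascii=True)

print(pretty)
print(escaped)
```

With `ensure_ascii=False` the output stays readable for Chinese labels, which is likely why `False` is the documented default.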

| Attribute | Description |
|---|---|
| `json` | Get the prediction result in JSON format |
| `img` | Get the visualized image in dict format |