| Model | Model Download Link | mAP (%) | GPU Inference Time (ms) [Regular Mode / High-Performance Mode] | CPU Inference Time (ms) [Regular Mode / High-Performance Mode] | Model Storage Size (M) | Description |
|---|---|---|---|---|---|---|
| RT-DETR-L_wired_table_cell_det | Inference Model/Training Model | 82.7 | 35.00 / 10.45 | 495.51 / 495.51 | 124M | RT-DETR is a real-time end-to-end object detection model. Using RT-DETR-L as the base model, the Baidu PaddlePaddle Vision team pre-trained it on a self-built table cell detection dataset, achieving good performance in detecting both wired and wireless table cells. |
| RT-DETR-L_wireless_table_cell_det | Inference Model/Training Model | | | | | |

| Mode | GPU Configuration | CPU Configuration | Acceleration Technology Combination |
|---|---|---|---|
| Regular Mode | FP32 precision / no TensorRT acceleration | FP32 precision / 8 threads | Paddle Inference |
| High-Performance Mode | Optimal combination of pre-selected precision types and acceleration strategies | FP32 precision / 8 threads | Optimal pre-selected backend (Paddle/OpenVINO/TRT, etc.) |

Detection box coordinates are given in the format `[xmin, ymin, xmax, ymax]`.
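Assuming pixel coordinates with the origin at the image's top-left corner (a common convention, not stated in this section), `(xmin, ymin)` in the `[xmin, ymin, xmax, ymax]` format is a box's top-left corner and `(xmax, ymax)` its bottom-right corner. The hypothetical helper `box_geometry` below is a minimal sketch that derives a cell's width, height, area, and center from that format.

```python
from typing import Sequence, Tuple


def box_geometry(box: Sequence[float]) -> Tuple[float, float, float, Tuple[float, float]]:
    """Return (width, height, area, center) of a box given as [xmin, ymin, xmax, ymax].

    Hypothetical helper; assumes image pixel coordinates with the origin at the
    top-left corner, so (xmin, ymin) is the top-left corner of the box.
    """
    xmin, ymin, xmax, ymax = box
    if xmax < xmin or ymax < ymin:
        raise ValueError(f"expected xmin <= xmax and ymin <= ymax, got {list(box)}")
    width = xmax - xmin
    height = ymax - ymin
    center = ((xmin + xmax) / 2, (ymin + ymax) / 2)
    return width, height, width * height, center


if __name__ == "__main__":
    # A 100 x 50 cell whose top-left corner is at (10, 20)
    print(box_geometry([10.0, 20.0, 110.0, 70.0]))  # -> (100.0, 50.0, 5000.0, (60.0, 45.0))
```

Rejecting boxes with `xmax < xmin` or `ymax < ymin` makes a swapped-coordinate bug surface immediately instead of producing a negative area.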

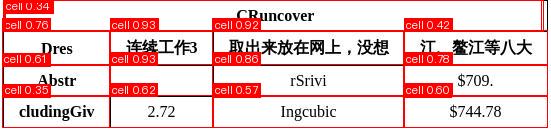

The visualized image is as follows:

The relevant methods and parameters are described below:

* `TableCellsDetection` instantiates the table cell detection model (using `RT-DETR-L_wired_table_cell_det` as an example). Its parameters are described as follows:


| Parameter | Description | Type | Default |
|---|---|---|---|
| `model_name` | Model name | `str` | `RT-DETR-L_wired_table_cell_det` |
| `model_dir` | Model storage path | `str` | `None` |
| `device` | Device(s) used for inference, e.g. `cpu`, `gpu`, `npu`, `gpu:0`, `gpu:0,1`. If multiple devices are specified, inference runs on them in parallel; note that parallel inference is not supported in every case. By default, GPU 0 is used if available; otherwise, the CPU is used. | `str` | `None` |
| `enable_hpi` | Whether to enable high-performance inference. | `bool` | `False` |
| `use_tensorrt` | Whether to use the Paddle Inference TensorRT subgraph engine. For Paddle built with CUDA 11.8, the compatible TensorRT version is 8.x (x>=6); TensorRT 8.6.1.6 is recommended. For Paddle built with CUDA 12.6, the compatible TensorRT version is 10.x (x>=5); TensorRT 10.5.0.18 is recommended. | `bool` | `False` |
| `min_subgraph_size` | Minimum subgraph size for TensorRT when the Paddle Inference TensorRT subgraph engine is used. | `int` | `3` |
| `precision` | Precision for TensorRT when the Paddle Inference TensorRT subgraph engine is used. Options: `fp32`, `fp16`, etc. | `str` | `fp32` |
| `enable_mkldnn` | Whether to enable MKL-DNN acceleration for inference. If MKL-DNN is unavailable or the model does not support it, acceleration is not applied even when this flag is set. | `bool` | `True` |
| `cpu_threads` | Number of threads used for inference on CPUs. | `int` | `10` |
| `img_size` | Input image size. If not specified, the default configuration of the official PaddleOCR model is used. | `int`/`list`/`None` | `None` |
| `threshold` | Threshold for filtering out low-confidence predictions. For table cell detection, lowering the threshold appropriately may help obtain more accurate results. | `float`/`dict`/`None` | `None` |
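The table above says `threshold` accepts a `float`, a `dict`, or `None`, but does not spell out the `dict` semantics. The sketch below assumes a dict maps class IDs to per-class thresholds and that `None` falls back to a configured default; the helper `filter_by_threshold`, the tuple layout of a detection, and the `0.5` fallback are all hypothetical choices for illustration, not PaddleOCR's implementation.

```python
from typing import Dict, List, Tuple, Union

# Hypothetical raw detection layout: (class_id, score, [xmin, ymin, xmax, ymax])
Detection = Tuple[int, float, List[float]]


def filter_by_threshold(
    detections: List[Detection],
    threshold: Union[float, Dict[int, float], None],
    default: float = 0.5,
) -> List[Detection]:
    """Keep detections whose score is at least the applicable threshold.

    Assumptions (illustrative, not PaddleOCR's implementation):
      * float -> one threshold shared by every class
      * dict  -> per-class thresholds keyed by class ID; unlisted classes use `default`
      * None  -> fall back to `default`
    """
    kept: List[Detection] = []
    for class_id, score, box in detections:
        if isinstance(threshold, dict):
            limit = threshold.get(class_id, default)
        elif threshold is None:
            limit = default
        else:
            limit = float(threshold)
        if score >= limit:
            kept.append((class_id, score, box))
    return kept


if __name__ == "__main__":
    dets = [(0, 0.9, [0, 0, 10, 10]), (0, 0.35, [10, 0, 20, 10]), (1, 0.45, [0, 10, 10, 20])]
    print(len(filter_by_threshold(dets, 0.4)))  # 2: the 0.35 cell is dropped
    print(len(filter_by_threshold(dets, 0.3)))  # 3: a lower threshold keeps it
```

Lowering the threshold from 0.4 to 0.3 recovers the faint 0.35 cell, which is the kind of gain the table hints at; the trade-off is that a lower threshold also admits more false positives.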

* The `predict()` method of the model runs inference. Its parameters are described as follows:

| Parameter | Description | Type | Default |
|---|---|---|---|
| `input` | Input data to be predicted. Required. Multiple input types are supported. | `Python Var`/`str`/`list` | |
| `batch_size` | Batch size; must be a positive integer. | `int` | `1` |
| `threshold` | Threshold for filtering out low-confidence prediction results. | `float`/`dict`/`None` | `None` |
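Combining the instantiation and prediction parameters with the result methods documented in this section, a minimal end-to-end sketch might look as follows. It assumes PaddleOCR 3.x is installed and exports `TableCellsDetection` from the `paddleocr` package; `detect_table_cells` is a hypothetical wrapper, and the output directory and `threshold=0.3` are placeholder values. The import sits inside the function so the snippet can be loaded even where PaddleOCR is not installed.

```python
def detect_table_cells(image_path: str, output_dir: str = "./output/") -> None:
    """Detect table cells in one image, then print and save each result.

    Assumes PaddleOCR 3.x is installed and exports TableCellsDetection; the import
    is deferred so this function can be defined without PaddleOCR present.
    """
    from paddleocr import TableCellsDetection  # assumed import path

    # Instantiation parameters from the first table; the rest keep their defaults
    model = TableCellsDetection(model_name="RT-DETR-L_wired_table_cell_det")
    # predict() parameters from the second table; 0.3 is an illustrative threshold
    results = model.predict(image_path, threshold=0.3, batch_size=1)
    for res in results:
        res.print()                              # JSON-formatted output in the terminal
        res.save_to_img(save_path=output_dir)    # visualized detection boxes
        res.save_to_json(save_path=output_dir)   # prediction result as a JSON file
```

Calling `detect_table_cells("table.jpg")` with a real table image (the file name is a placeholder) would print each result and write the visualization and JSON file into `./output/`.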

* Each prediction result provides the following methods:

| Method | Description | Parameter | Type | Parameter Description | Default Value |
|---|---|---|---|---|---|
| `print()` | Print the result to the terminal | `format_json` | `bool` | Whether to format the output with JSON indentation | `True` |
| | | `indent` | `int` | Indentation level used to beautify the output JSON and make it more readable; effective only when `format_json` is `True` | `4` |
| | | `ensure_ascii` | `bool` | Whether to escape non-ASCII characters as Unicode escape sequences. When `True`, all non-ASCII characters are escaped; when `False`, the original characters are kept. Effective only when `format_json` is `True` | `False` |
| `save_to_json()` | Save the result as a JSON file | `save_path` | `str` | Path of the file to save. When a directory is specified, the saved file is named consistently with the input file | `None` |
| | | `indent` | `int` | Indentation level used to beautify the output JSON and make it more readable | `4` |
| | | `ensure_ascii` | `bool` | Whether to escape non-ASCII characters as Unicode escape sequences. When `True`, all non-ASCII characters are escaped; when `False`, the original characters are kept | `False` |
| `save_to_img()` | Save the result as an image file | `save_path` | `str` | Path of the file to save. When a directory is specified, the saved file is named consistently with the input file | `None` |
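The `indent` and `ensure_ascii` options share their names and described effects with the parameters of Python's standard `json.dumps`. As a mental model only (an assumption, not a description of PaddleOCR internals), the hypothetical `render_result` below shows how these options change the printed text; its `str()` fallback for `format_json=False` is likewise an assumption.

```python
import json
from typing import Any, Dict


def render_result(
    result: Dict[str, Any],
    format_json: bool = True,
    indent: int = 4,
    ensure_ascii: bool = False,
) -> str:
    """Illustrate how the print() options could shape the printed text.

    Mental model only: assumes the options behave like the json.dumps parameters
    of the same names; the str() fallback for format_json=False is an assumption.
    """
    if not format_json:
        return str(result)
    # indent controls nesting whitespace; ensure_ascii=True escapes non-ASCII as \uXXXX
    return json.dumps(result, indent=indent, ensure_ascii=ensure_ascii)


if __name__ == "__main__":
    sample = {"label": "cell", "score": 0.98}
    print(render_result(sample))            # 4-space indented JSON
    print(render_result(sample, indent=2))  # 2-space indented JSON
    # Two CJK characters, printed as escape sequences because ensure_ascii=True
    print(render_result({"note": "\u8868\u683c"}, ensure_ascii=True))
```

Keeping `ensure_ascii=False` (the default in the table) is what lets non-English labels or file names stay readable in the printed and saved JSON.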

* Each prediction result exposes the following attributes:

| Attribute | Description |
|---|---|
| `json` | Get the prediction result in JSON format |
| `img` | Get the visualized image |
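This section does not document the layout of the `json` attribute. The sketch below assumes, purely for illustration, a dict of the form `{"res": {"boxes": [{"coordinate": [...]}, ...]}}`; inspect the actual output of `res.json` (or a file written by `save_to_json()`) and adjust the keys before relying on it. `extract_cell_boxes` is a hypothetical helper.

```python
from typing import Any, Dict, List


def extract_cell_boxes(result_json: Dict[str, Any]) -> List[List[float]]:
    """Collect [xmin, ymin, xmax, ymax] boxes from a prediction result dict.

    The layout {"res": {"boxes": [{"coordinate": [...]}, ...]}} is a hypothetical
    assumption for illustration; check your own res.json output and adjust the keys.
    """
    boxes = result_json.get("res", {}).get("boxes", [])
    return [list(box["coordinate"]) for box in boxes]


if __name__ == "__main__":
    # Hand-written sample in the assumed layout (not real model output)
    sample = {
        "res": {
            "input_path": "table.jpg",
            "boxes": [
                {"label": "cell", "score": 0.98, "coordinate": [10.0, 20.0, 110.0, 70.0]},
                {"label": "cell", "score": 0.95, "coordinate": [110.0, 20.0, 210.0, 70.0]},
            ],
        }
    }
    print(len(extract_cell_boxes(sample)))  # 2
```

Using `.get()` with empty defaults means a result with no detected cells (or an unexpected layout) yields an empty list rather than a `KeyError`.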