diff --git a/docs-website/sidebars.js b/docs-website/sidebars.js

index 57faeec74c..a565dafcdd 100644

--- a/docs-website/sidebars.js

+++ b/docs-website/sidebars.js

@@ -105,6 +105,17 @@ module.exports = {

// "docs/wip/guide-enrich-your-metadata",

],

},

+ {

+ "Ingestion Quickstart Guides": [

+ {

+ BigQuery: [

+ "docs/quick-ingestion-guides/bigquery/overview",

+ "docs/quick-ingestion-guides/bigquery/setup",

+ "docs/quick-ingestion-guides/bigquery/configuration",

+ ],

+ },

+ ],

+ },

],

"Ingest Metadata": [

// The purpose of this section is to provide a deeper understanding of how ingestion works.

diff --git a/docs/quick-ingestion-guides/bigquery/configuration.md b/docs/quick-ingestion-guides/bigquery/configuration.md

new file mode 100644

index 0000000000..ed43f10ddf

--- /dev/null

+++ b/docs/quick-ingestion-guides/bigquery/configuration.md

@@ -0,0 +1,150 @@

+---

+title: Configuration

+---

+# Configuring Your BigQuery Connector to DataHub

+

+Now that you have created a Service Account and Service Account Key in BigQuery in [the prior step](setup.md), it's time to set up a connection via the DataHub UI.

+

+## Configure Secrets

+

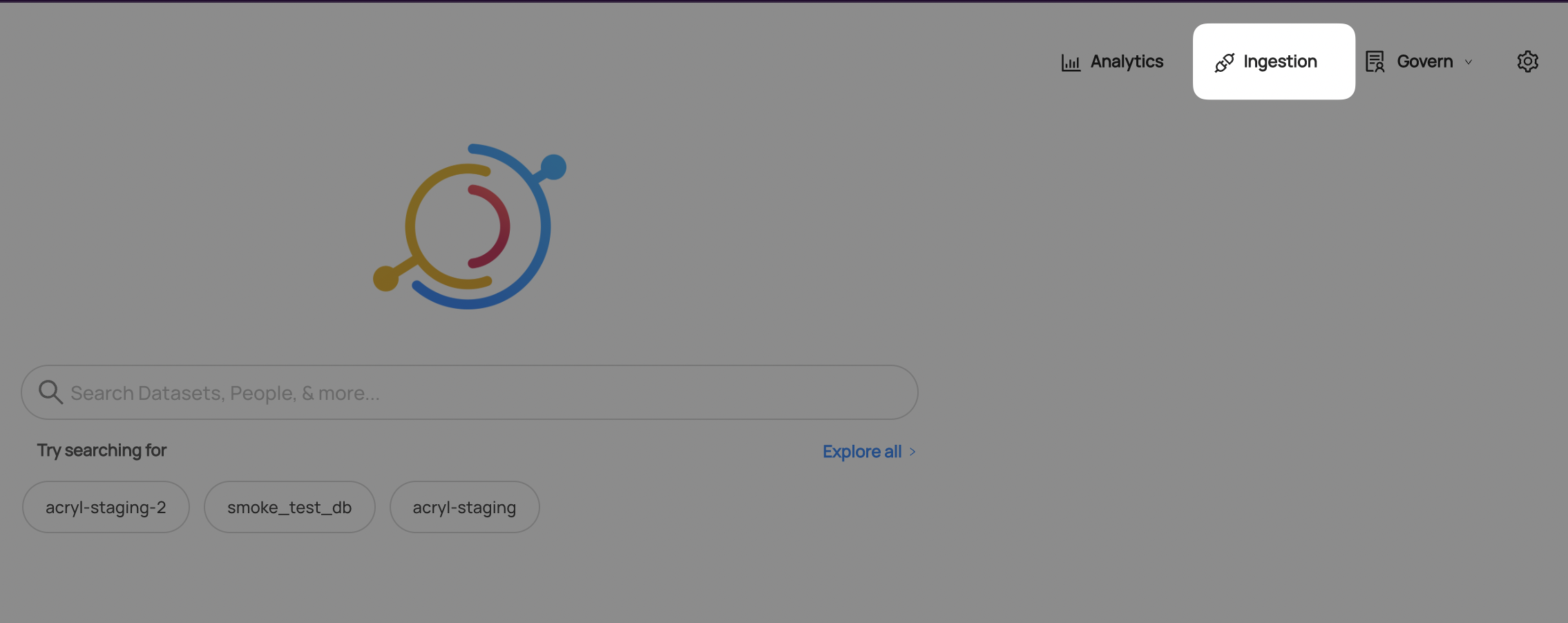

+1. Within DataHub, navigate to the **Ingestion** tab in the top-right corner of your screen

+

+


+

+

+:::note

+If you do not see the Ingestion tab, please contact your DataHub admin to grant you the correct permissions

+:::

+

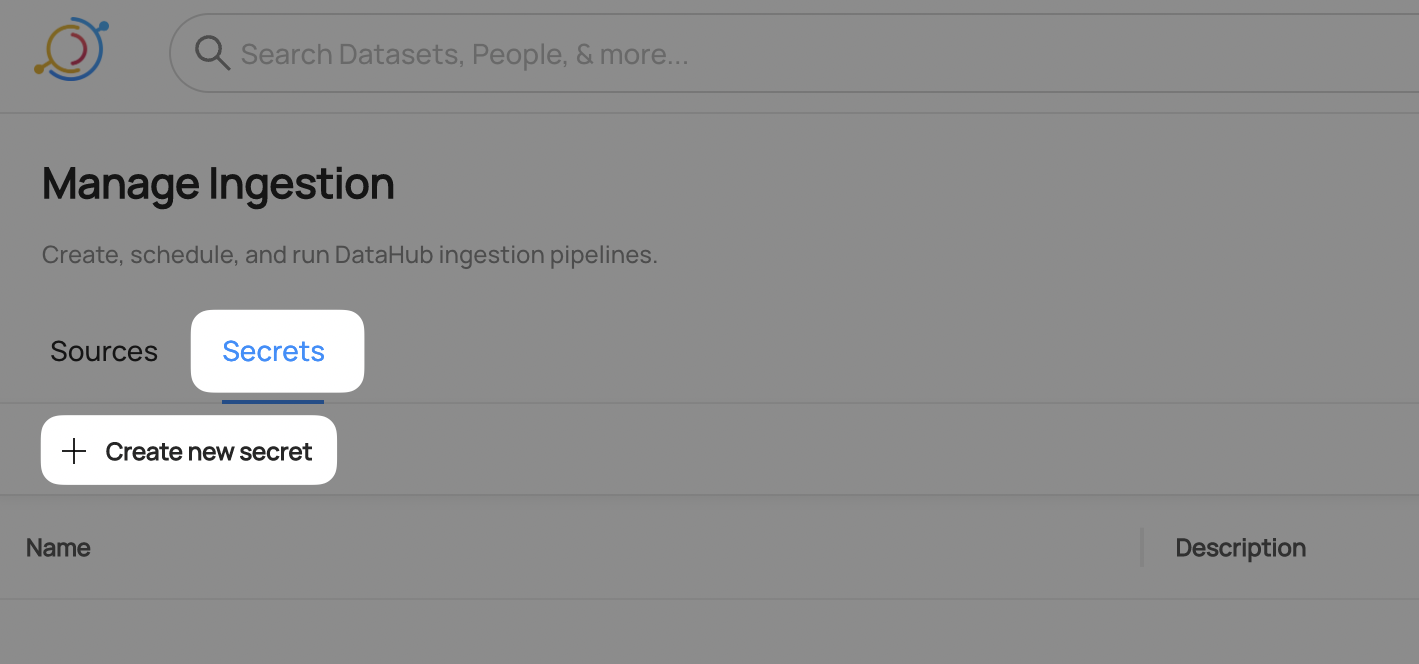

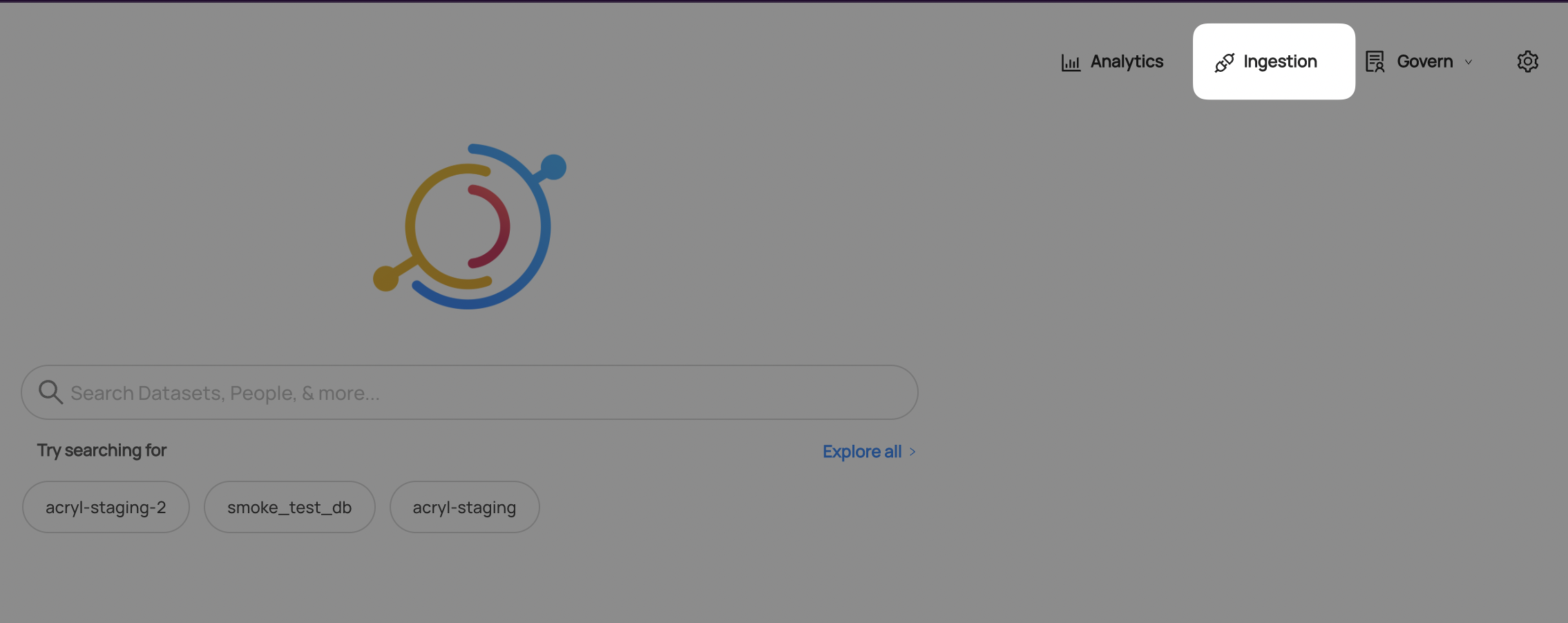

+2. Navigate to the **Secrets** tab and click **Create new secret**

+

+


+

+

+

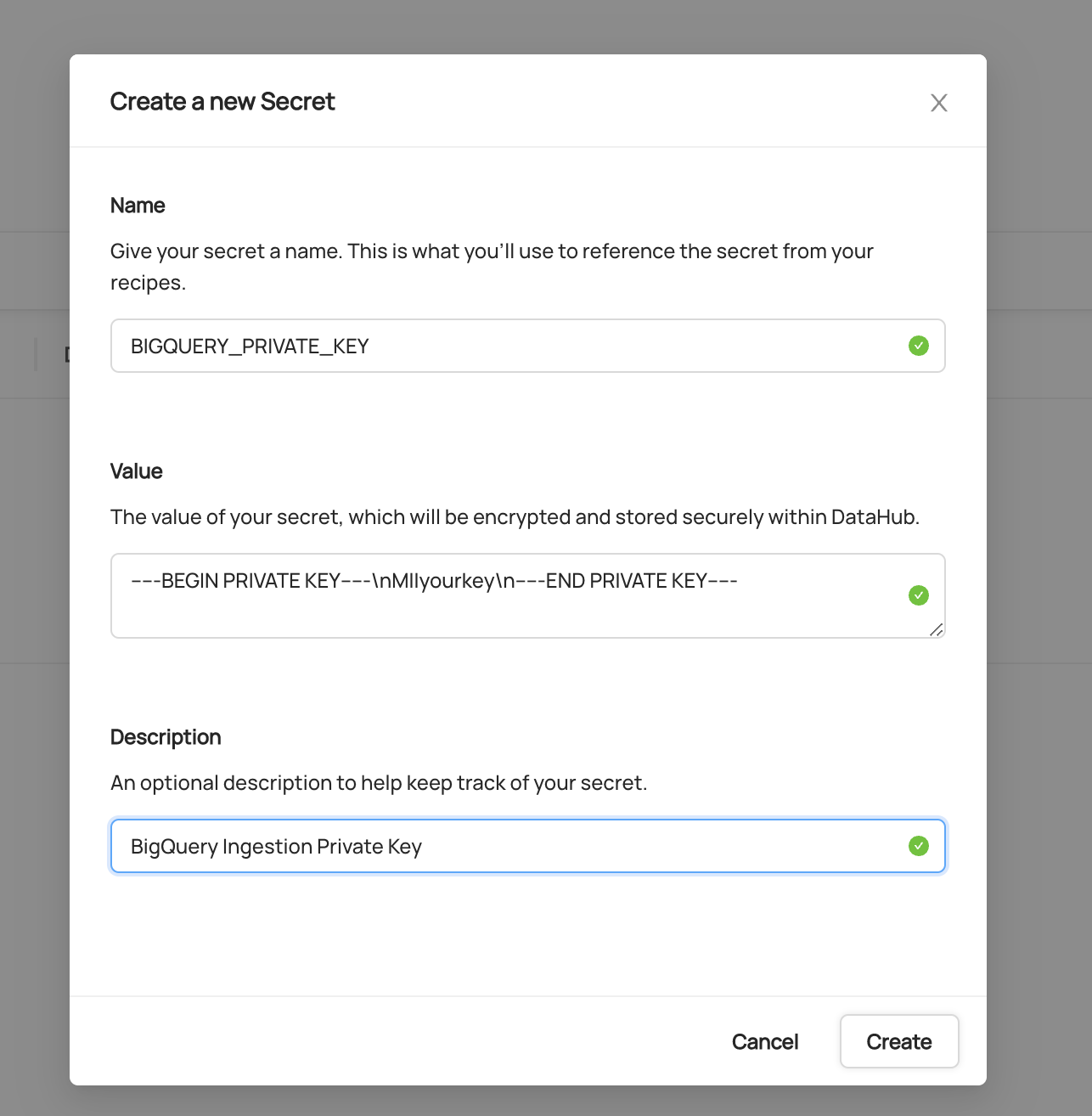

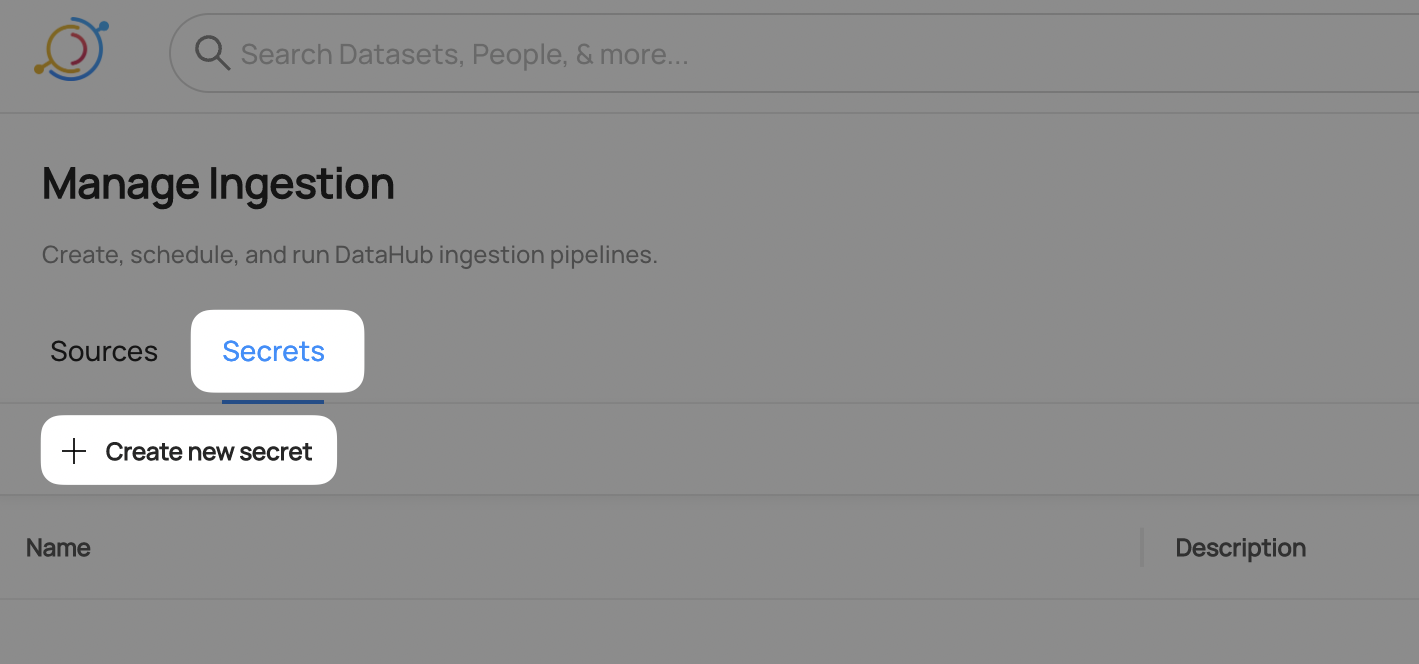

+3. Create a Private Key secret

+

+This will securely store your BigQuery Service Account Private Key within DataHub

+

+ * Enter a name like `BIGQUERY_PRIVATE_KEY` - we will use this later to refer to the secret

+ * Copy and paste the `private_key` value from your Service Account Key

+ * Optionally add a description

+ * Click **Create**

+

+


+

+

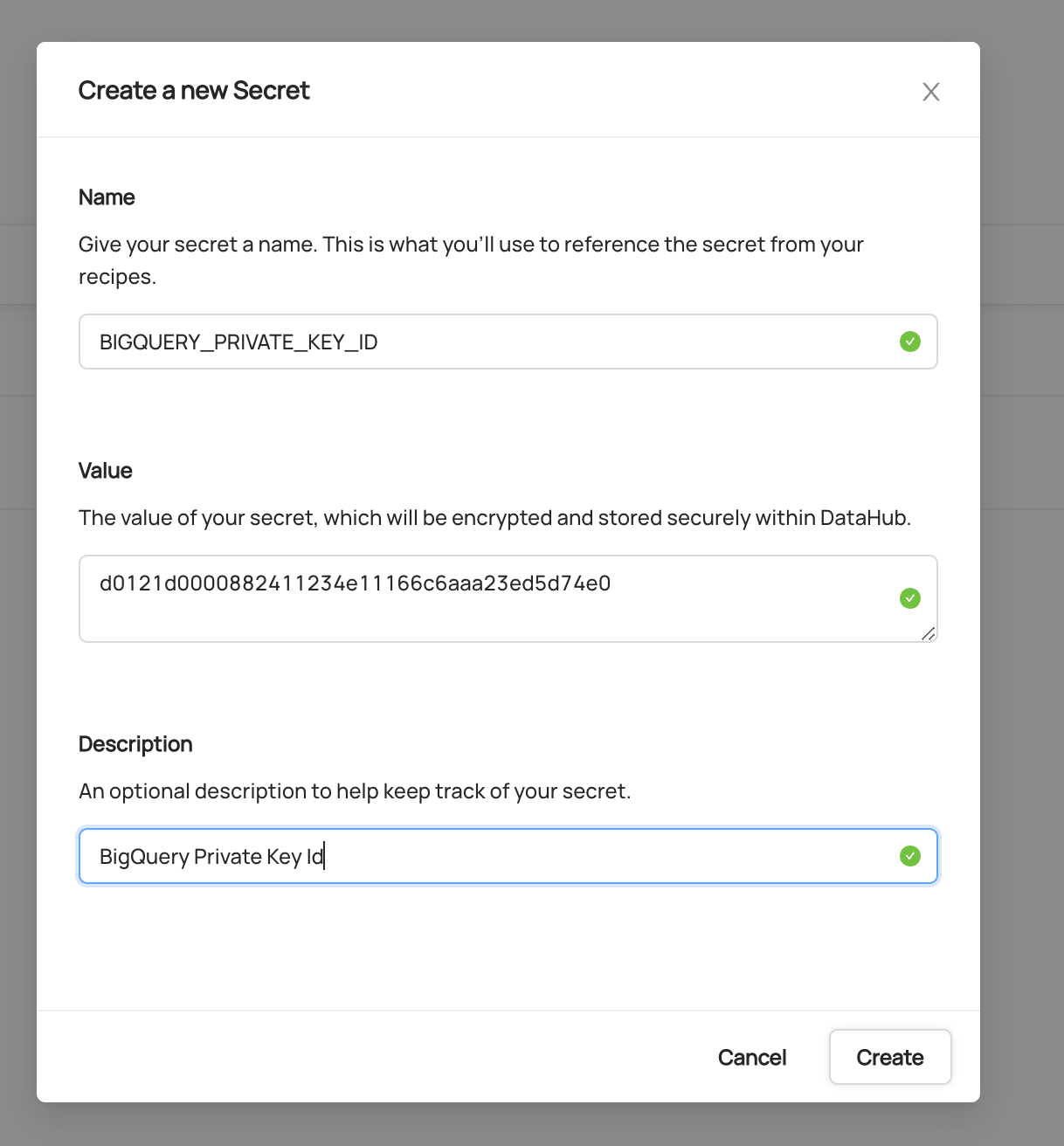

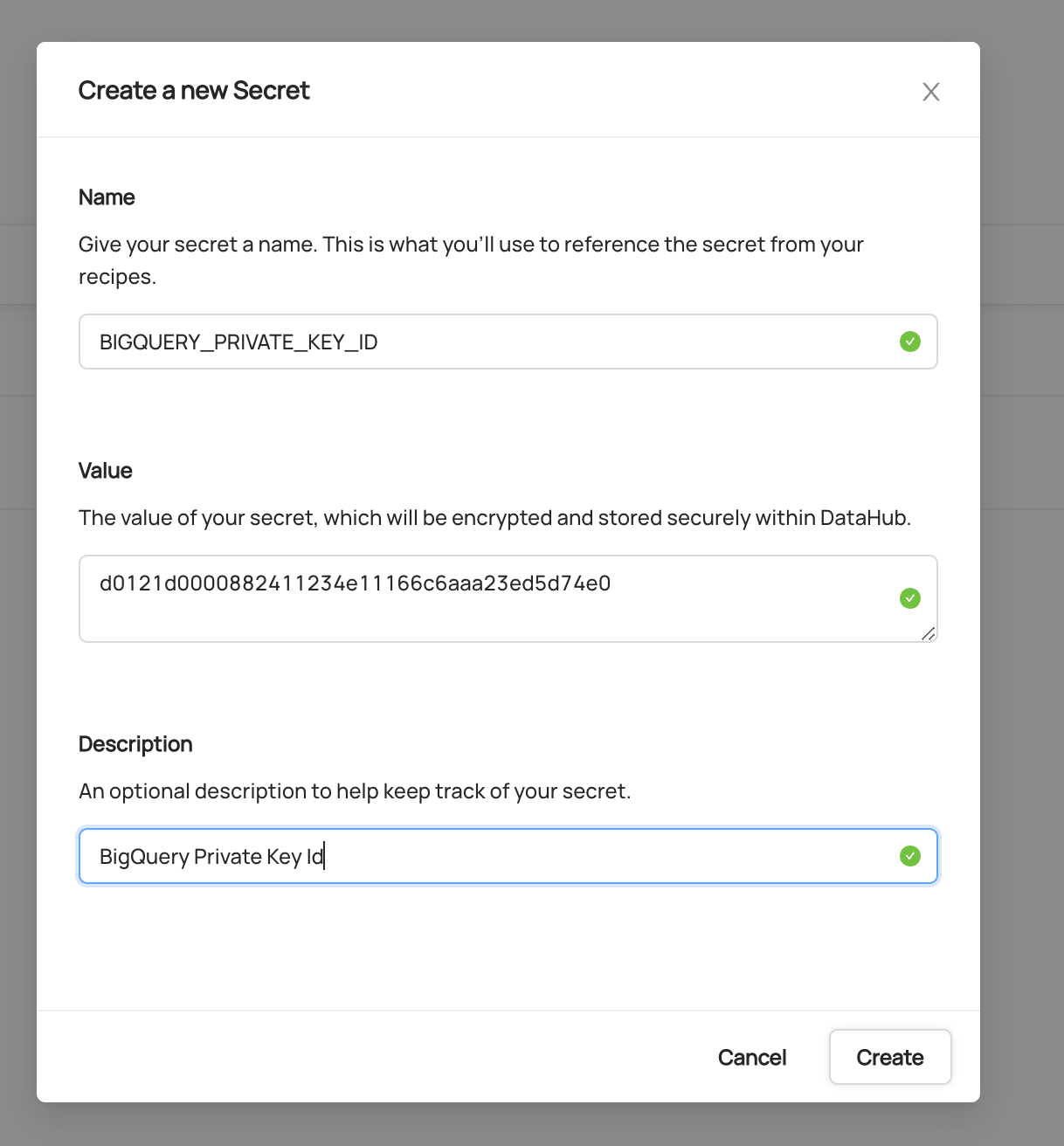

+4. Create a Private Key ID secret

+

+This will securely store your BigQuery Service Account Private Key ID within DataHub

+

+ * Click **Create new secret** again

+ * Enter a name like `BIGQUERY_PRIVATE_KEY_ID` - we will use this later to refer to the secret

+ * Copy and paste the `private_key_id` value from your Service Account Key

+ * Optionally add a description

+ * Click **Create**

+

+


+

+

+## Configure Recipe

+

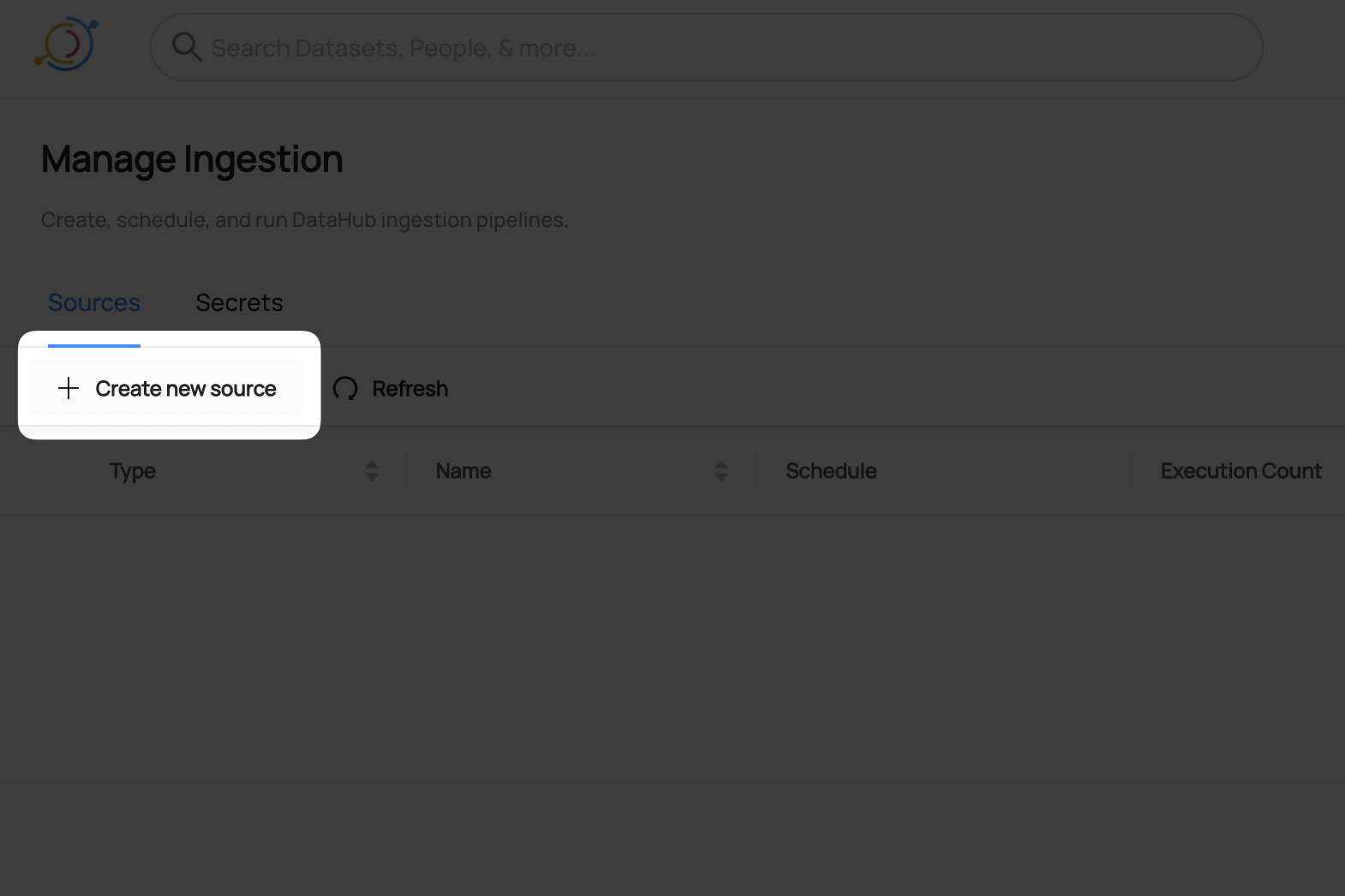

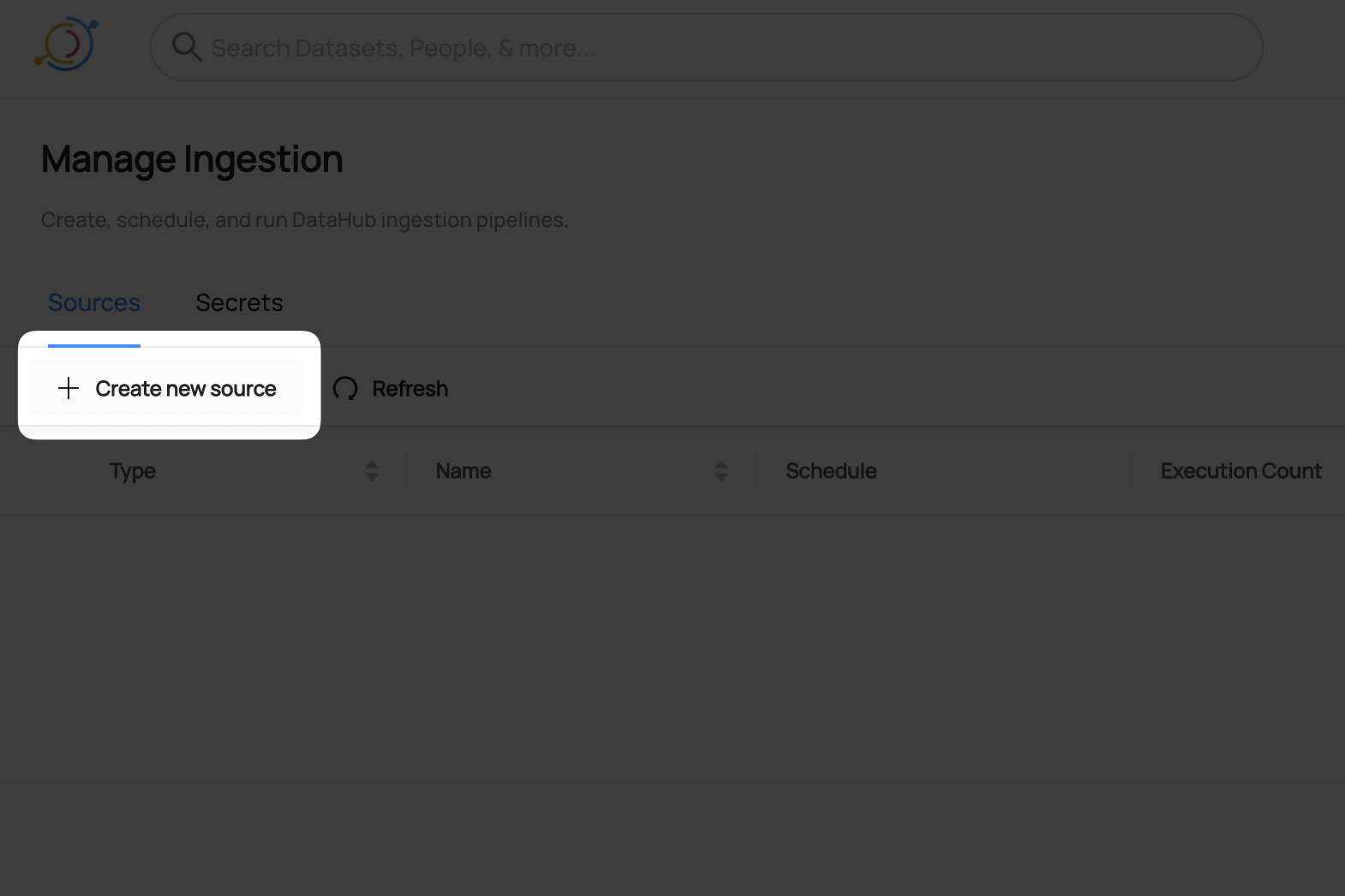

+5. Navigate to the **Sources** tab and click **Create new source**

+

+


+

+

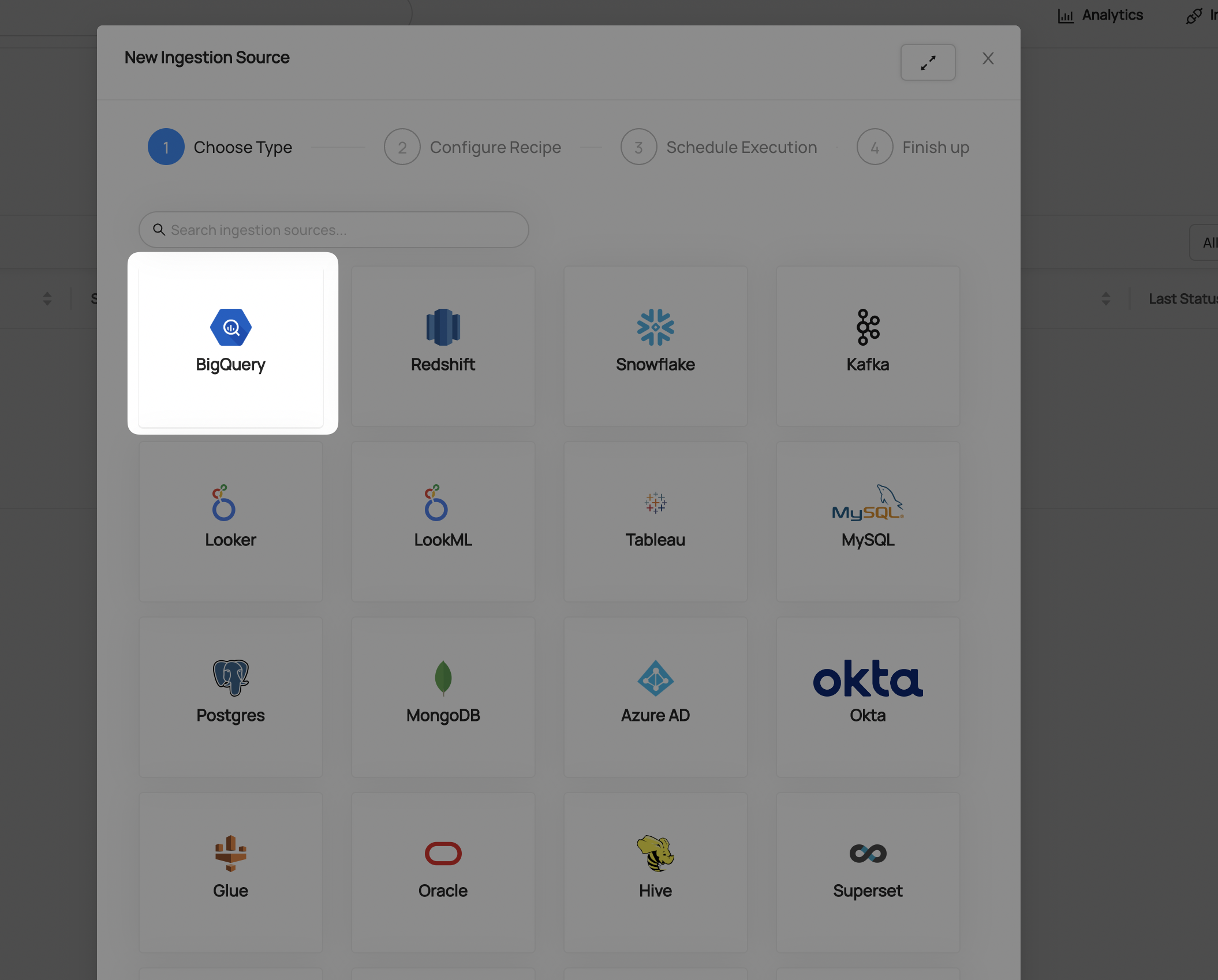

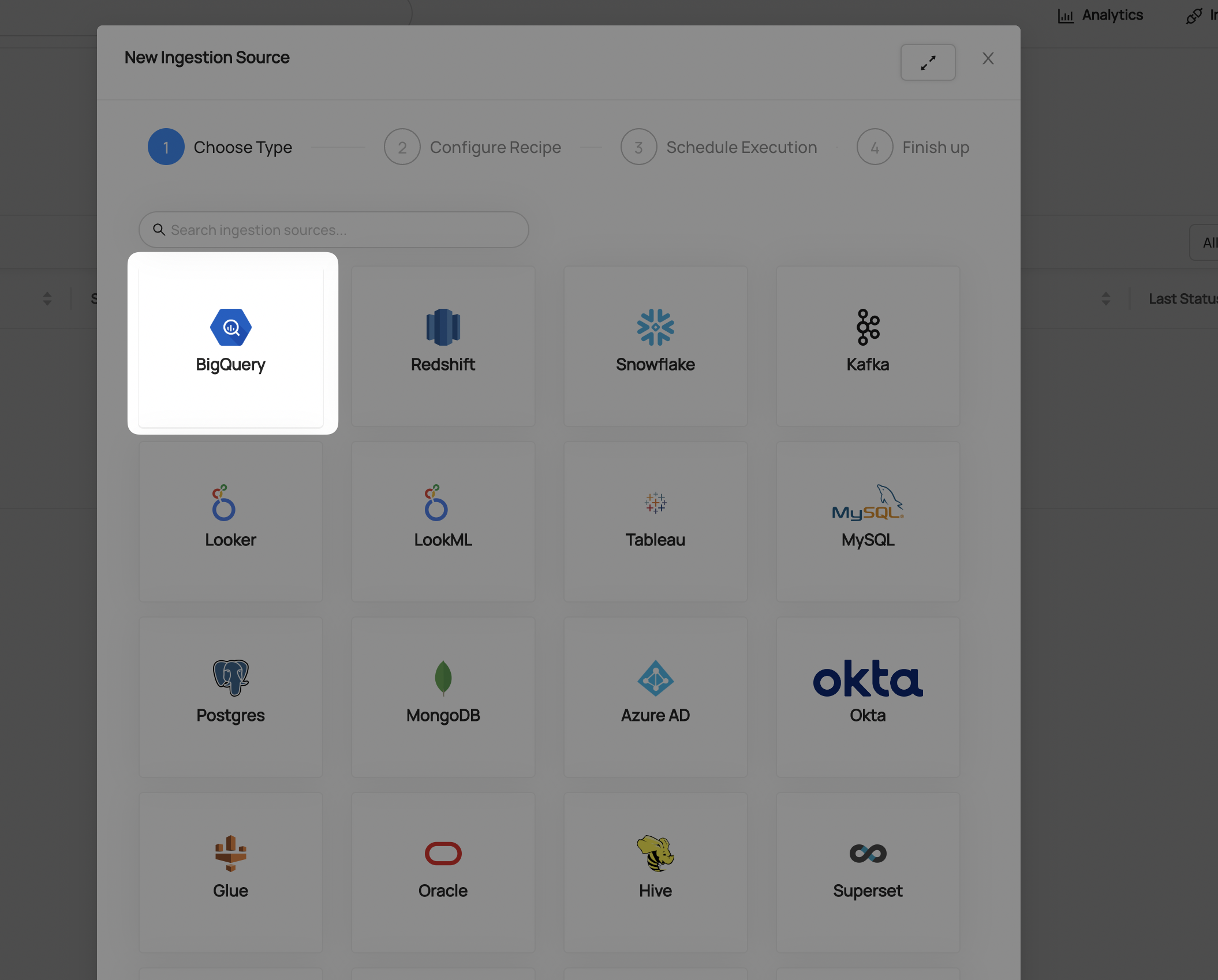

+6. Select BigQuery

+

+


+

+

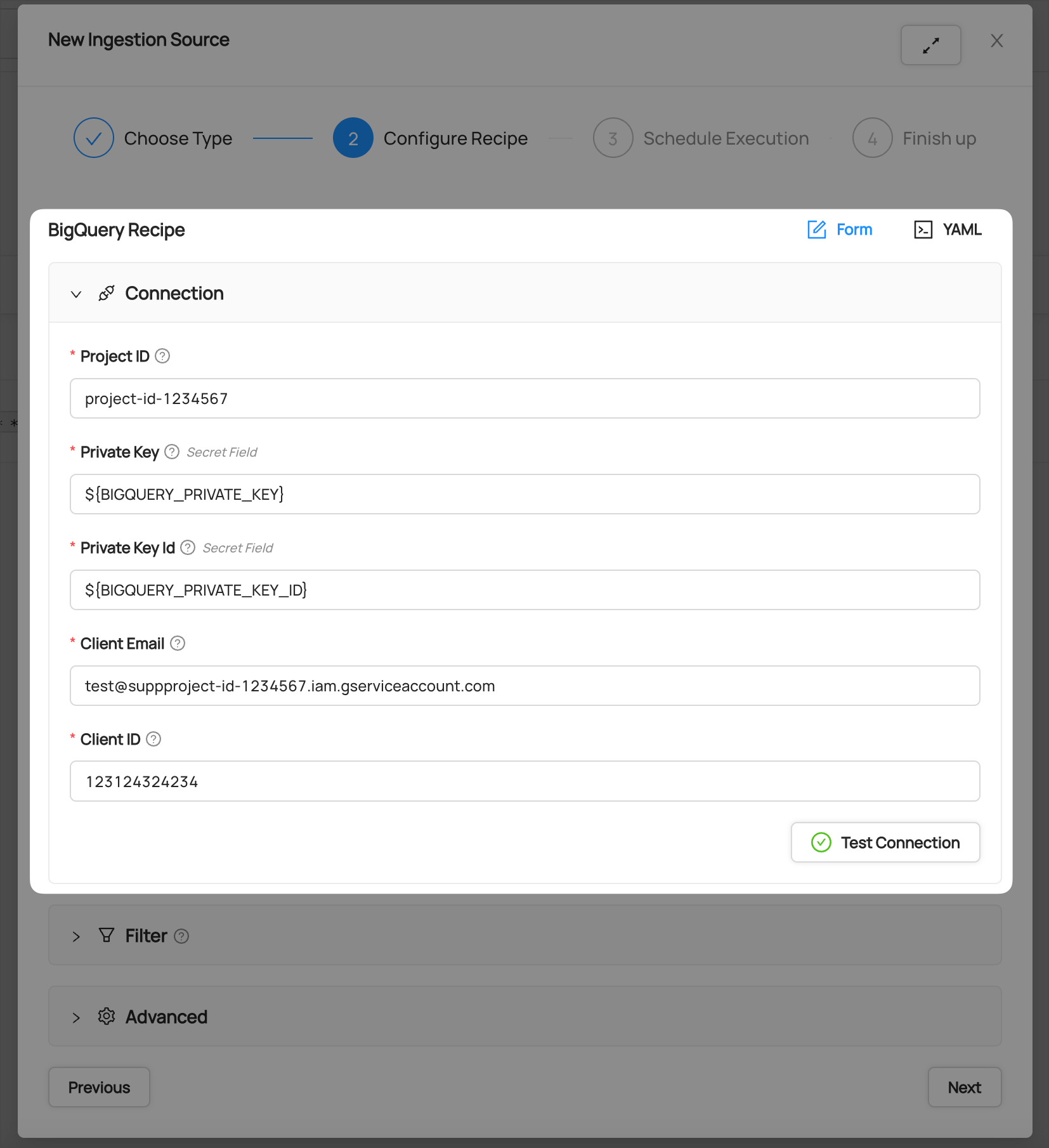

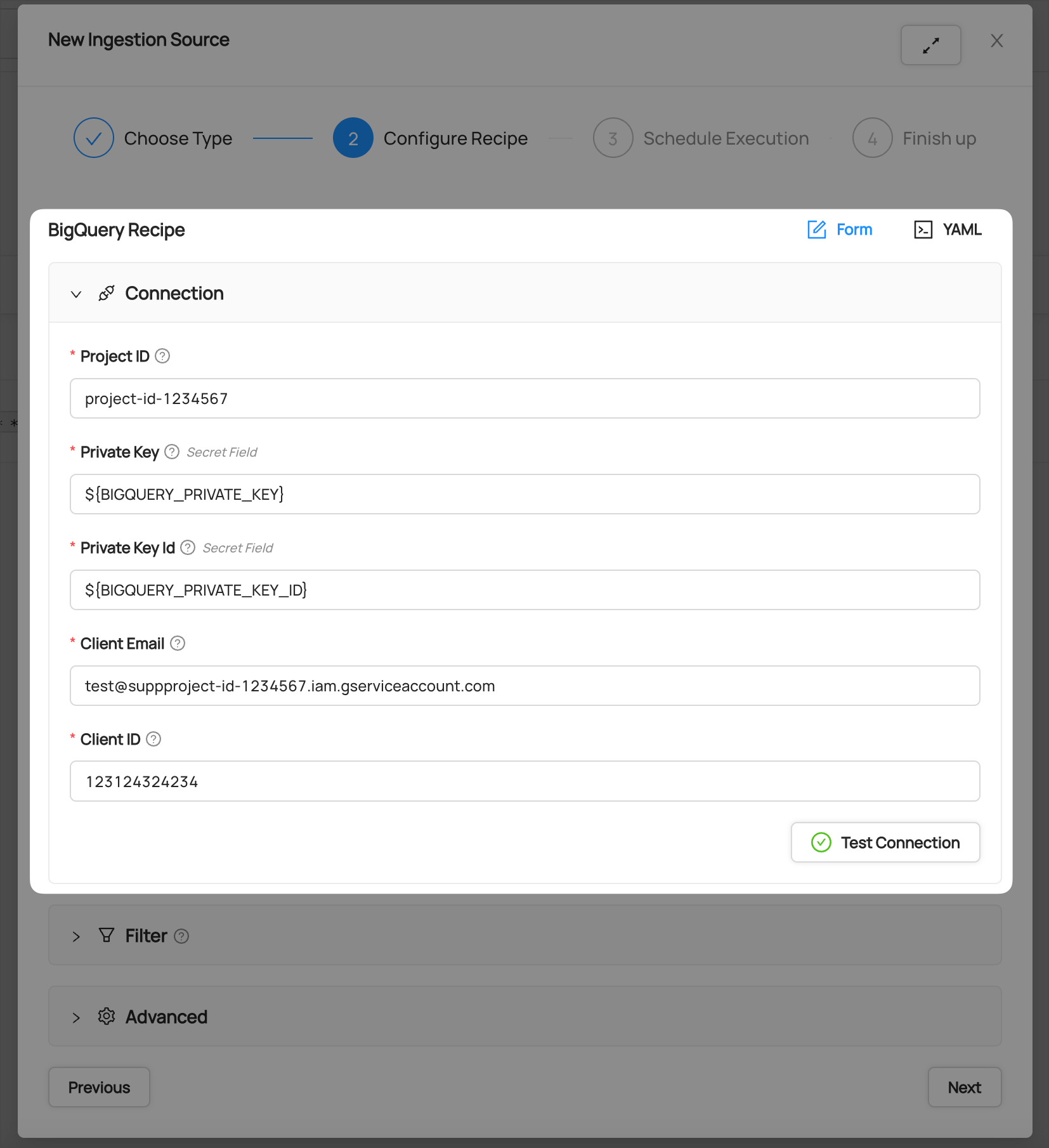

+7. Fill out the BigQuery Recipe

+

+You can find the following details in your Service Account Key file:

+

+* Project ID

+* Client Email

+* Client ID

+

+Populate the Secret Fields by selecting the Private Key and Private Key ID secrets you created in steps 3 and 4.
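
To make the mapping between the key file and the recipe form concrete, here is a minimal Python sketch. It is not what the DataHub UI runs: the function name `build_recipe`, the nested `credential` layout, and the `${SECRET_NAME}` reference syntax are assumptions for illustration, so check the BigQuery source reference before relying on them. The sketch copies only the non-secret values from the key file and refers to the two secrets by name.

```python
import json


def build_recipe(key,
                 private_key_secret="BIGQUERY_PRIVATE_KEY",
                 private_key_id_secret="BIGQUERY_PRIVATE_KEY_ID"):
    """Build a recipe-like dict from a parsed Service Account Key.

    The layout below is an assumed shape, not a confirmed schema.
    The private key itself is never copied; it is referenced by secret name.
    """
    return {
        "source": {
            "type": "bigquery",
            "config": {
                "credential": {
                    # Non-secret values taken from the key file
                    "project_id": key["project_id"],
                    "client_email": key["client_email"],
                    "client_id": key["client_id"],
                    # Secret values referenced by the names chosen in steps 3 and 4
                    "private_key_id": "${" + private_key_id_secret + "}",
                    "private_key": "${" + private_key_secret + "}",
                }
            },
        }
    }


if __name__ == "__main__":
    sample_key = {
        "project_id": "project-id-1234567",
        "client_email": "test@project-id-1234567.iam.gserviceaccount.com",
        "client_id": "113545814931671546333",
    }
    print(json.dumps(build_recipe(sample_key), indent=2))
```

Referencing the secrets by name keeps the private key out of the recipe text, which is the reason steps 3 and 4 store it as a secret first.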

+

+


+

+

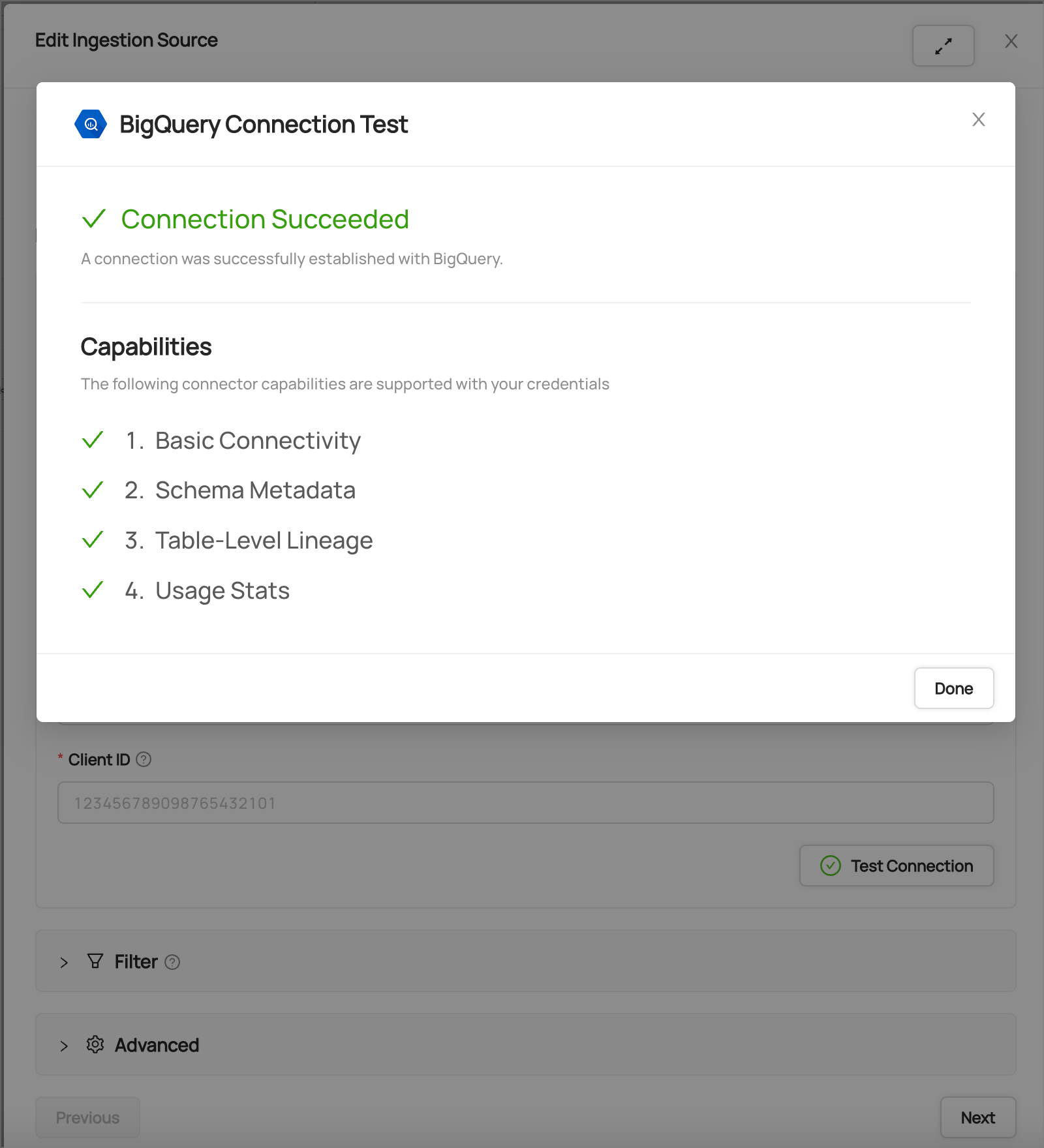

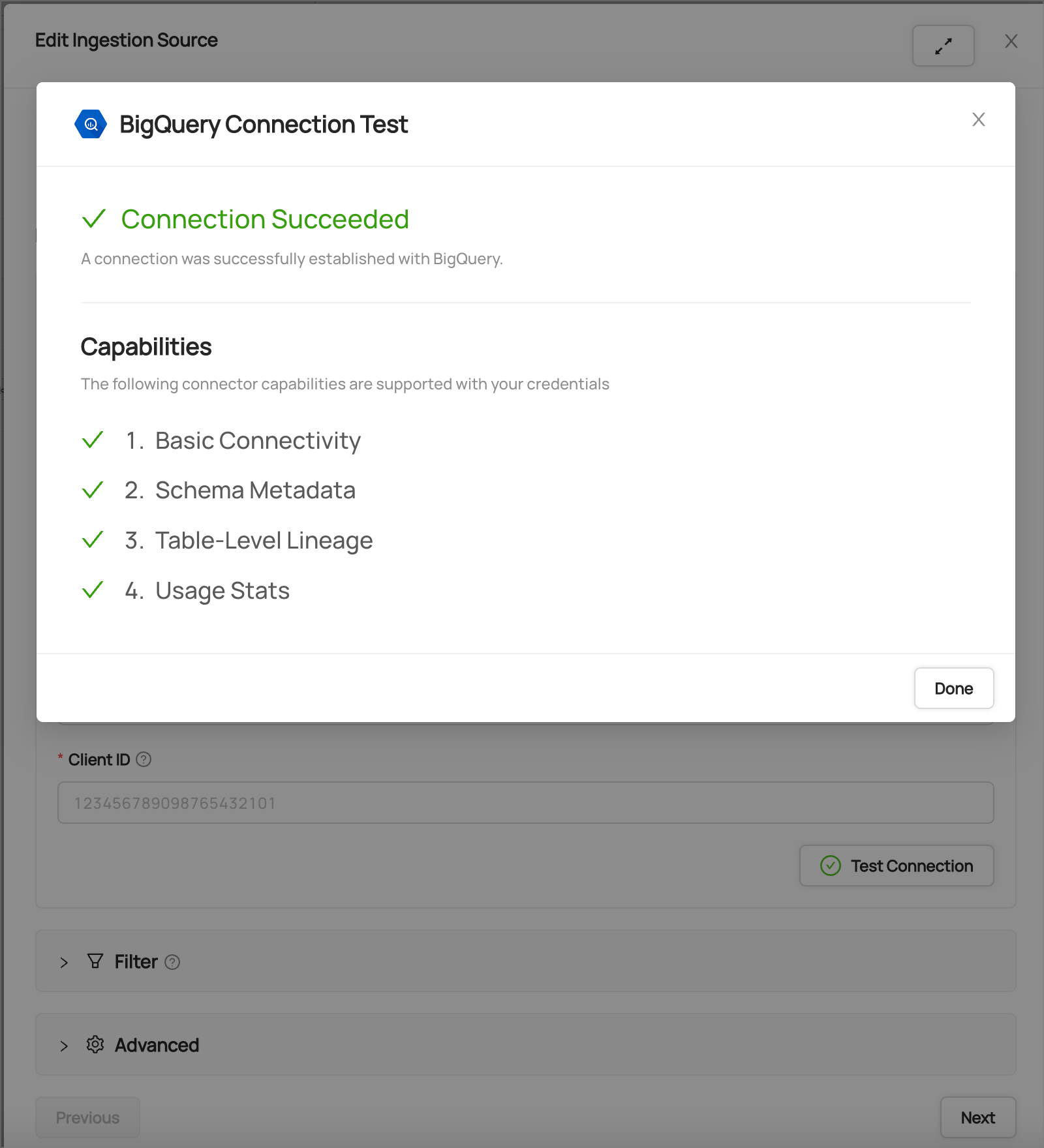

+8. Click **Test Connection**

+

+This step will ensure you have configured your credentials accurately and confirm you have the required permissions to extract all relevant metadata.

+

+


+

+

+After you have successfully tested your connection, click **Next**.

+

+## Schedule Execution

+

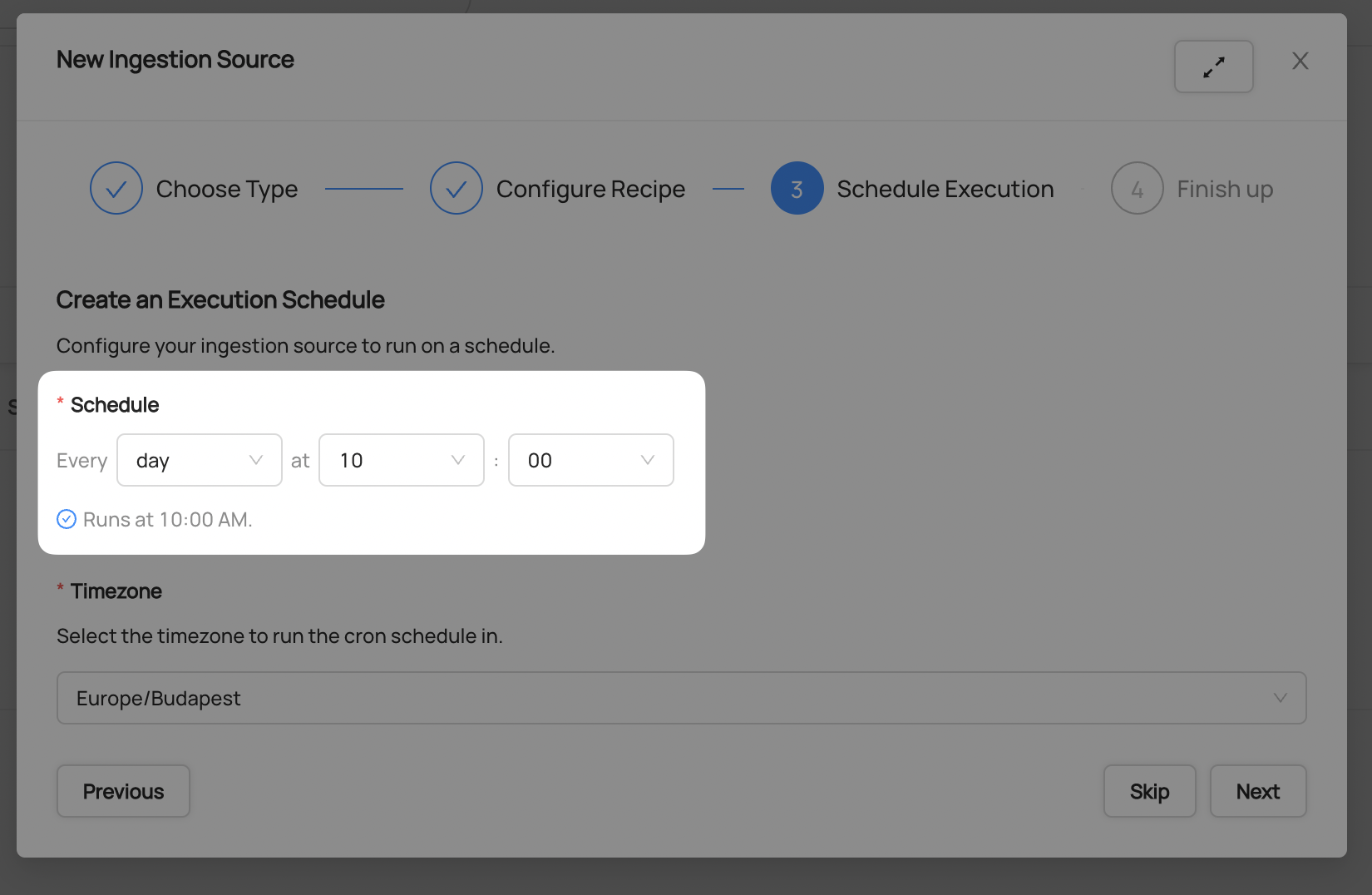

+Now it's time to schedule a recurring ingestion pipeline to regularly extract metadata from your BigQuery instance.

+

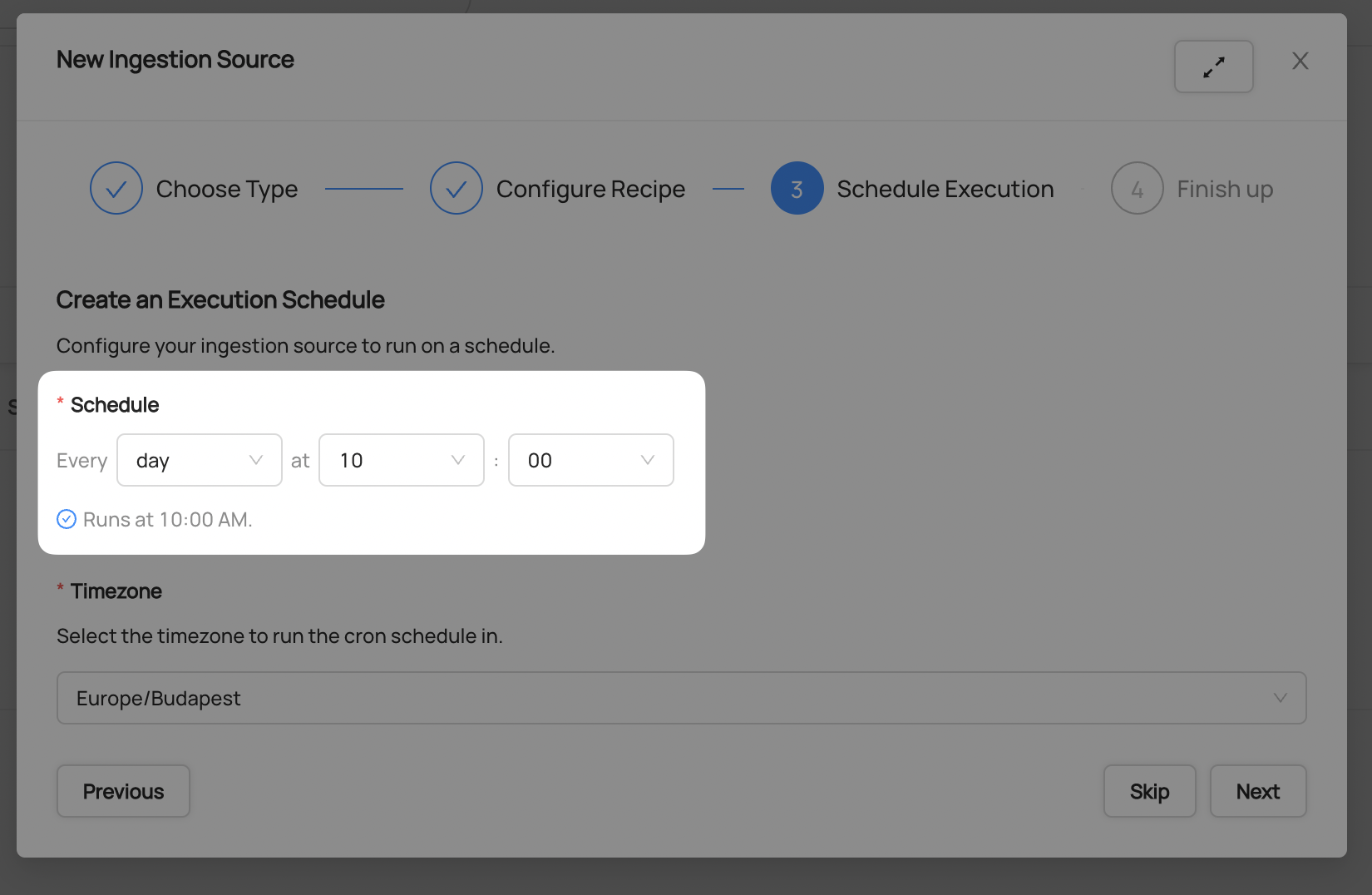

+9. Decide how often you want this ingestion to run (every minute, hour, day, month, or year) and select the interval from the dropdown

+


+

+

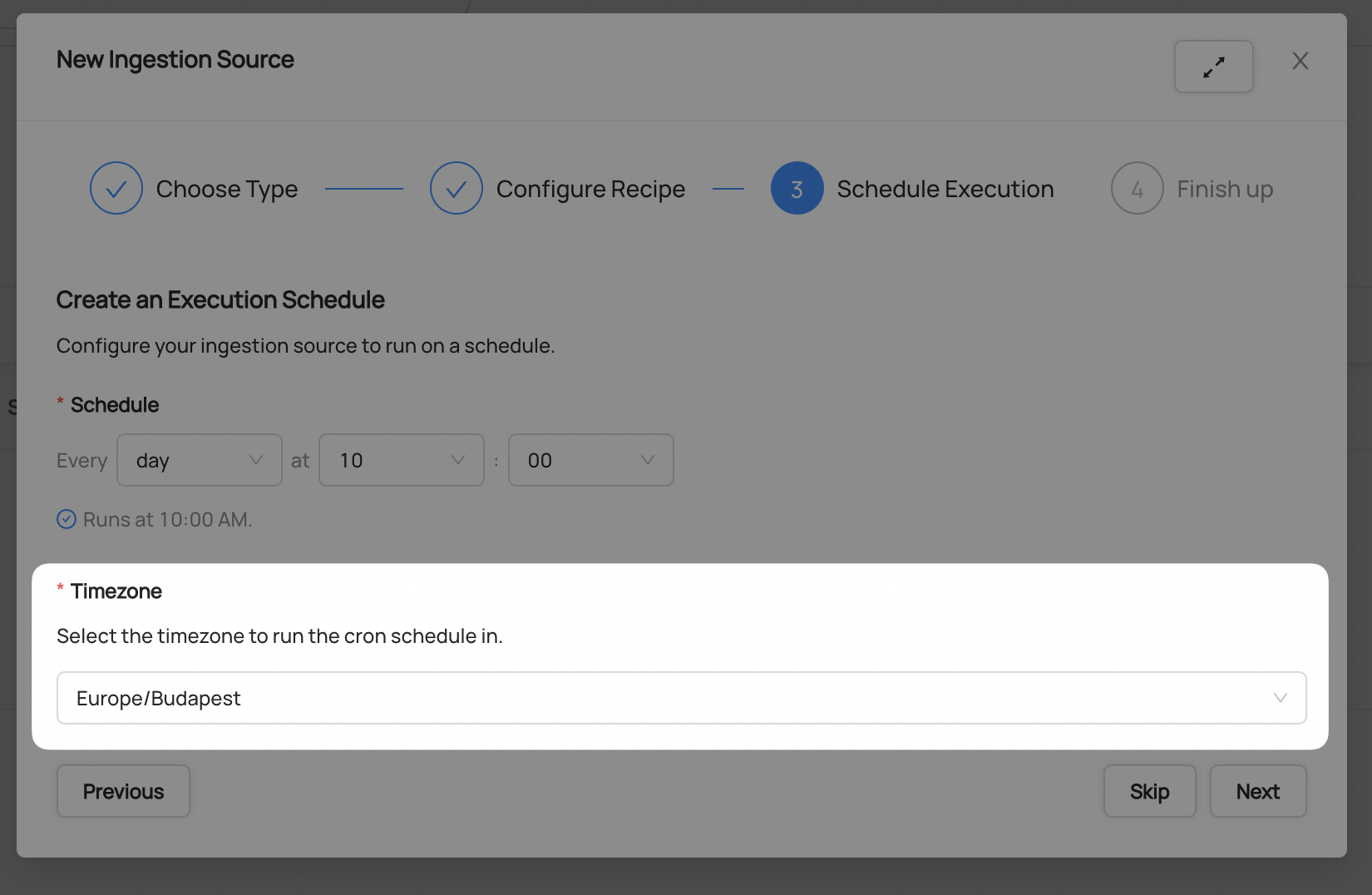

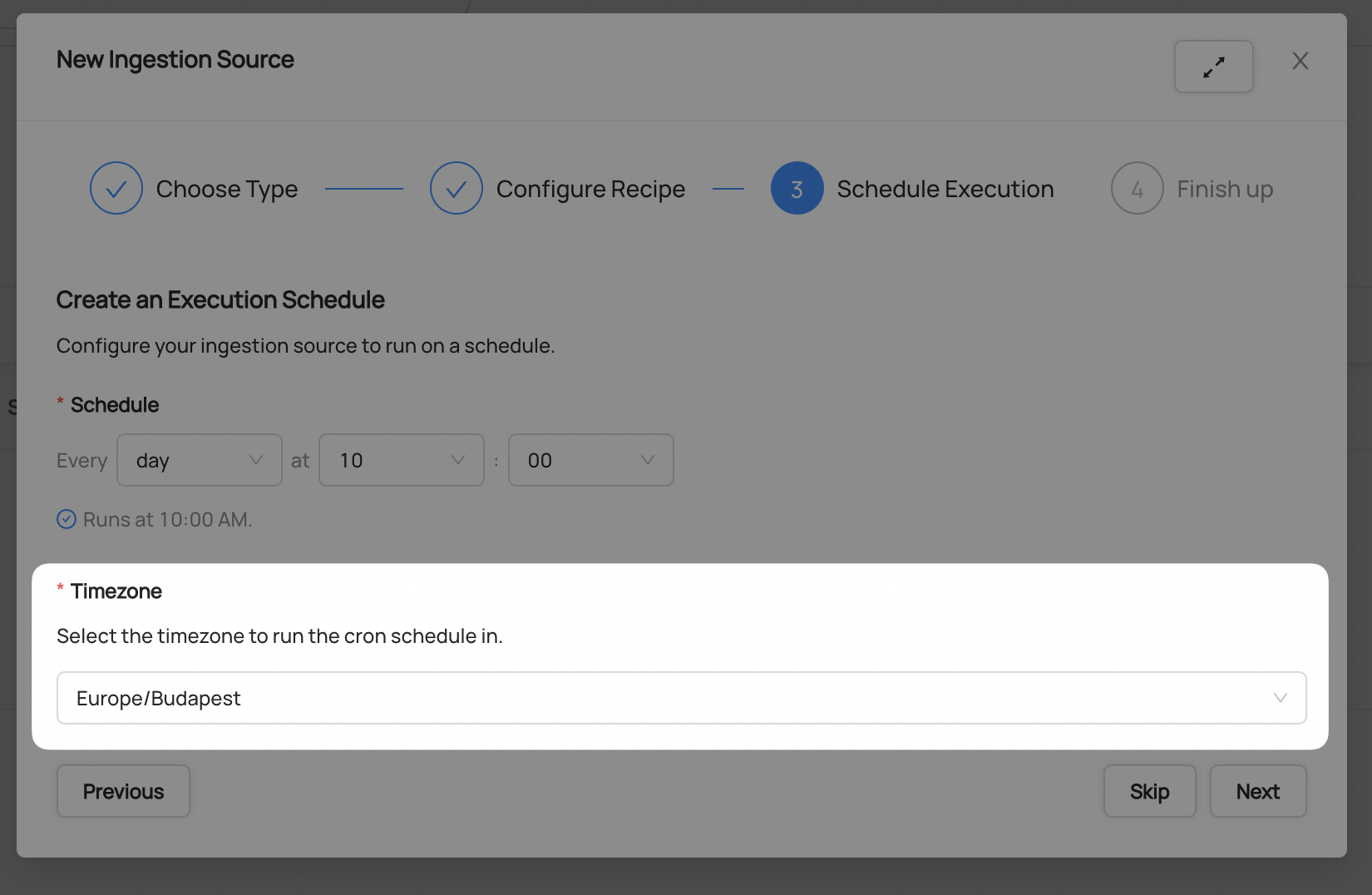

+10. Ensure you've selected the correct timezone

+


+

+

+11. Click **Next** when you are done

+

+## Finish Up

+

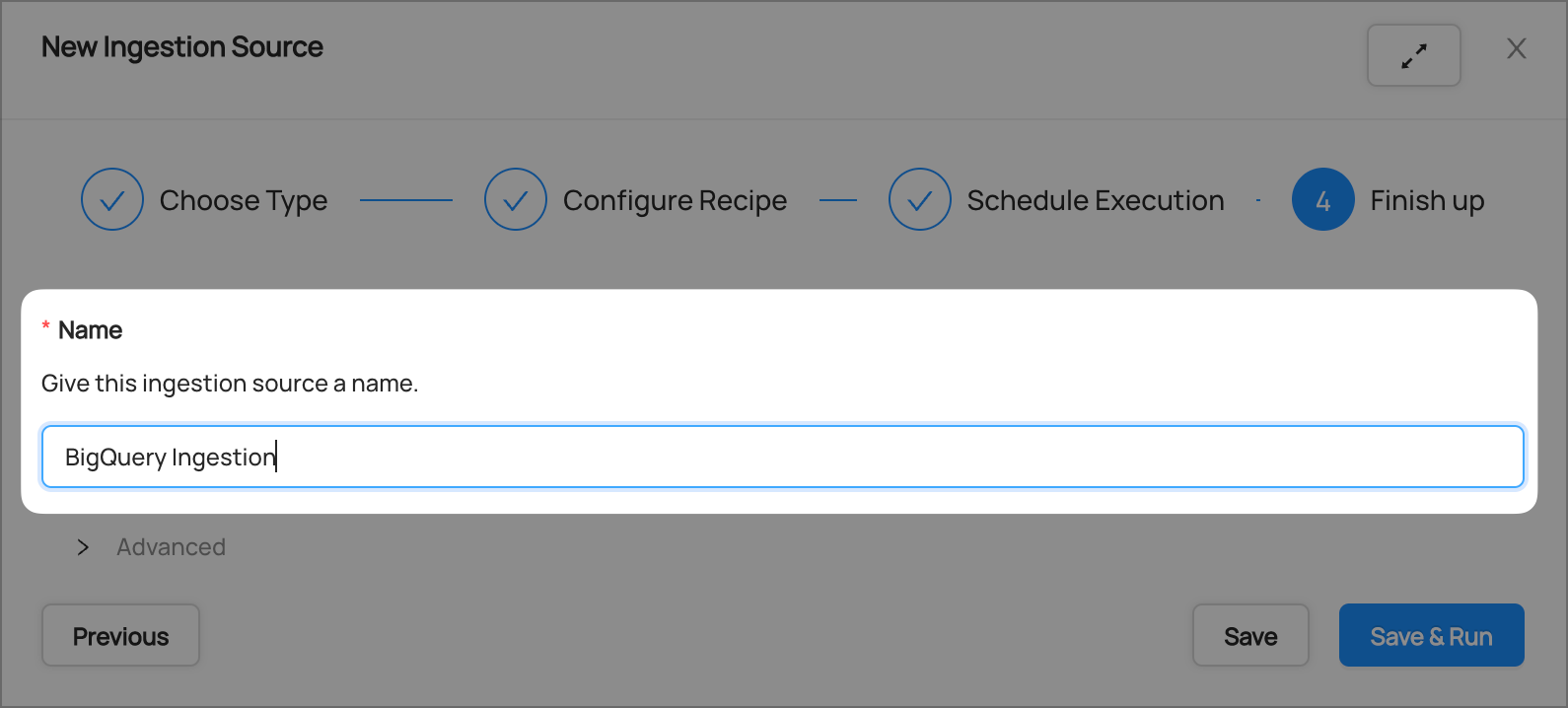

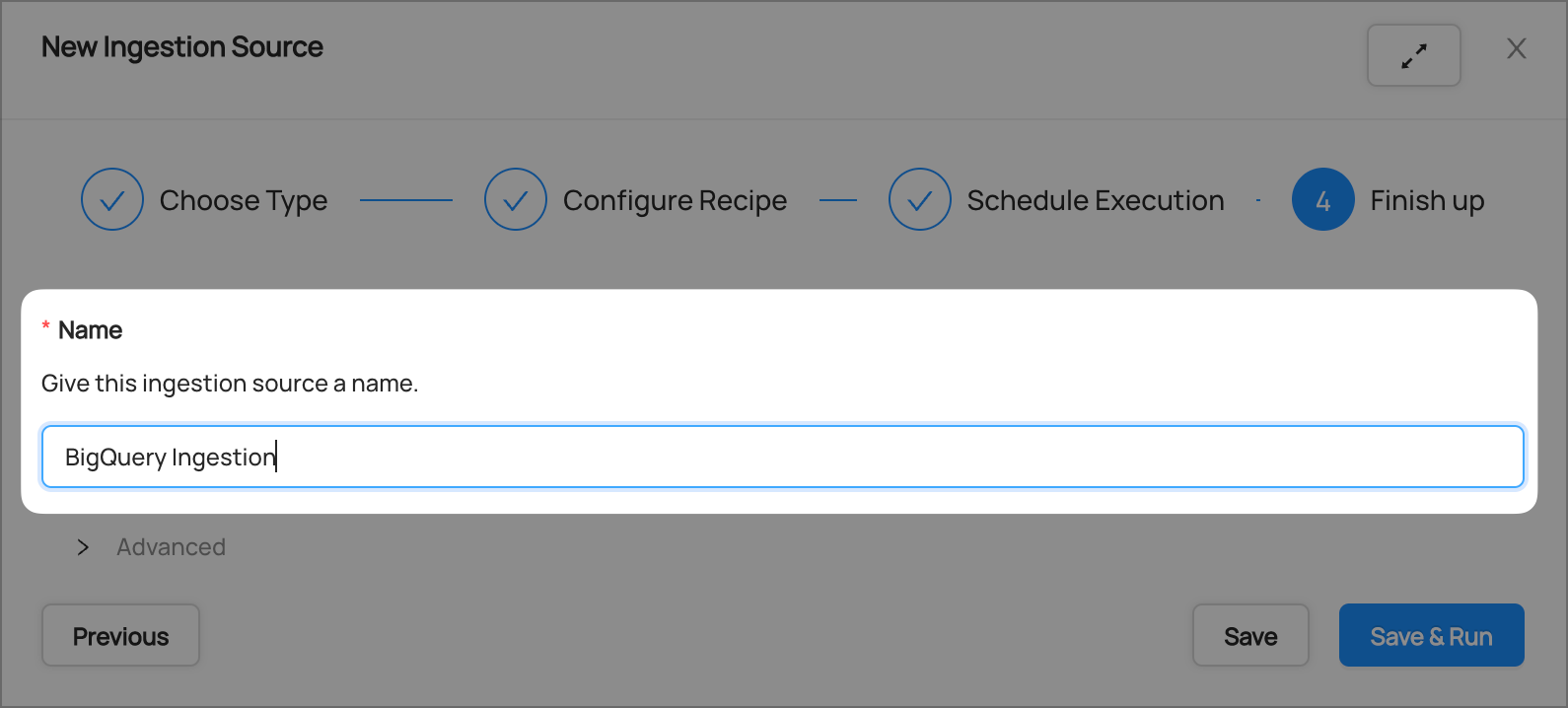

+12. Name your ingestion source, then click **Save and Run**

+


+

+

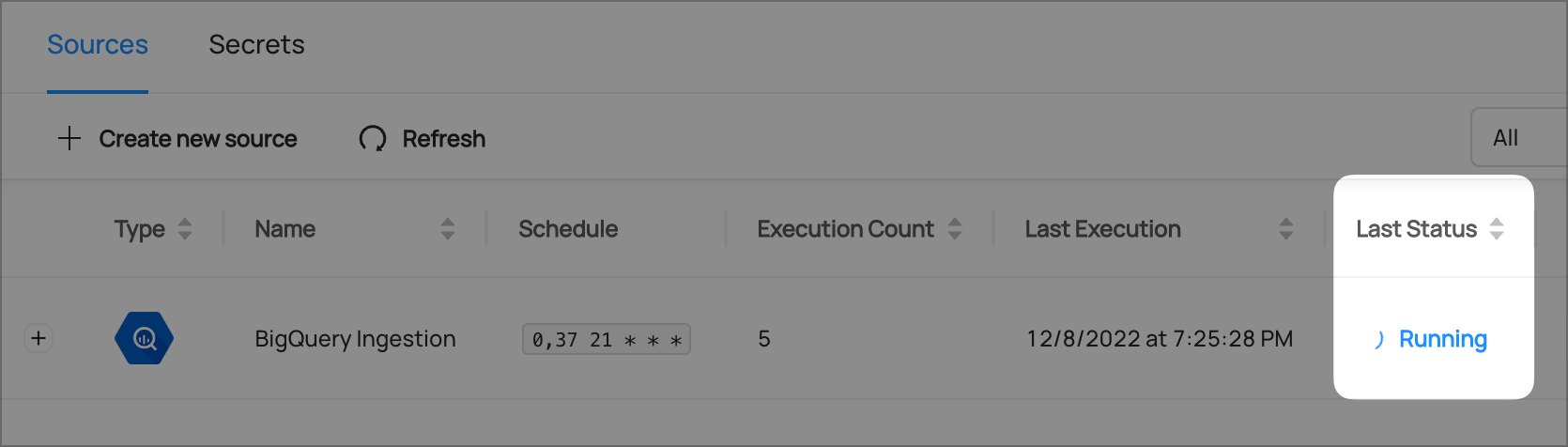

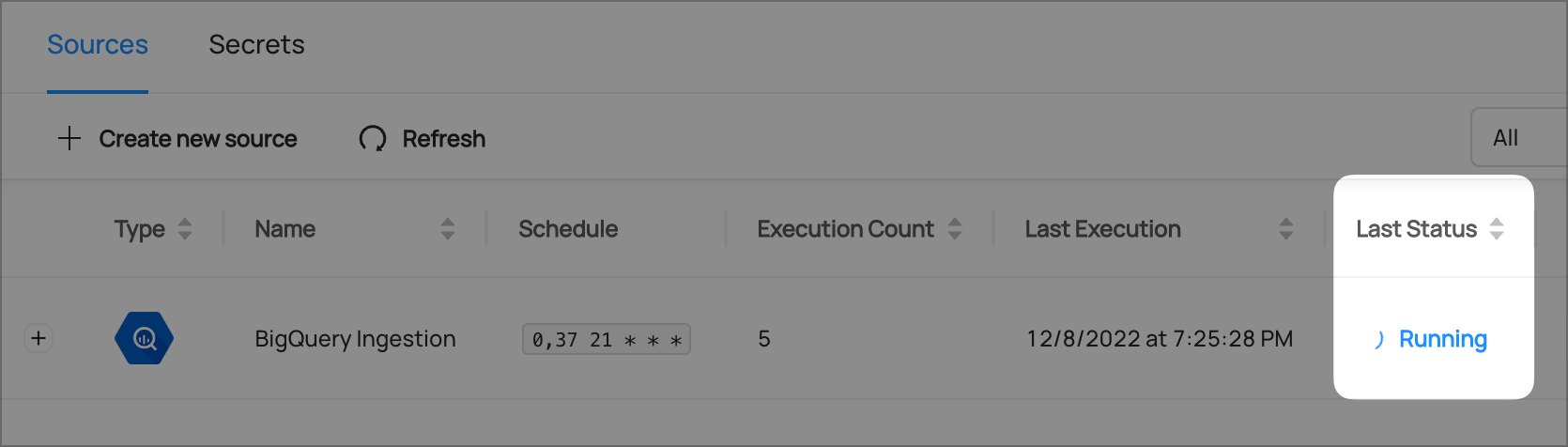

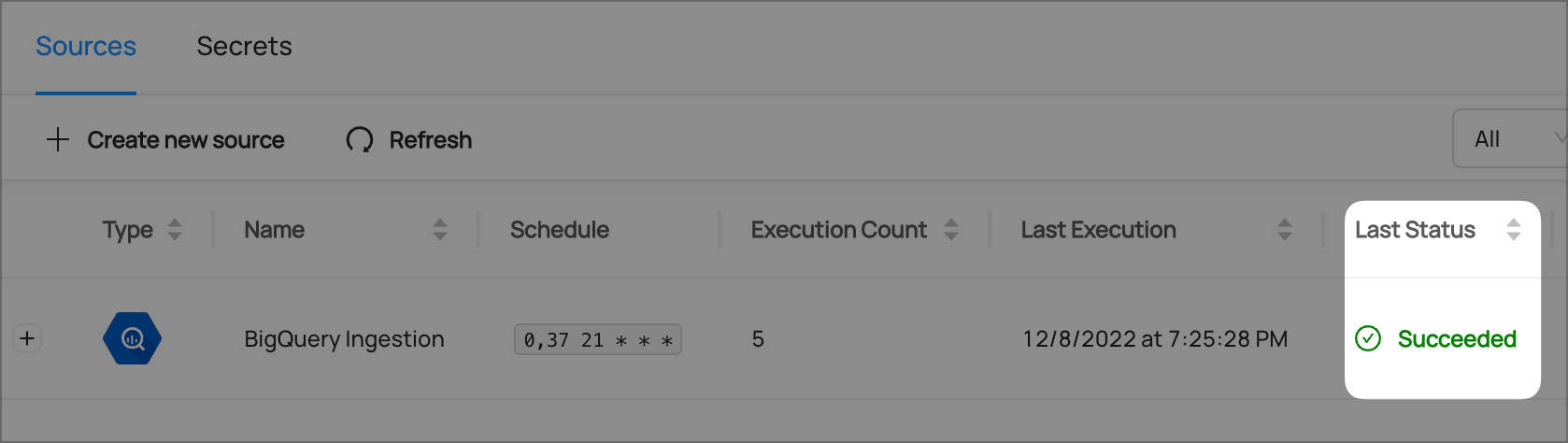

+You will now find your new ingestion source running

+

+


+

+

+## Validate Ingestion Runs

+

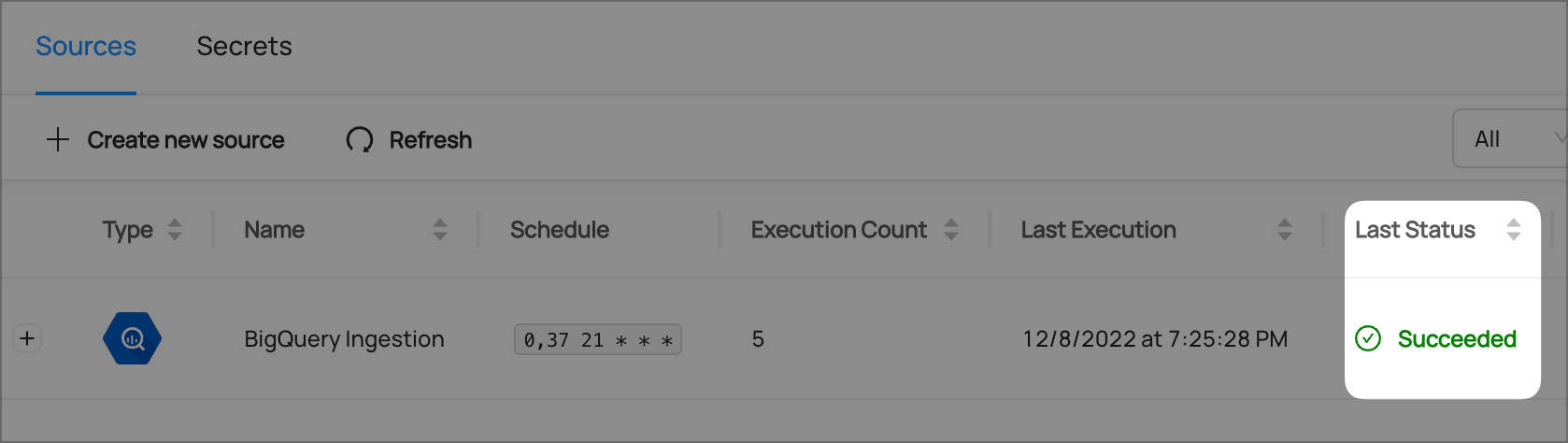

+13. View the latest status of ingestion runs on the Ingestion page

+

+


+

+

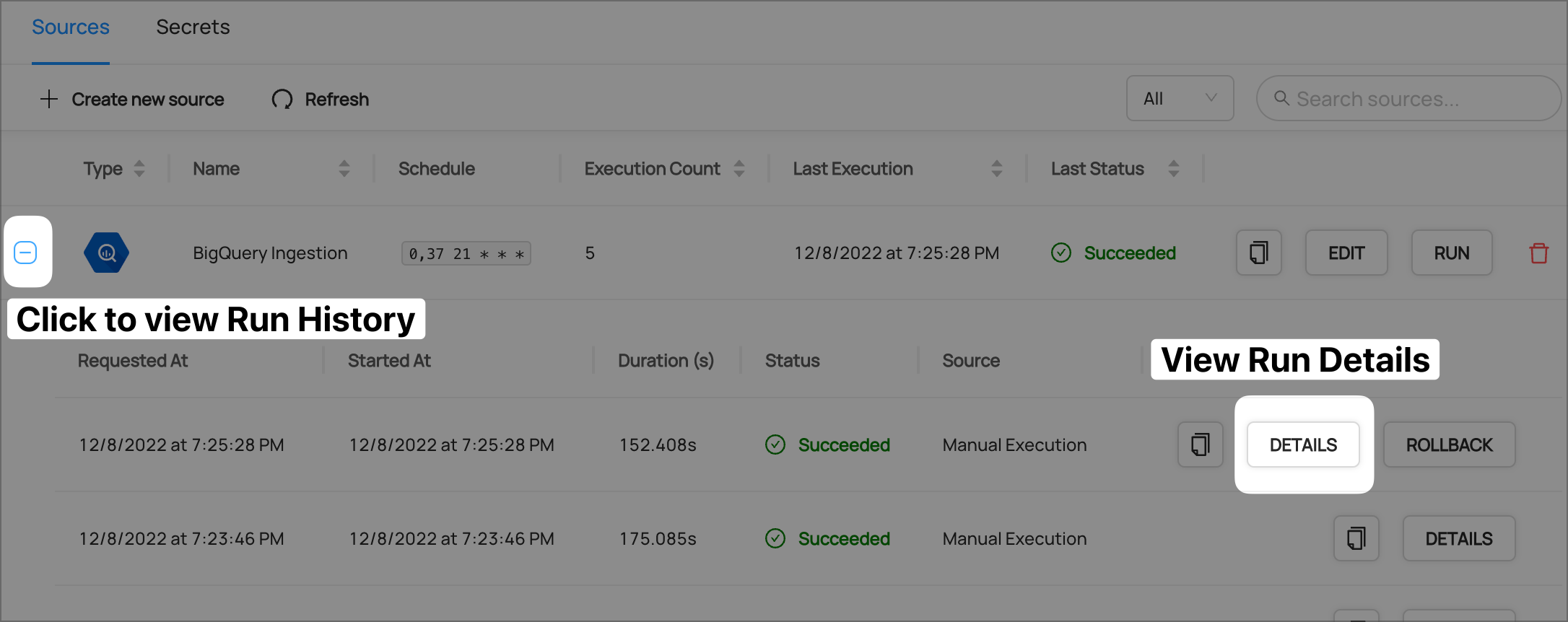

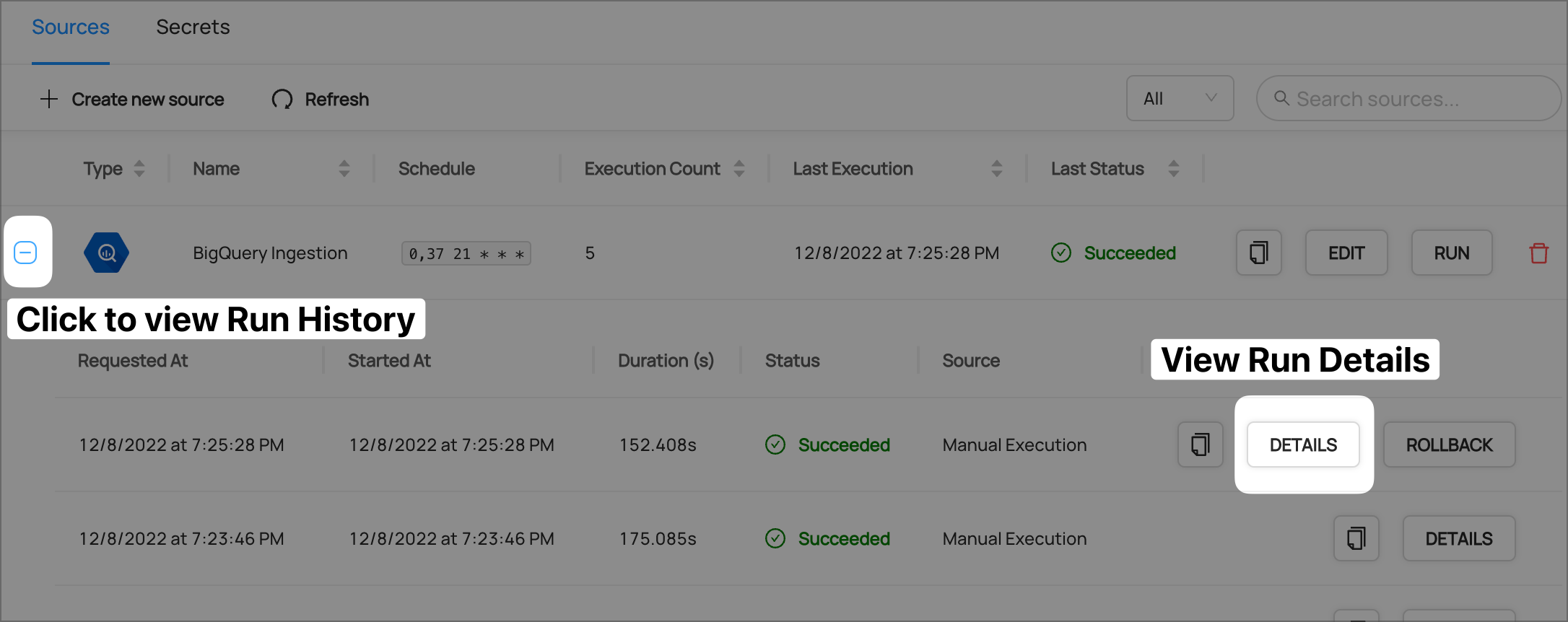

+14. Click the plus sign to expand the full list of historical runs and outcomes; click **Details** to see the outcomes of a specific run

+

+


+

+

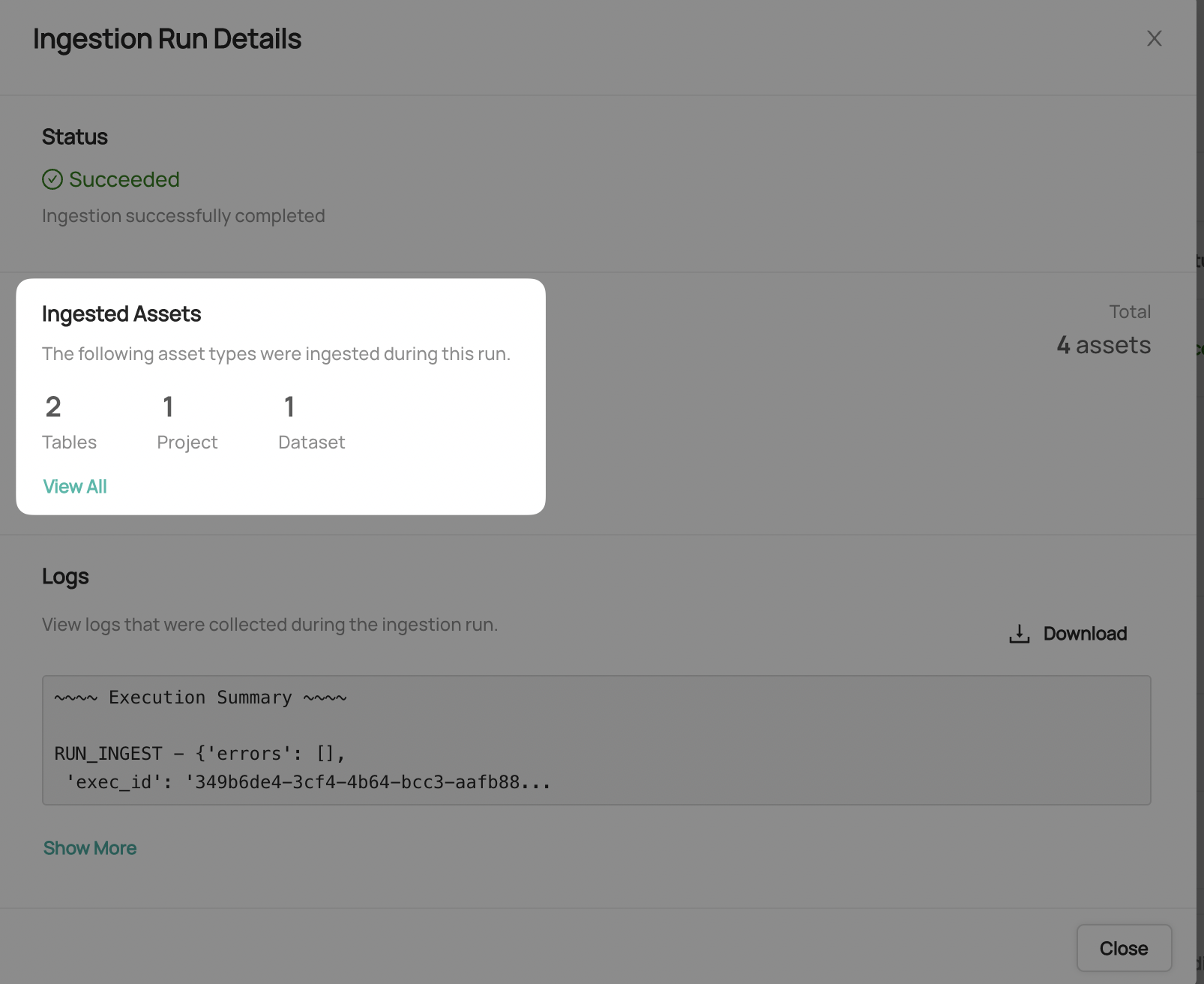

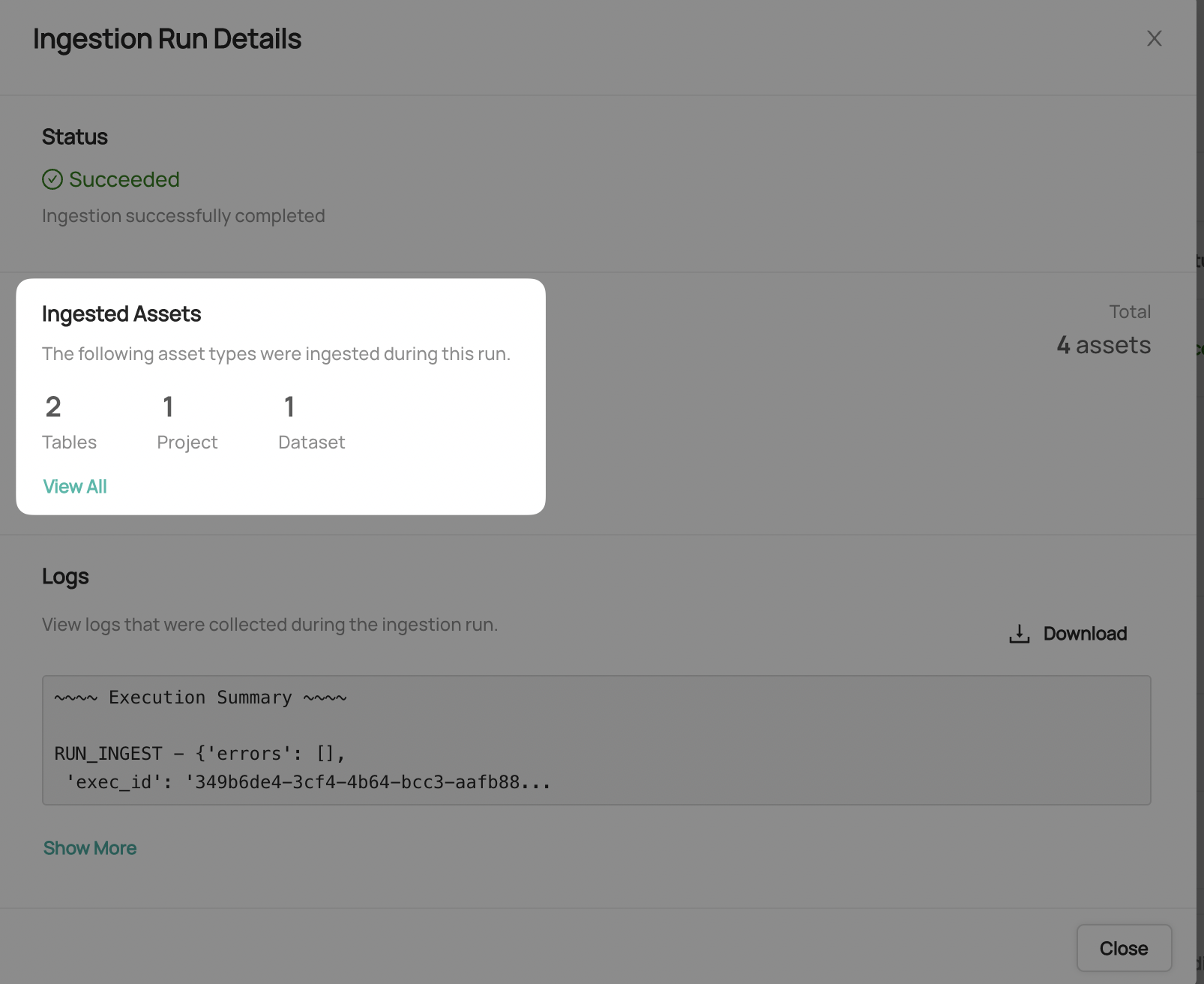

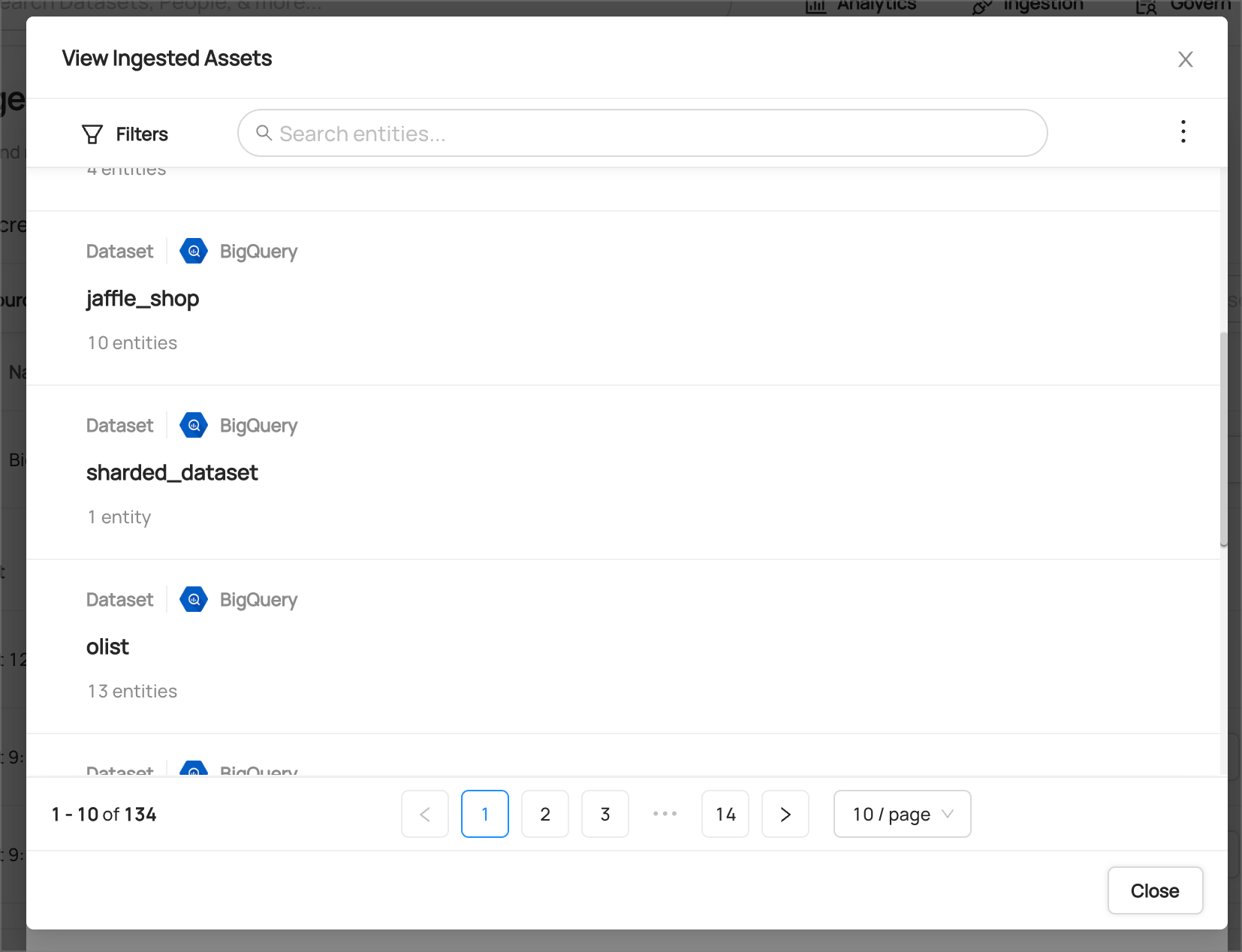

+15. From the Ingestion Run Details page, pick **View All** to see which entities were ingested

+

+


+

+

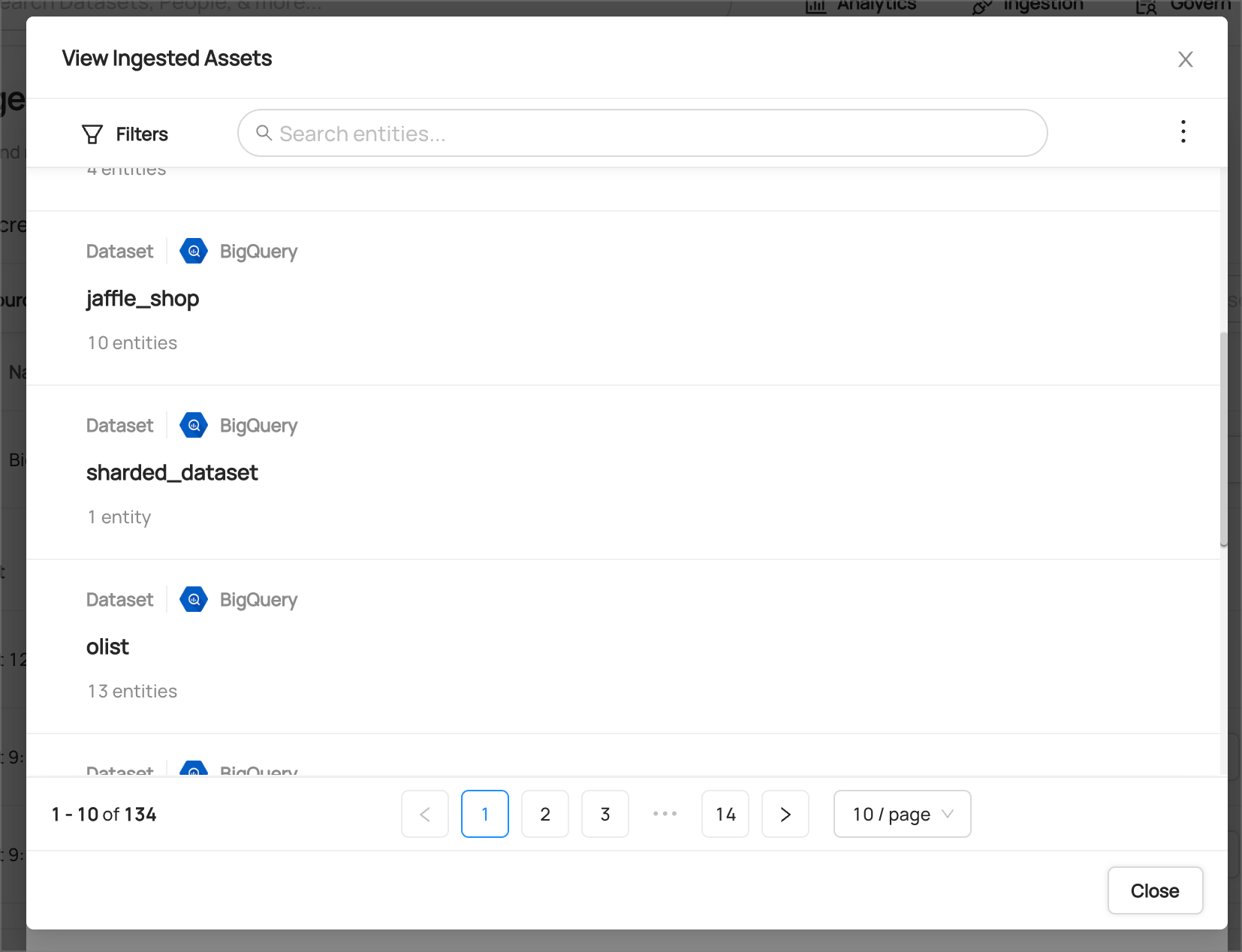

+16. Pick an entity from the list to manually validate that it contains the details you expect

+

+


+

+

+

+**Congratulations!** You've successfully set up BigQuery as an ingestion source for DataHub!

+

+*Need more help? Join the conversation in [Slack](http://slack.datahubproject.io)!*

diff --git a/docs/quick-ingestion-guides/bigquery/overview.md b/docs/quick-ingestion-guides/bigquery/overview.md

new file mode 100644

index 0000000000..8cd6879847

--- /dev/null

+++ b/docs/quick-ingestion-guides/bigquery/overview.md

@@ -0,0 +1,37 @@

+---

+title: Overview

+---

+# BigQuery Ingestion Guide: Overview

+

+## What You Will Get Out of This Guide

+

+This guide will help you set up the BigQuery connector through the DataHub UI to begin ingesting metadata into DataHub.

+

+Upon completing this guide, you will have a recurring ingestion pipeline that extracts metadata from BigQuery and loads it into DataHub. This will include the following BigQuery asset types:

+

+* [Projects](https://cloud.google.com/bigquery/docs/resource-hierarchy#projects)

+* [Datasets](https://cloud.google.com/bigquery/docs/datasets-intro)

+* [Tables](https://cloud.google.com/bigquery/docs/tables-intro)

+* [Views](https://cloud.google.com/bigquery/docs/views-intro)

+* [Materialized Views](https://cloud.google.com/bigquery/docs/materialized-views-intro)

+

+This recurring ingestion pipeline will also extract:

+

+* **Usage statistics** to help you understand recent query activity

+* **Table-level lineage** (where available) to automatically define interdependencies between datasets

+* **Table- and column-level profile statistics** to help you understand the shape of the data

+

+:::caution

+You will NOT have extracted [Routines](https://cloud.google.com/bigquery/docs/routines) or [Search Indexes](https://cloud.google.com/bigquery/docs/search-intro) from BigQuery, as the connector does not support ingesting these assets

+:::

+

+## Next Steps

+If that all sounds like what you're looking for, navigate to the [next page](setup.md), where we'll talk about prerequisites.

+

+## Advanced Guides and Reference

+If you're looking to do something more in-depth, want to use the CLI instead of the DataHub UI, or just need to look at the reference documentation for this connector, use these links:

+

+* Learn about CLI Ingestion in the [Introduction to Metadata Ingestion](../../../metadata-ingestion/README.md)

+* [BigQuery Ingestion Reference Guide](https://datahubproject.io/docs/generated/ingestion/sources/bigquery/#module-bigquery)

+

+*Need more help? Join the conversation in [Slack](http://slack.datahubproject.io)!*

diff --git a/docs/quick-ingestion-guides/bigquery/setup.md b/docs/quick-ingestion-guides/bigquery/setup.md

new file mode 100644

index 0000000000..3635ea66f7

--- /dev/null

+++ b/docs/quick-ingestion-guides/bigquery/setup.md

@@ -0,0 +1,60 @@

+---

+title: Setup

+---

+# BigQuery Ingestion Guide: Setup & Prerequisites

+

+To configure ingestion from BigQuery, you'll need a [Service Account](https://cloud.google.com/iam/docs/creating-managing-service-accounts) configured with the proper permission sets, and an associated [Service Account Key](https://cloud.google.com/iam/docs/creating-managing-service-account-keys).

+

+This setup guide will walk you through the steps you'll need to take via your Google Cloud Console.

+

+## BigQuery Prerequisites

+

+If you do not have an existing Service Account and Service Account Key, please work with your BigQuery Admin to ensure you have the appropriate permissions and/or roles to continue with this setup guide.

+

+When creating and managing new Service Accounts and Service Account Keys, we have found the following permissions and roles to be required:

+

+* Create a Service Account: `iam.serviceAccounts.create` permission

+* Assign roles to a Service Account: `serviceusage.services.enable` permission

+* Set permission policy to the project: `resourcemanager.projects.setIamPolicy` permission

+* Generate Key for Service Account: Service Account Key Admin (`roles/iam.serviceAccountKeyAdmin`) IAM role

+

+:::note

+Please refer to the BigQuery [Permissions](https://cloud.google.com/iam/docs/permissions-reference) and [IAM Roles](https://cloud.google.com/iam/docs/understanding-roles) references for details

+:::

+

+## BigQuery Setup

+

+1. To set up a new Service Account, follow [this guide](https://cloud.google.com/iam/docs/creating-managing-service-accounts)

+

+2. When you are creating a Service Account, assign the following predefined Roles:

+ - [BigQuery Job User](https://cloud.google.com/bigquery/docs/access-control#bigquery.jobUser)

+ - [BigQuery Metadata Viewer](https://cloud.google.com/bigquery/docs/access-control#bigquery.metadataViewer)

+ - [BigQuery Resource Viewer](https://cloud.google.com/bigquery/docs/access-control#bigquery.resourceViewer) -> This role is for Table-Level Lineage and Usage extraction

+ - [Logs View Accessor](https://cloud.google.com/logging/docs/access-control) -> This role is for Table-Level Lineage and Usage extraction

+ - [BigQuery Data Viewer](https://cloud.google.com/bigquery/docs/access-control#bigquery.dataViewer) -> This role is for Profiling

+

+:::note

+You can always add roles to or remove roles from Service Accounts later on. Please refer to the BigQuery [Manage access to projects, folders, and organizations](https://cloud.google.com/iam/docs/granting-changing-revoking-access) guide for more details.

+:::
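
If you prefer to grant the roles from step 2 on the command line rather than in the Cloud Console, the following Python sketch prints one `gcloud projects add-iam-policy-binding` command per role. The role IDs in `ROLES` are not part of this guide; they are the identifiers assumed to correspond to the display names above, so confirm them against the IAM Roles reference. The function name `grant_commands` and the sample project and email values are hypothetical.

```python
# Assumed role IDs for the predefined roles listed in step 2.
ROLES = {
    "roles/bigquery.jobUser": "BigQuery Job User",
    "roles/bigquery.metadataViewer": "BigQuery Metadata Viewer",
    "roles/bigquery.resourceViewer": "BigQuery Resource Viewer (lineage and usage)",
    "roles/logging.viewAccessor": "Logs View Accessor (lineage and usage)",
    "roles/bigquery.dataViewer": "BigQuery Data Viewer (profiling)",
}


def grant_commands(project_id, service_account_email):
    """Return one gcloud command string per role; nothing is executed."""
    member = "serviceAccount:" + service_account_email
    return [
        'gcloud projects add-iam-policy-binding {} --member="{}" --role="{}"'.format(
            project_id, member, role
        )
        for role in ROLES
    ]


if __name__ == "__main__":
    # Hypothetical values; substitute your own project and Service Account.
    for command in grant_commands(
        "project-id-1234567",
        "test@project-id-1234567.iam.gserviceaccount.com",
    ):
        print(command)
```

The script only prints the commands so you can review them before running anything against your project.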

+

+3. Create and download a [Service Account Key](https://cloud.google.com/iam/docs/creating-managing-service-account-keys). We will use this to set up authentication within DataHub.

+

+The key file looks like this:

+```json

+{

+ "type": "service_account",

+ "project_id": "project-id-1234567",

+ "private_key_id": "d0121d0000882411234e11166c6aaa23ed5d74e0",

+ "private_key": "-----BEGIN PRIVATE KEY-----\nMIIyourkey\n-----END PRIVATE KEY-----",

+ "client_email": "test@project-id-1234567.iam.gserviceaccount.com",

+ "client_id": "113545814931671546333",

+ "auth_uri": "https://accounts.google.com/o/oauth2/auth",

+ "token_uri": "https://oauth2.googleapis.com/token",

+ "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",

+ "client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/test%40project-id-1234567.iam.gserviceaccount.com"

+}

+```
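
Before moving on, it can help to confirm that the downloaded key file contains every field the next page asks for. The sketch below is one way to do that in Python using only the standard library. The function name `missing_key_fields` and the choice of required fields are assumptions based on the values this guide uses, not an official validation routine.

```python
import json
from typing import List

# Fields this guide reads from the Service Account Key file.
REQUIRED_FIELDS = (
    "project_id",
    "private_key_id",
    "private_key",
    "client_email",
    "client_id",
)


def missing_key_fields(key_json: str) -> List[str]:
    """Return the required fields that are absent or empty in the key JSON."""
    key = json.loads(key_json)
    return [name for name in REQUIRED_FIELDS if not key.get(name)]


if __name__ == "__main__":
    # Placeholder content; in practice, read the text of your downloaded key file.
    sample = json.dumps({
        "type": "service_account",
        "project_id": "project-id-1234567",
        "private_key_id": "d0121d0000882411234e11166c6aaa23ed5d74e0",
        "client_email": "test@project-id-1234567.iam.gserviceaccount.com",
        "client_id": "113545814931671546333",
    })
    print(missing_key_fields(sample))
```

An empty list means all five fields are present. The check never prints the key contents, only the names of missing fields.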

+## Next Steps

+Once you've confirmed all of the above in BigQuery, it's time to [move on](configuration.md) to configure the actual ingestion source within the DataHub UI.

+

+*Need more help? Join the conversation in [Slack](http://slack.datahubproject.io)!*

+


+