diff --git a/metadata-ingestion/docs/sources/vertexai/vertexai_pre.md b/metadata-ingestion/docs/sources/vertexai/vertexai_pre.md

index a1e0259dd7..890ccc8df1 100644

--- a/metadata-ingestion/docs/sources/vertexai/vertexai_pre.md

+++ b/metadata-ingestion/docs/sources/vertexai/vertexai_pre.md

@@ -46,3 +46,37 @@ Please read the section to understand how to set up application default Credenti

client_email: "test@suppproject-id-1234567.iam.gserviceaccount.com"

client_id: "123456678890"

```

+

+### Integration Details

+

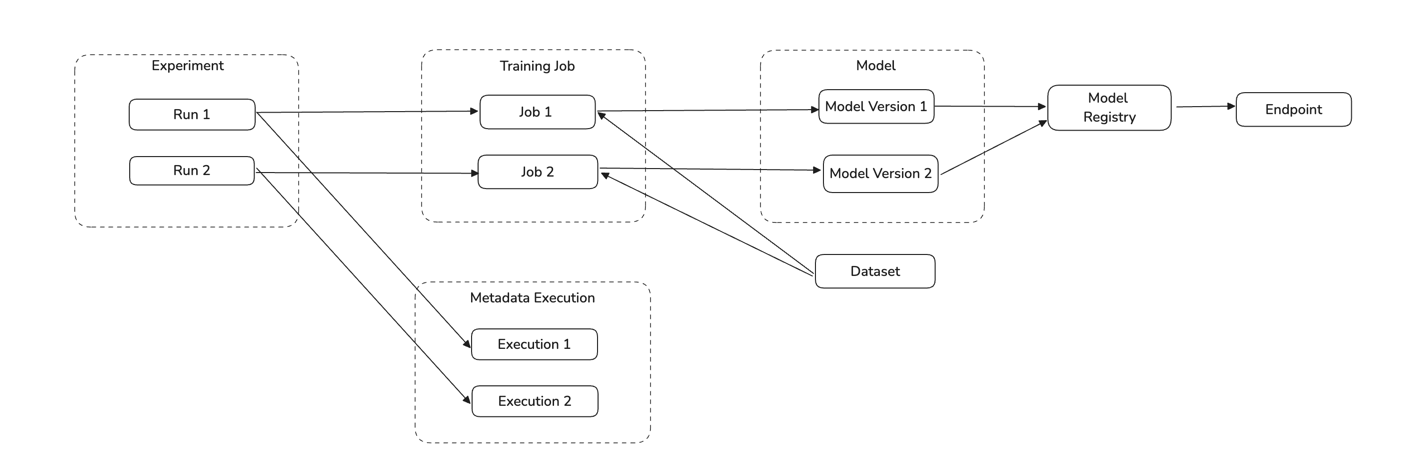

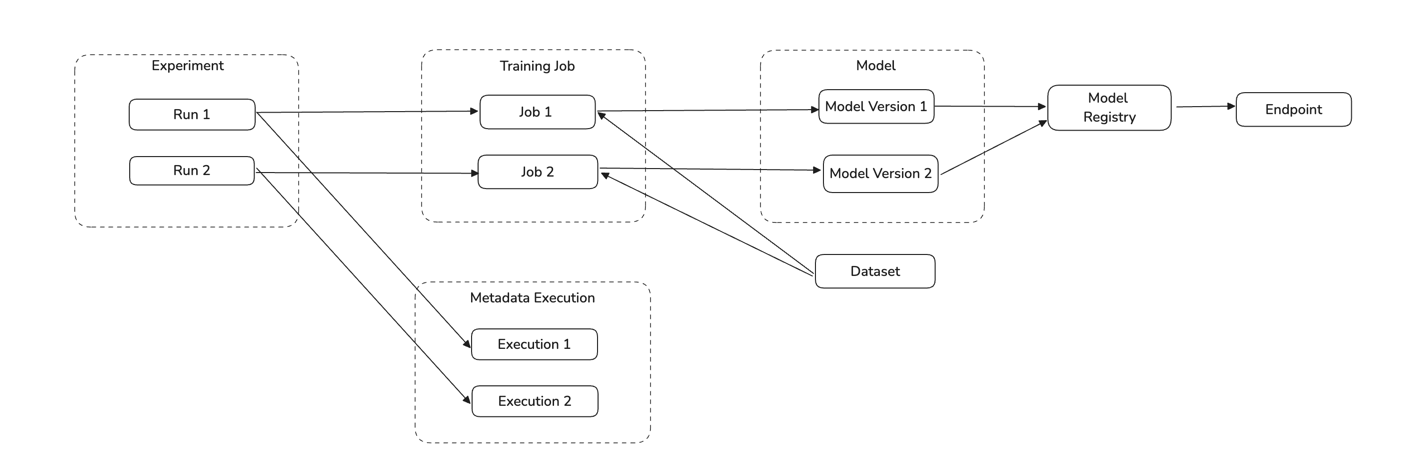

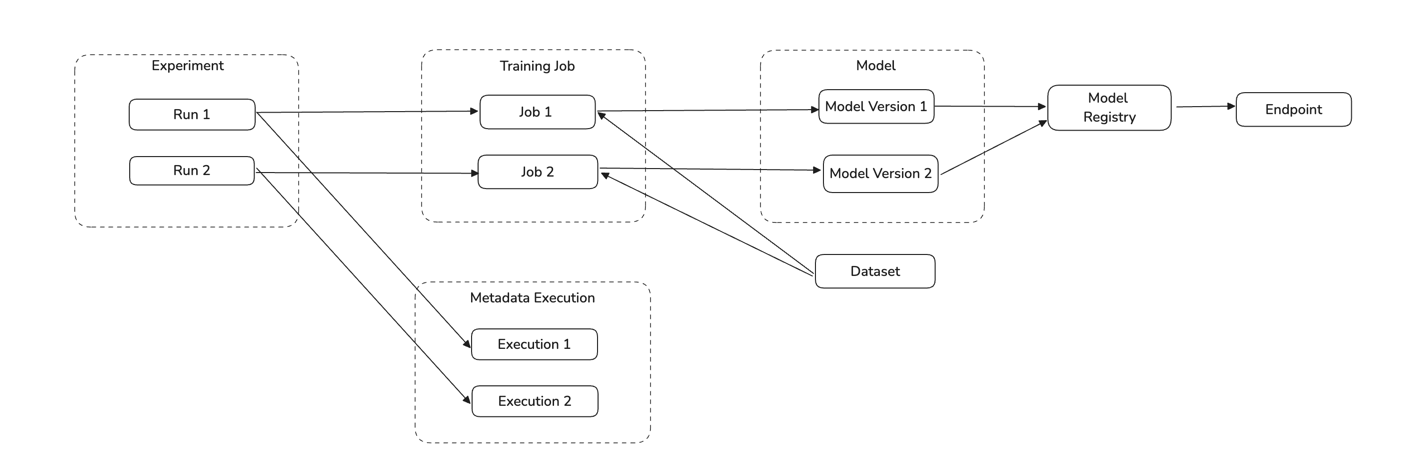

+The ingestion job extracts Models, Datasets, Training Jobs, Endpoints, Experiments, and Experiment Runs from a given project and region in Vertex AI.

+

+#### Concept Mapping

+

+This ingestion source maps the following Vertex AI Concepts to DataHub Concepts:

+

+| Source Concept | DataHub Concept | Notes |

+|:--------------------------------:|:---:|:----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------:|

+| [`Model`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.Model) | [`MlModelGroup`](https://datahubproject.io/docs/generated/metamodel/entities/mlmodelgroup/) | The name of a Model Group is the same as the Model's name. Models serve as containers for multiple versions of the same model in Vertex AI. |

+| [`Model Version`](https://cloud.google.com/vertex-ai/docs/model-registry/versioning) | [`MlModel`](https://datahubproject.io/docs/generated/metamodel/entities/mlmodel/) | The name of a Model is `{model_name}_{model_version}` (e.g. `my_vertexai_model_1`) for a model registered to the Model Registry or deployed to an Endpoint. Each Model Version represents a specific iteration of a model with its own metadata. |

+| Dataset | [`Dataset`](https://datahubproject.io/docs/generated/metamodel/entities/dataset) | A Managed Dataset resource in Vertex AI is mapped to a Dataset in DataHub. Supported dataset types include [`Text`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.TextDataset), [`Tabular`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.TabularDataset), [`Image Dataset`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.ImageDataset), [`Video`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.VideoDataset), and [`TimeSeries`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.TimeSeriesDataset). |

+| [`Training Job`](https://cloud.google.com/vertex-ai/docs/beginner/beginners-guide) | [`DataProcessInstance`](https://datahubproject.io/docs/generated/metamodel/entities/dataprocessinstance/) | A Training Job is mapped to a DataProcessInstance in DataHub. Supported training job types include [`AutoMLTextTrainingJob`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.AutoMLTextTrainingJob), [`AutoMLTabularTrainingJob`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.AutoMLTabularTrainingJob), [`AutoMLImageTrainingJob`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.AutoMLImageTrainingJob), [`AutoMLVideoTrainingJob`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.AutoMLVideoTrainingJob), [`AutoMLForecastingTrainingJob`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.AutoMLForecastingTrainingJob), [`Custom Job`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.CustomJob), [`Custom TrainingJob`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.CustomTrainingJob), [`Custom Container TrainingJob`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.CustomContainerTrainingJob), and [`Custom Python Package TrainingJob`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.CustomPythonPackageTrainingJob). |

+| [`Experiment`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.Experiment) | [`Container`](https://datahubproject.io/docs/generated/metamodel/entities/container/) | Experiments organize related runs and serve as logical groupings for model development iterations. Each Experiment is mapped to a Container in DataHub. |

+| [`Experiment Run`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.ExperimentRun) | [`DataProcessInstance`](https://datahubproject.io/docs/generated/metamodel/entities/dataprocessinstance/) | An Experiment Run represents a single execution of an ML workflow. An Experiment Run tracks ML parameters, metrics, artifacts, and metadata. |

+| [`Execution`](https://cloud.google.com/python/docs/reference/aiplatform/latest/google.cloud.aiplatform.Execution) | [`DataProcessInstance`](https://datahubproject.io/docs/generated/metamodel/entities/dataprocessinstance/) | Metadata Execution resource for Vertex AI. A Metadata Execution is started in an experiment run and captures input and output artifacts. |

+
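The mapping in the table above can be sketched in a few lines of Python. This is only an illustration and not part of the change under review: `CONCEPT_MAPPING` and `model_version_name` are hypothetical names, and the only rule taken from the table is the `{model_name}_{model_version}` naming pattern.

```python
# Hypothetical lookup mirroring the concept mapping table (not the source's actual code).
CONCEPT_MAPPING = {
    "Model": "MlModelGroup",
    "Model Version": "MlModel",
    "Dataset": "Dataset",
    "Training Job": "DataProcessInstance",
    "Experiment": "Container",
    "Experiment Run": "DataProcessInstance",
    "Execution": "DataProcessInstance",
}


def model_version_name(model_name: str, model_version: str) -> str:
    """Build an MlModel name following the `{model_name}_{model_version}` rule."""
    return f"{model_name}_{model_version}"


if __name__ == "__main__":
    print(model_version_name("my_vertexai_model", "1"))
    print(CONCEPT_MAPPING["Experiment"])
```

Under this sketch, the model `my_vertexai_model` at version `1` yields the example name given in the table.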

+Vertex AI Concept Diagram:

+

+  +

+

+

+

+#### Lineage

+

+Lineage is emitted using the Vertex AI API to capture the following relationships:

+

+- A training job and a model (which training job produced a model)

+- A dataset and a training job (which dataset was consumed by a training job to train a model)

+- Experiment runs and an experiment

+- Metadata execution and an experiment run

+
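The four relationships listed above can be pictured as a small set of edges. The sketch below is a plain-Python illustration, not the source's emission logic: `LineageEdge`, `build_lineage`, and the relation labels are hypothetical, and the edge directions for experiments and executions are an assumption based on the wording of the list.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LineageEdge:
    """One relationship between two Vertex AI resources (hypothetical type)."""
    source: str
    target: str
    relation: str


def build_lineage(dataset: str, training_job: str, model: str,
                  experiment: str, run: str, execution: str) -> List[LineageEdge]:
    """Return the four relationships from the list above as edges."""
    return [
        LineageEdge(training_job, model, "produces"),     # training job -> model
        LineageEdge(dataset, training_job, "consumed_by"),  # dataset -> training job
        LineageEdge(experiment, run, "contains"),         # assumed direction
        LineageEdge(run, execution, "starts"),            # assumed direction
    ]


if __name__ == "__main__":
    for edge in build_lineage("ds", "job", "model", "exp", "run", "exec"):
        print(f"{edge.source} -[{edge.relation}]-> {edge.target}")
```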

diff --git a/metadata-ingestion/src/datahub/ingestion/source/vertexai/vertexai.py b/metadata-ingestion/src/datahub/ingestion/source/vertexai/vertexai.py

index f6febd1200..f2f76370bd 100644

--- a/metadata-ingestion/src/datahub/ingestion/source/vertexai/vertexai.py

+++ b/metadata-ingestion/src/datahub/ingestion/source/vertexai/vertexai.py

@@ -107,7 +107,6 @@ class ContainerKeyWithId(ContainerKey):

SourceCapability.DESCRIPTIONS,

"Extract descriptions for Vertex AI Registered Models and Model Versions",

)

-@capability(SourceCapability.TAGS, "Extract tags for Vertex AI Registered Model Stages")

class VertexAISource(Source):

platform: str = "vertexai"
