---

title: Configuration

---

# Configuring Your BigQuery Connector to DataHub

Now that you have created a Service Account and Service Account Key in BigQuery in [the prior step](setup.md), you can set up a connection via the DataHub UI.

## Configure Secrets

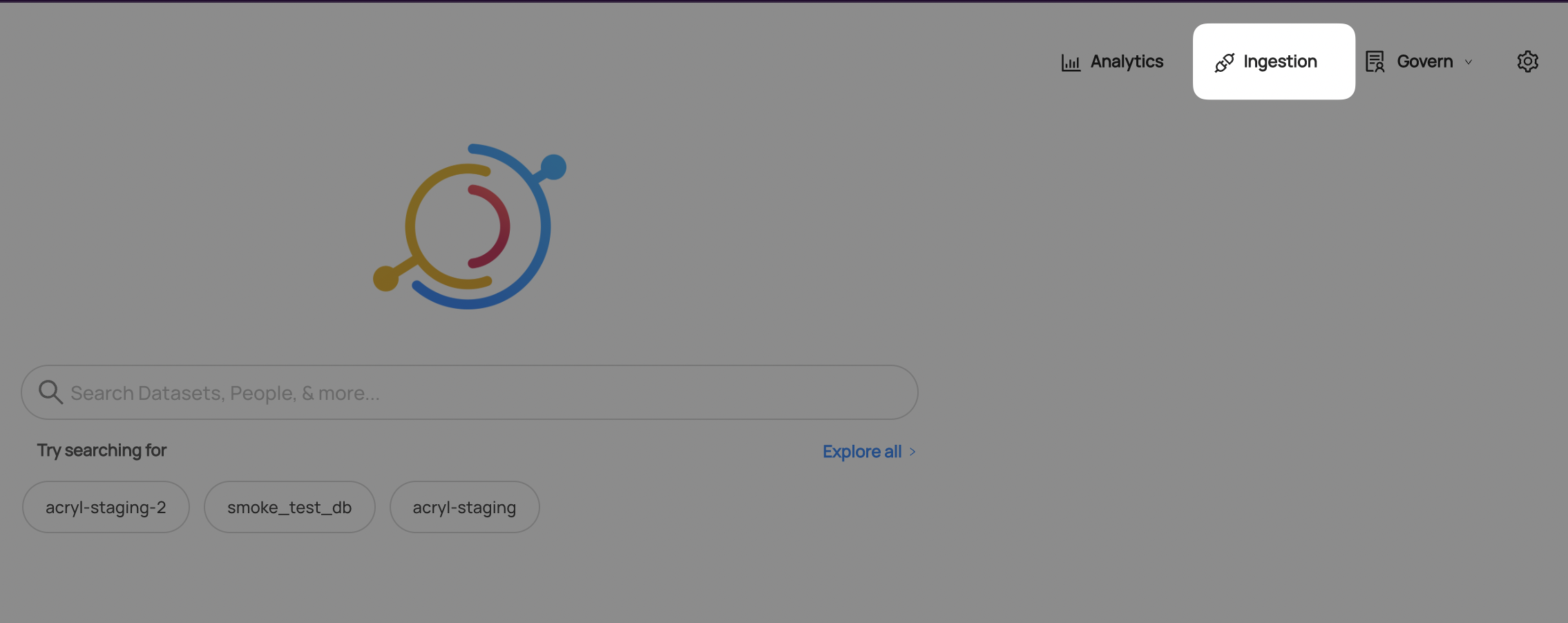

1. Within DataHub, navigate to the **Ingestion** tab in the top-right corner of your screen

:::note

If you do not see the Ingestion tab, contact your DataHub admin to grant you the correct permissions.

:::

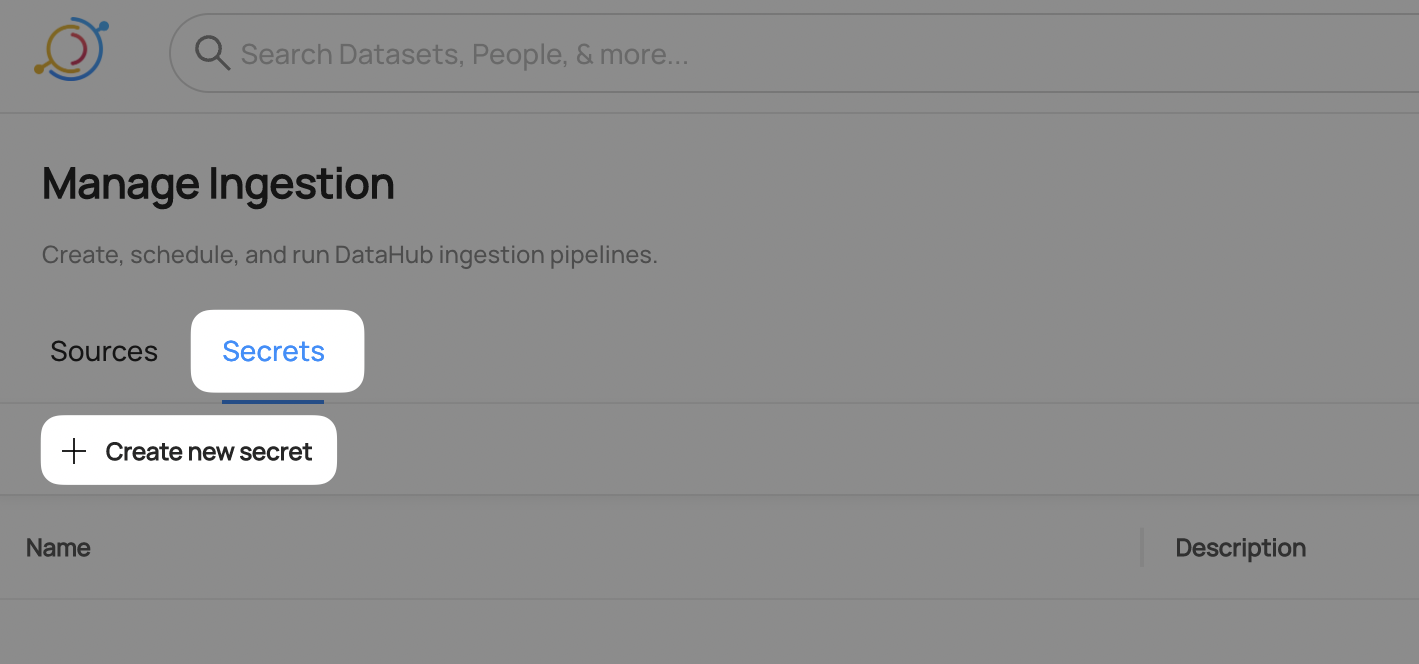

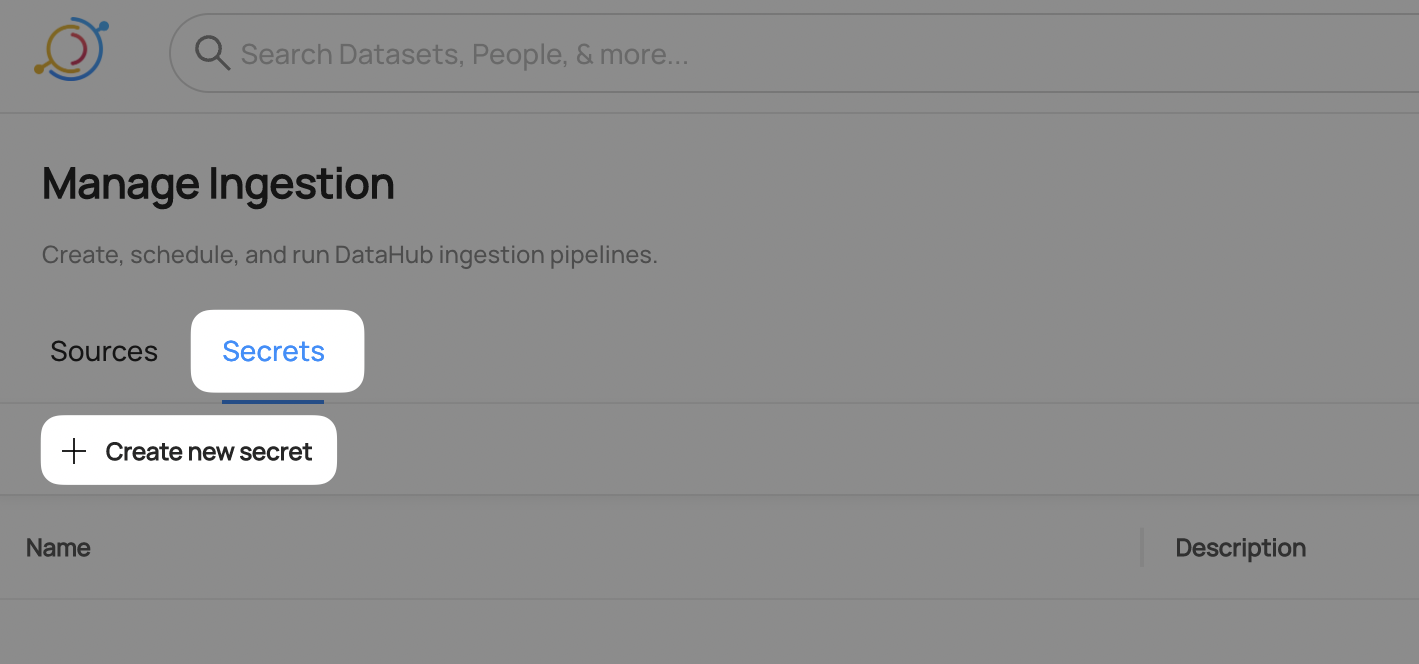

2. Navigate to the **Secrets** tab and click **Create new secret**

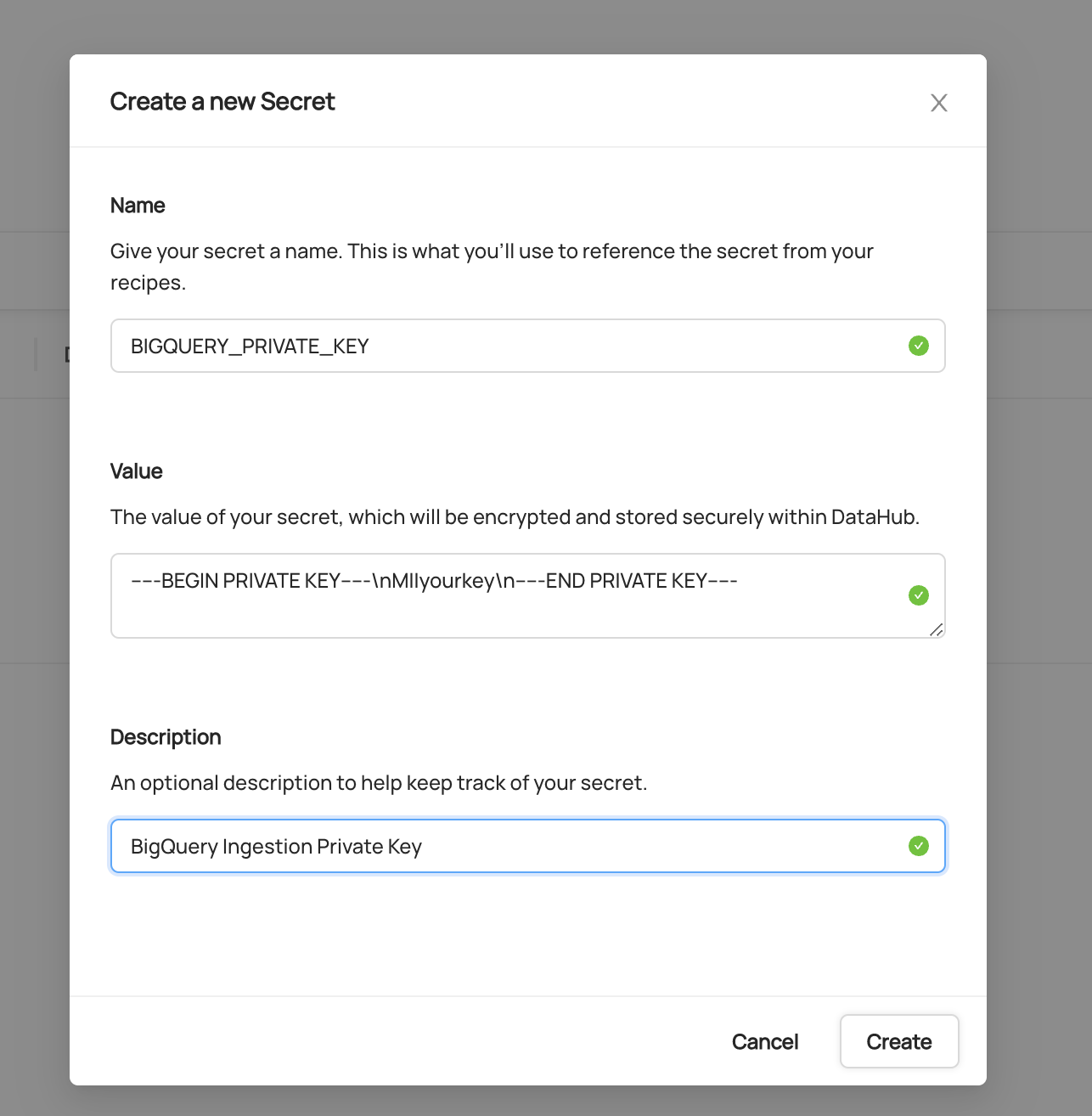

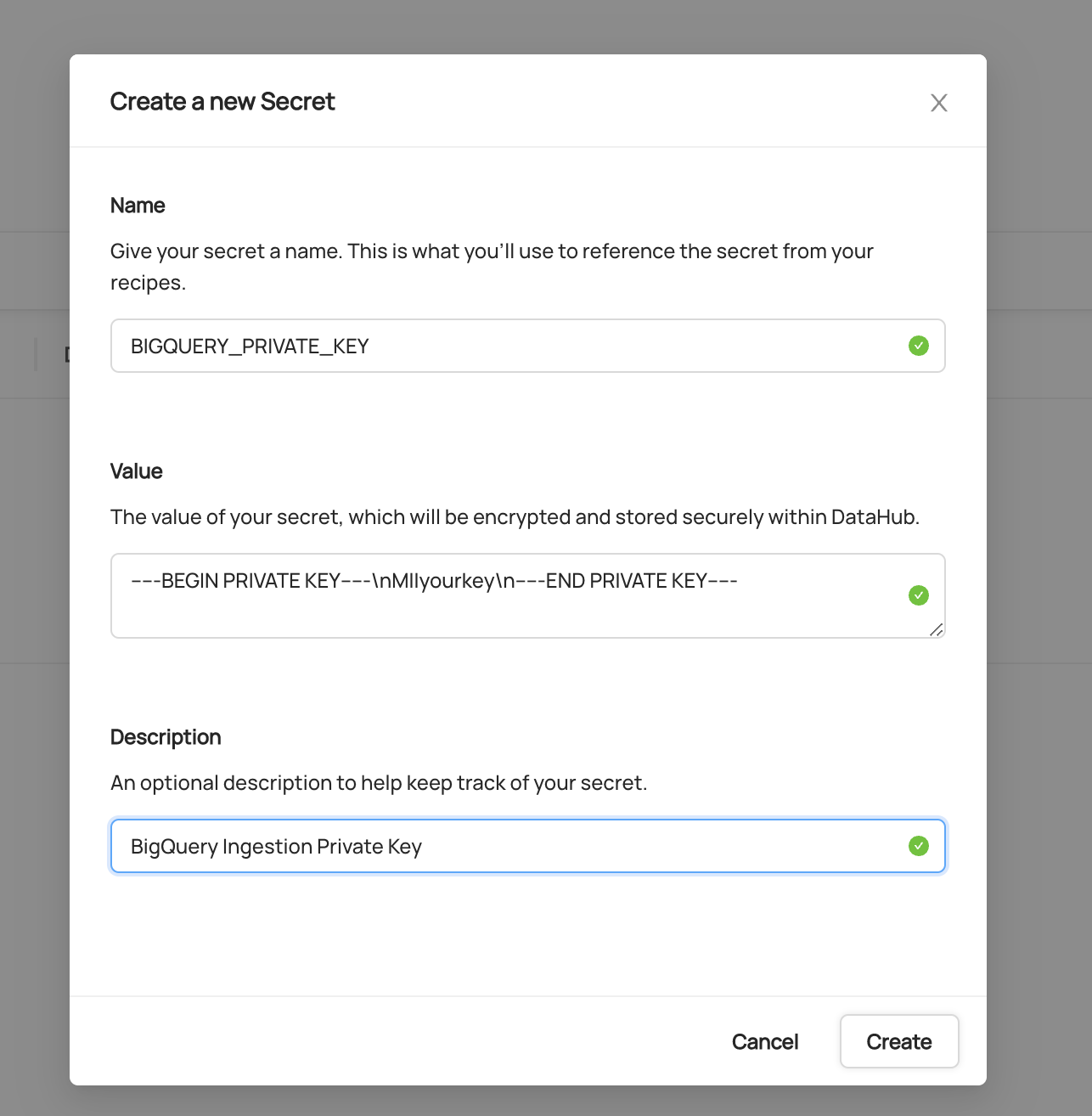

3. Create a Private Key secret

This will securely store your BigQuery Service Account Private Key within DataHub.

- Enter a name like `BIGQUERY_PRIVATE_KEY` - we will use this later to refer to the secret

- Copy and paste the `private_key` value from your Service Account Key

- Optionally add a description

- Click **Create**

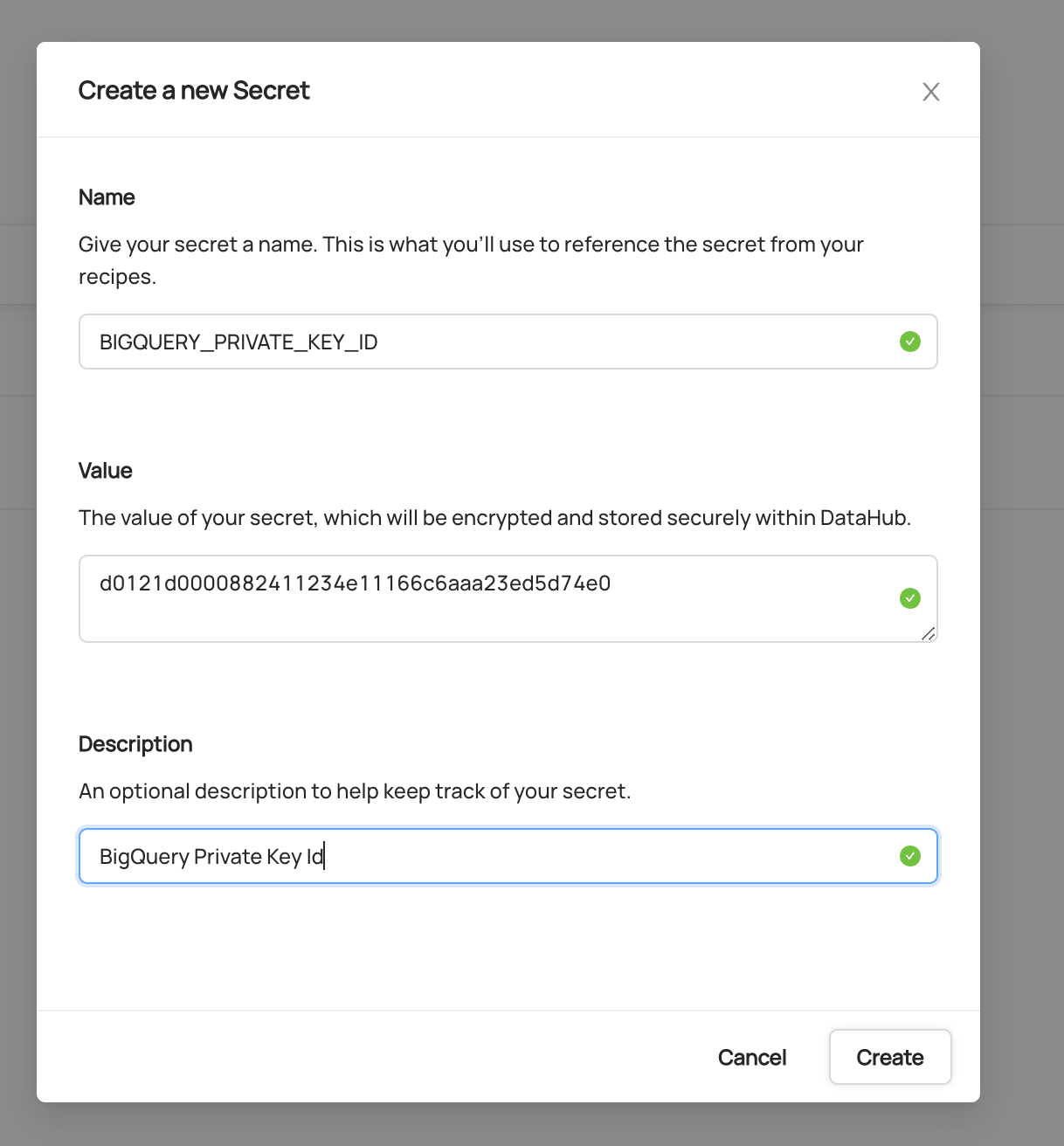

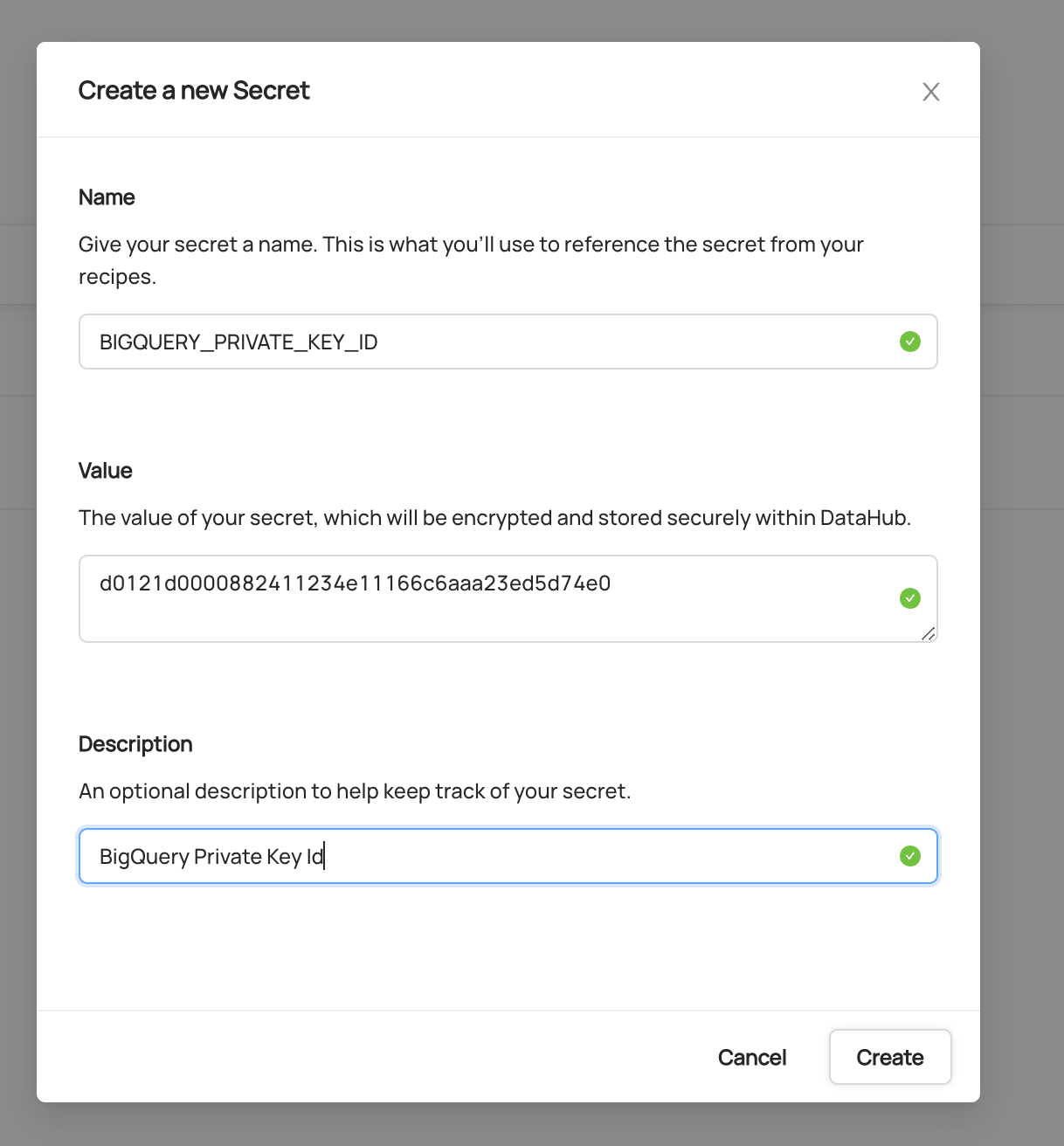

4. Create a Private Key ID secret

This will securely store your BigQuery Service Account Private Key ID within DataHub.

- Click **Create new secret** again

- Enter a name like `BIGQUERY_PRIVATE_KEY_ID` - we will use this later to refer to the secret

- Copy and paste the `private_key_id` value from your Service Account Key

- Optionally add a description

- Click **Create**
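If you prefer not to copy the two values out of the key file by hand, the sketch below shows one way to pull them out locally before pasting them into the secret forms in steps 3 and 4. It assumes the key was downloaded in Google's standard JSON format, which includes the `private_key` and `private_key_id` fields. The function name `read_secret_values` is hypothetical and is not part of DataHub.

```python
import json
from pathlib import Path


def read_secret_values(key_path):
    """Return the two values that steps 3 and 4 store as DataHub secrets.

    `key_path` points at the Service Account Key JSON file downloaded in the
    prior step. The dictionary keys below mirror the secret names suggested in
    this guide; they are a naming convention, not a DataHub requirement.
    """
    key = json.loads(Path(key_path).read_text(encoding="utf-8"))
    return {
        "BIGQUERY_PRIVATE_KEY": key["private_key"],
        "BIGQUERY_PRIVATE_KEY_ID": key["private_key_id"],
    }
```

Calling `read_secret_values` with the path to your own key file returns both values in one dictionary. Avoid printing or logging the private key; treat it like a password.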

## Configure Recipe

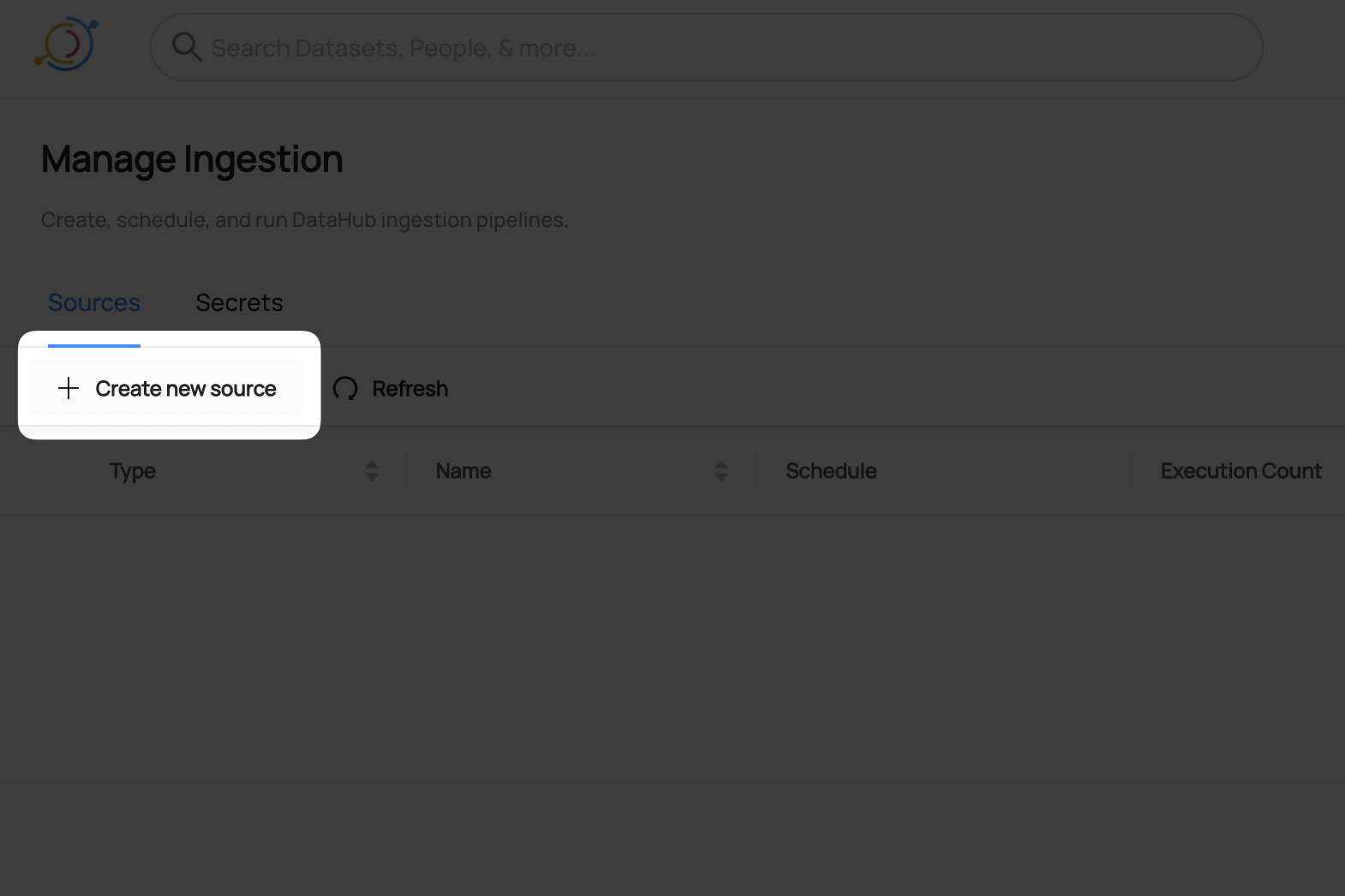

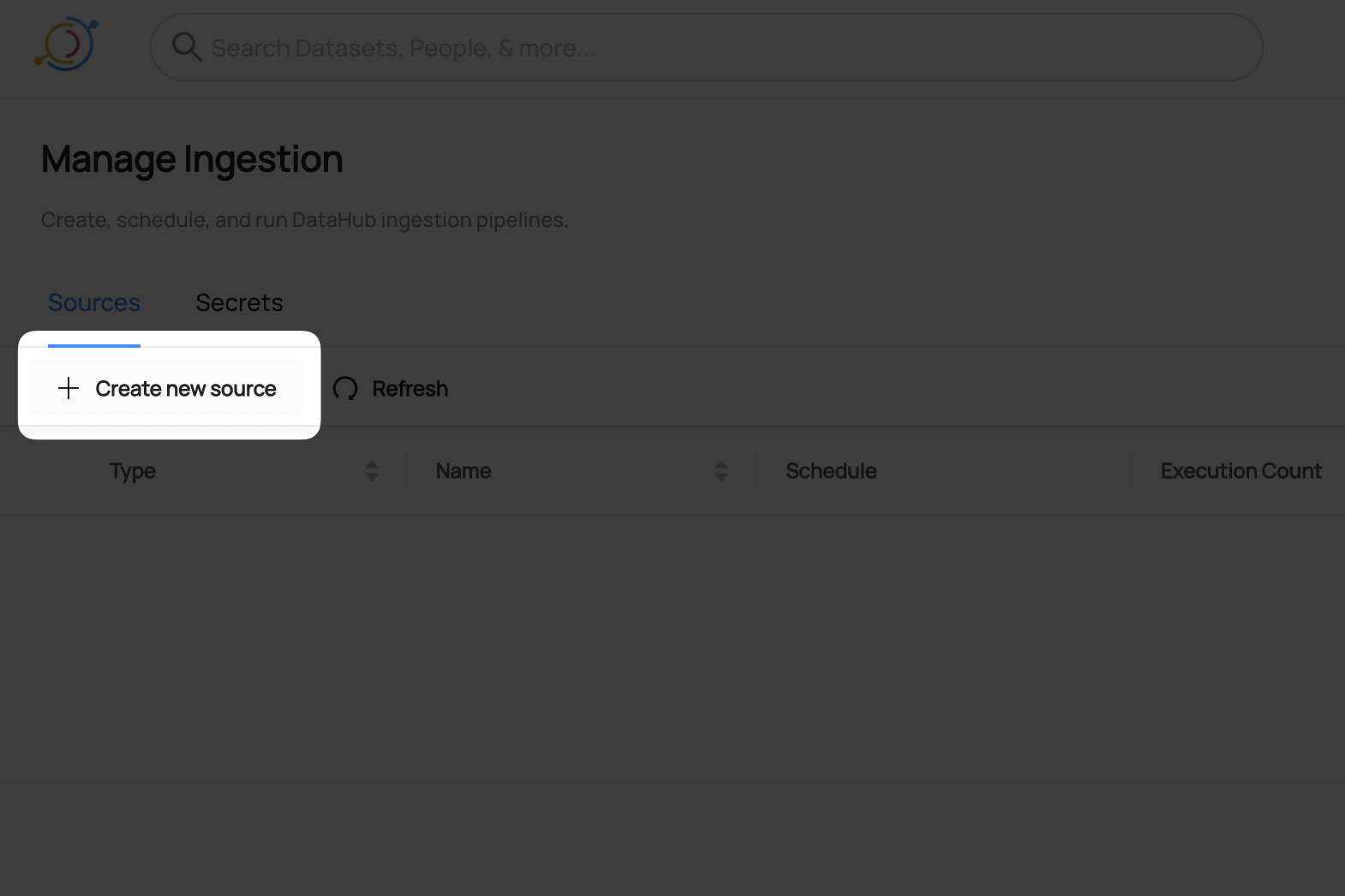

5. Navigate to the **Sources** tab and click **Create new source**

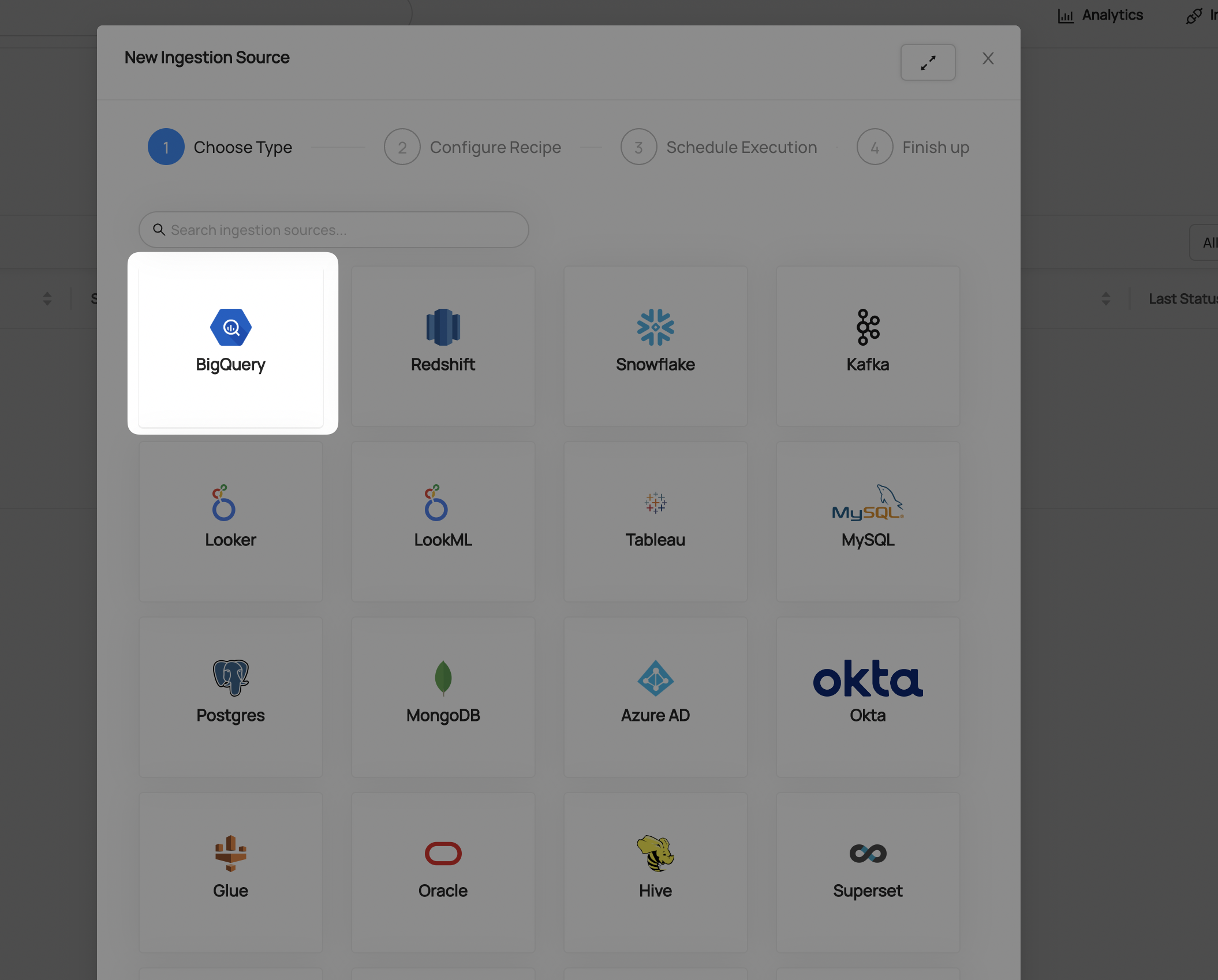

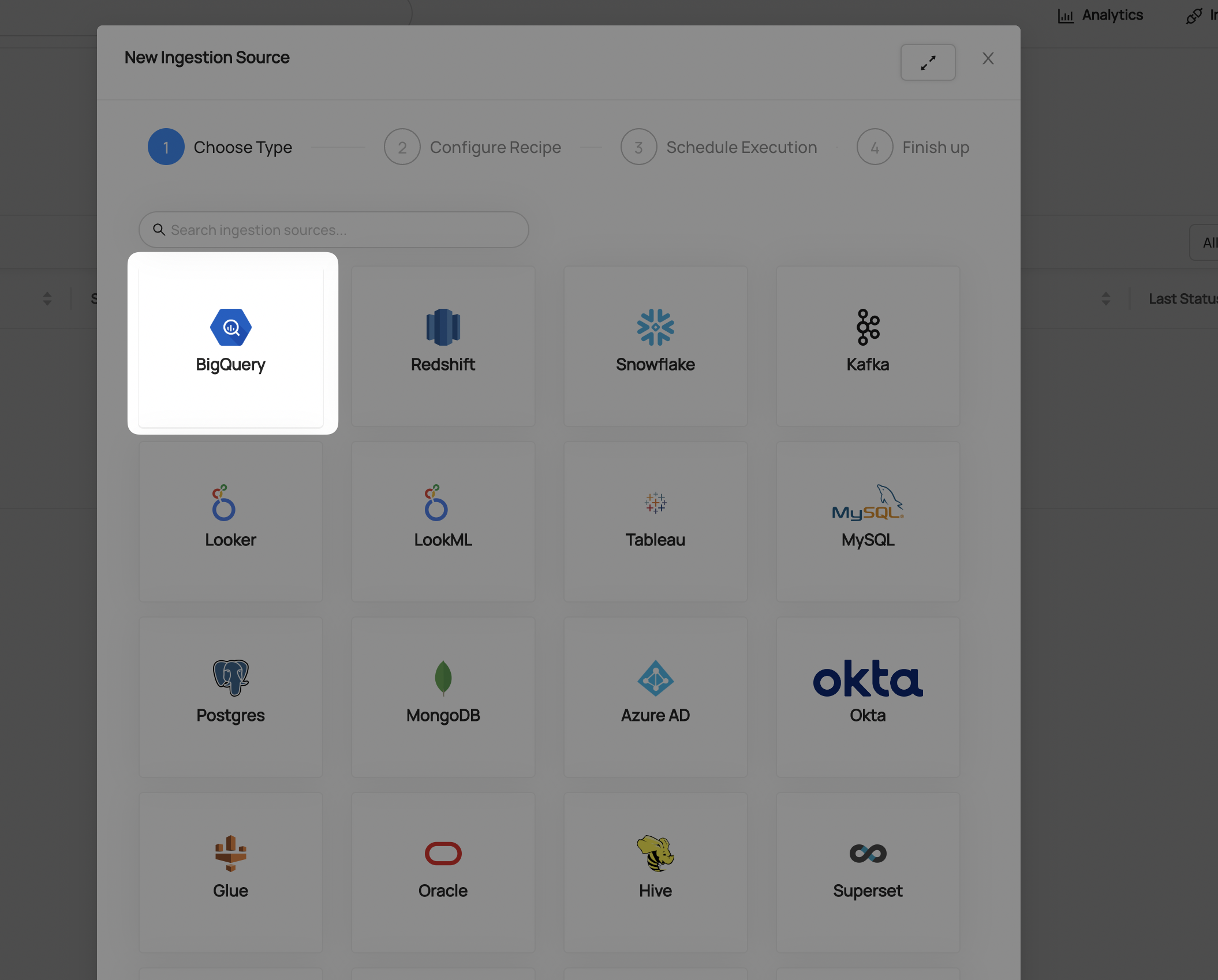

6. Select BigQuery

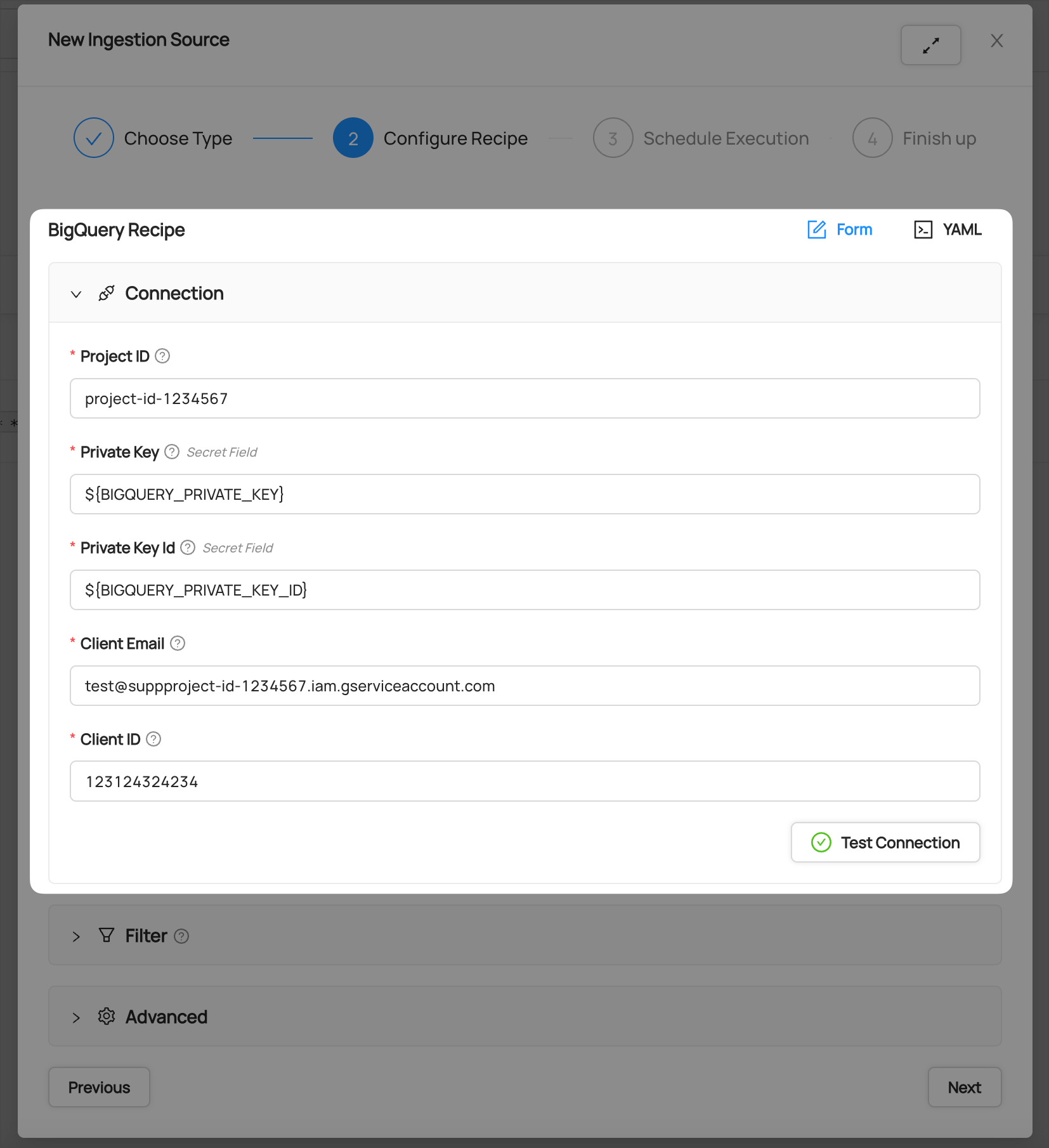

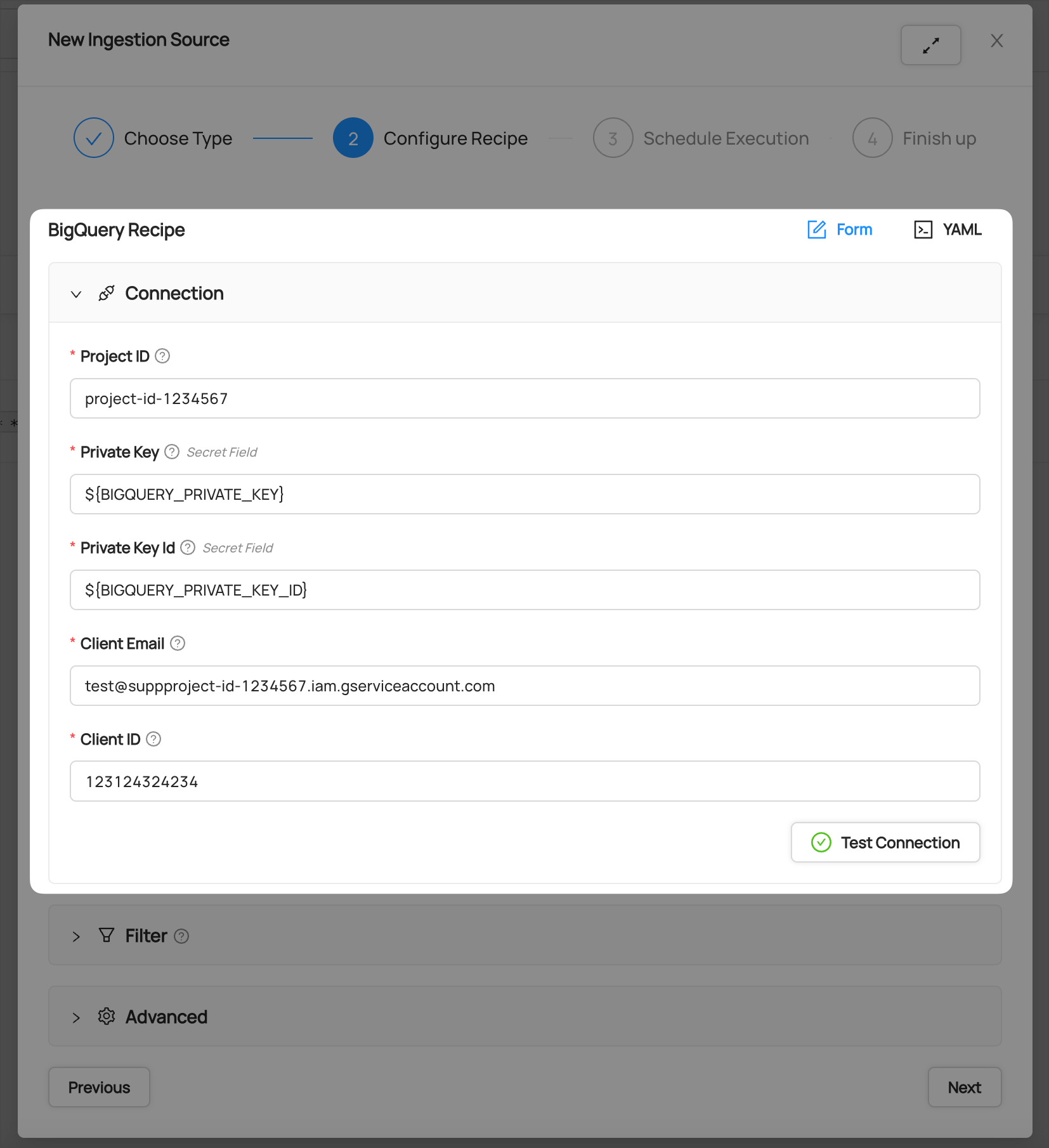

7. Fill out the BigQuery Recipe

You can find the following details in your Service Account Key file:

- Project ID

- Client Email

- Client ID

Populate the Secret Fields by selecting the Private Key and Private Key ID secrets you created in steps 3 and 4.
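To make the mapping between the form and the key file concrete, the sketch below assembles a recipe-shaped dictionary from a parsed Service Account Key. It is illustrative only: the nesting under `source.config.credential` and the `${SECRET_NAME}` reference syntax are assumptions about how the form is represented as a recipe, so check them against the BigQuery source documentation for your DataHub version. The function `build_recipe` and the sample values are hypothetical.

```python
import json


def build_recipe(key,
                 private_key_secret="BIGQUERY_PRIVATE_KEY",
                 private_key_id_secret="BIGQUERY_PRIVATE_KEY_ID"):
    """Build a recipe-like dict from a parsed Service Account Key.

    `key` is the key file already loaded as a dict. The plain fields are
    copied as-is; the two sensitive fields are replaced by references to the
    secrets created in steps 3 and 4 (assumed `${NAME}` syntax).
    """
    return {
        "source": {
            "type": "bigquery",
            "config": {
                "credential": {
                    "project_id": key["project_id"],
                    "client_email": key["client_email"],
                    "client_id": key["client_id"],
                    "private_key": "${" + private_key_secret + "}",
                    "private_key_id": "${" + private_key_id_secret + "}",
                }
            },
        }
    }


# Placeholder values for illustration only; use the fields from your own key file.
sample_key = {
    "project_id": "my-project",
    "client_email": "datahub@my-project.iam.gserviceaccount.com",
    "client_id": "000000000000",
}
print(json.dumps(build_recipe(sample_key), indent=2))
```

Keeping the private key and its ID as secret references means the recipe itself never contains the sensitive values.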

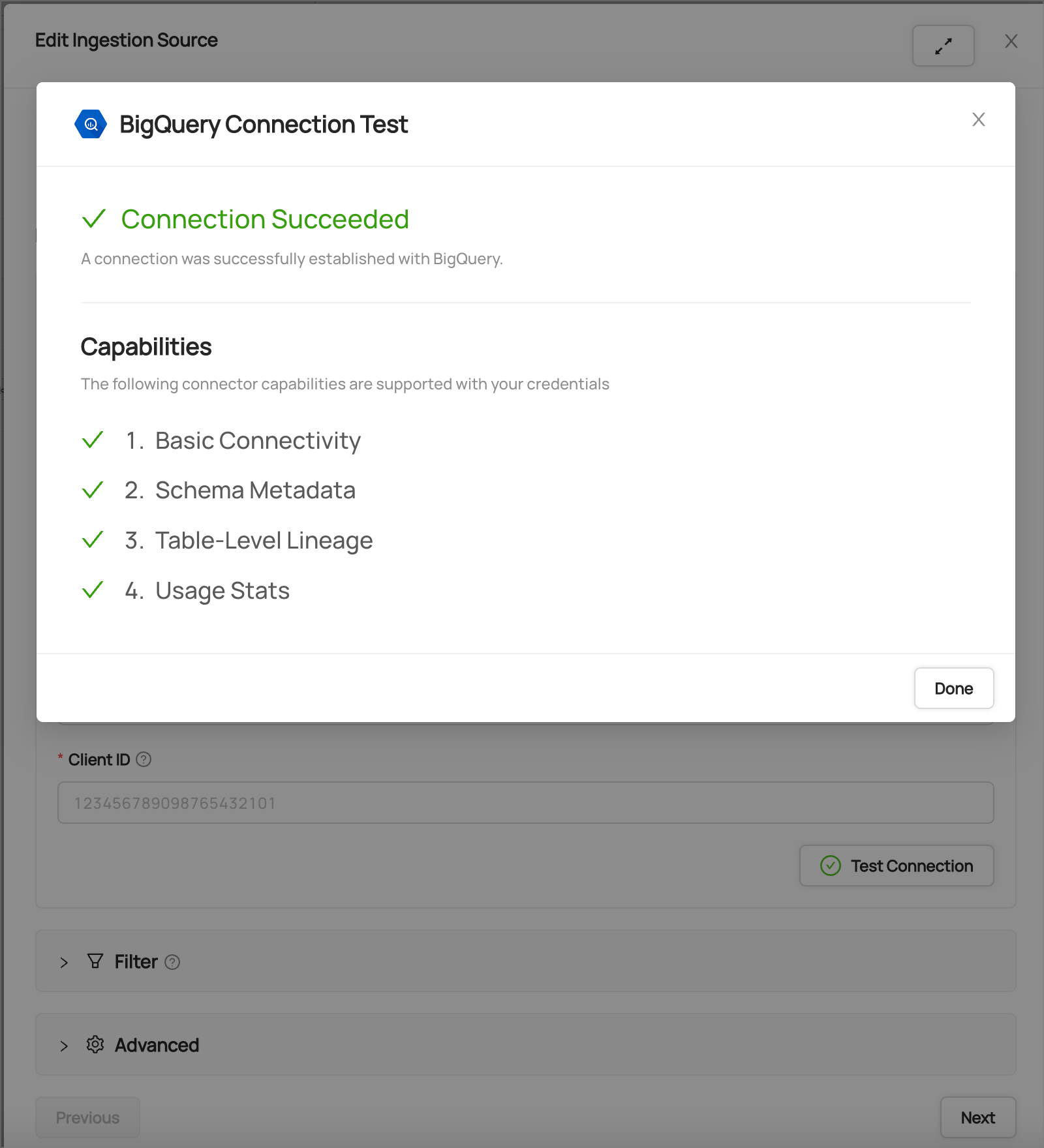

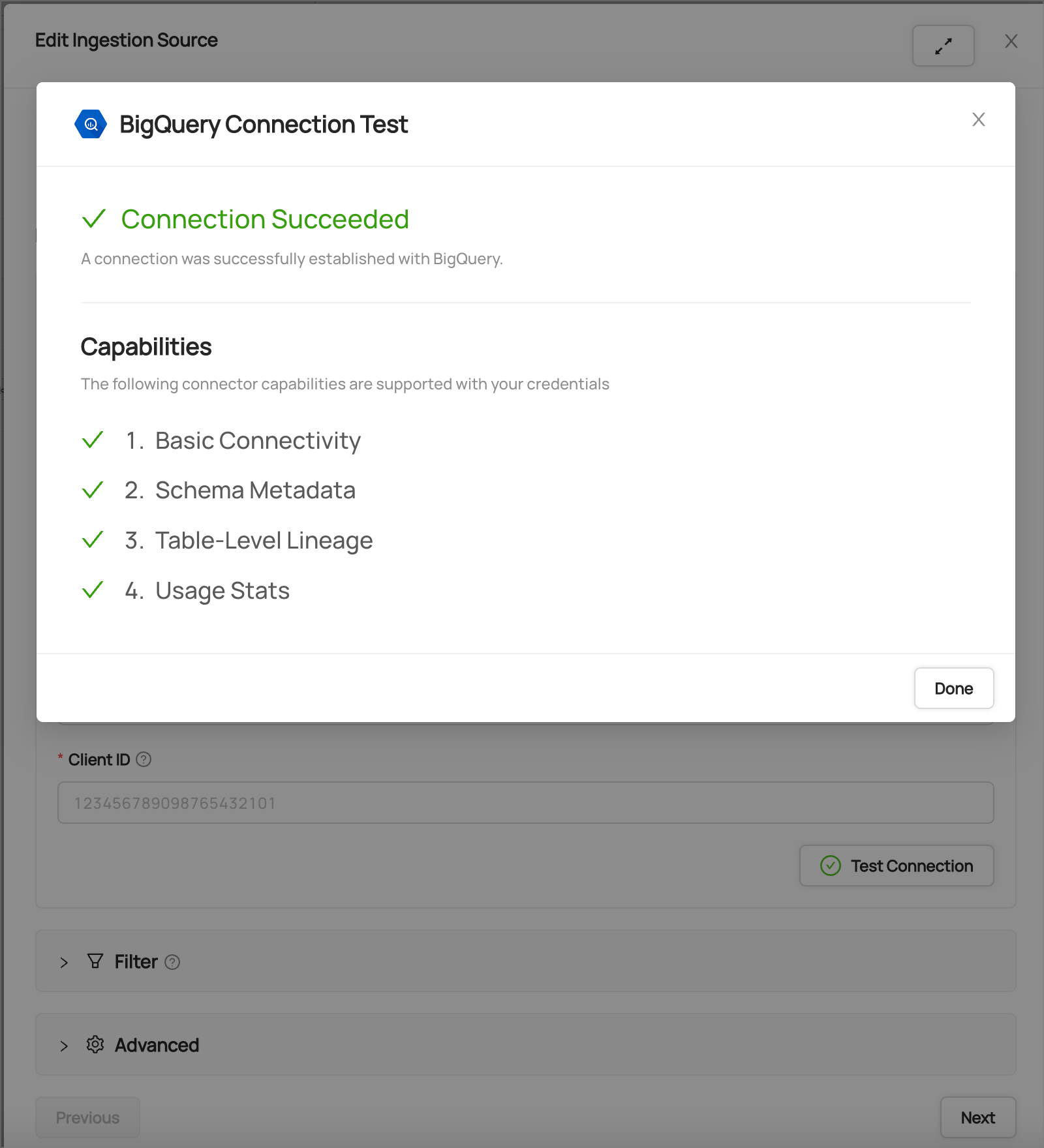

8. Click **Test Connection**

This step verifies that your credentials are configured correctly and that you have the permissions required to extract all relevant metadata.

After you have successfully tested your connection, click **Next**.

## Schedule Execution

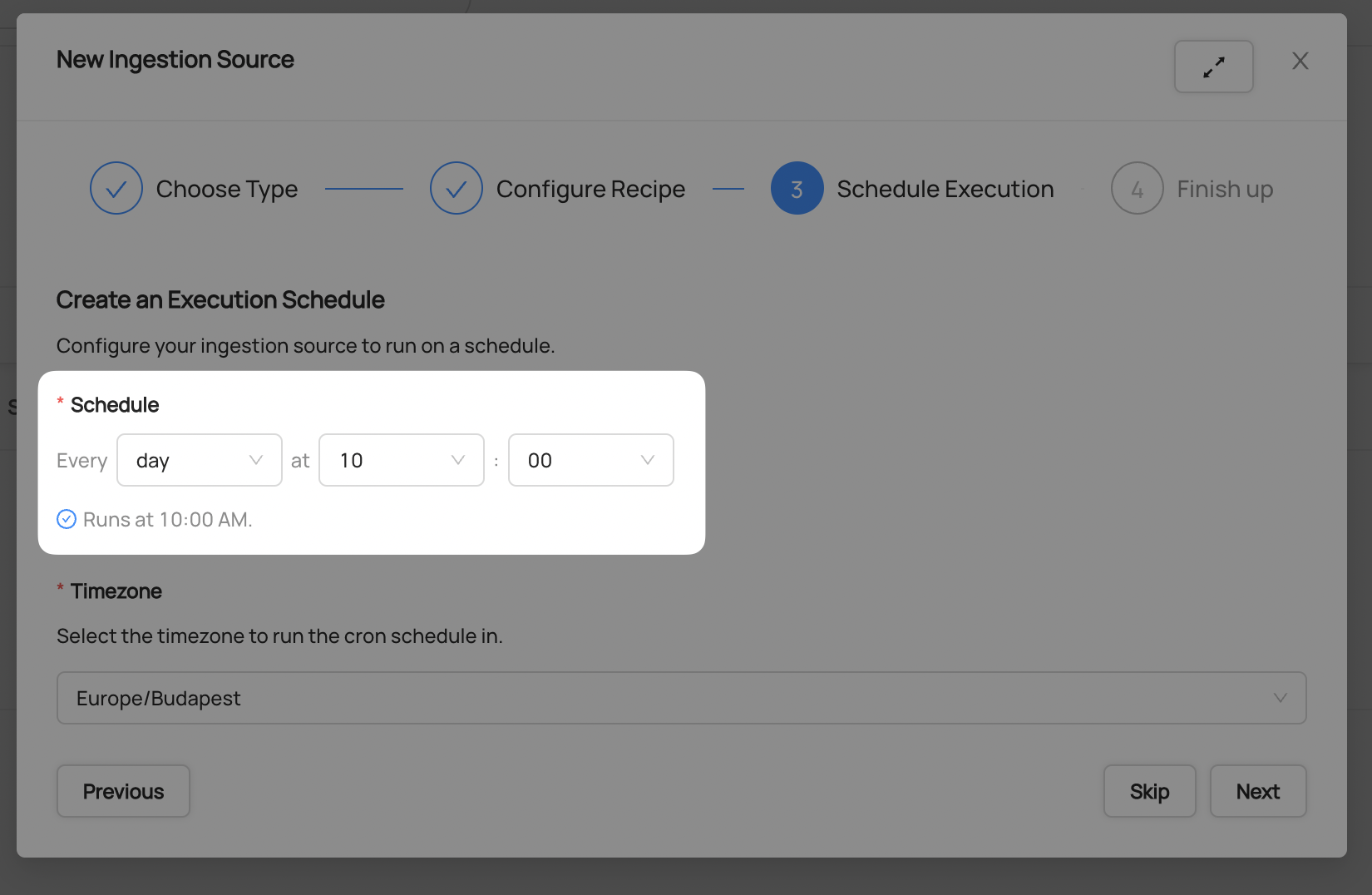

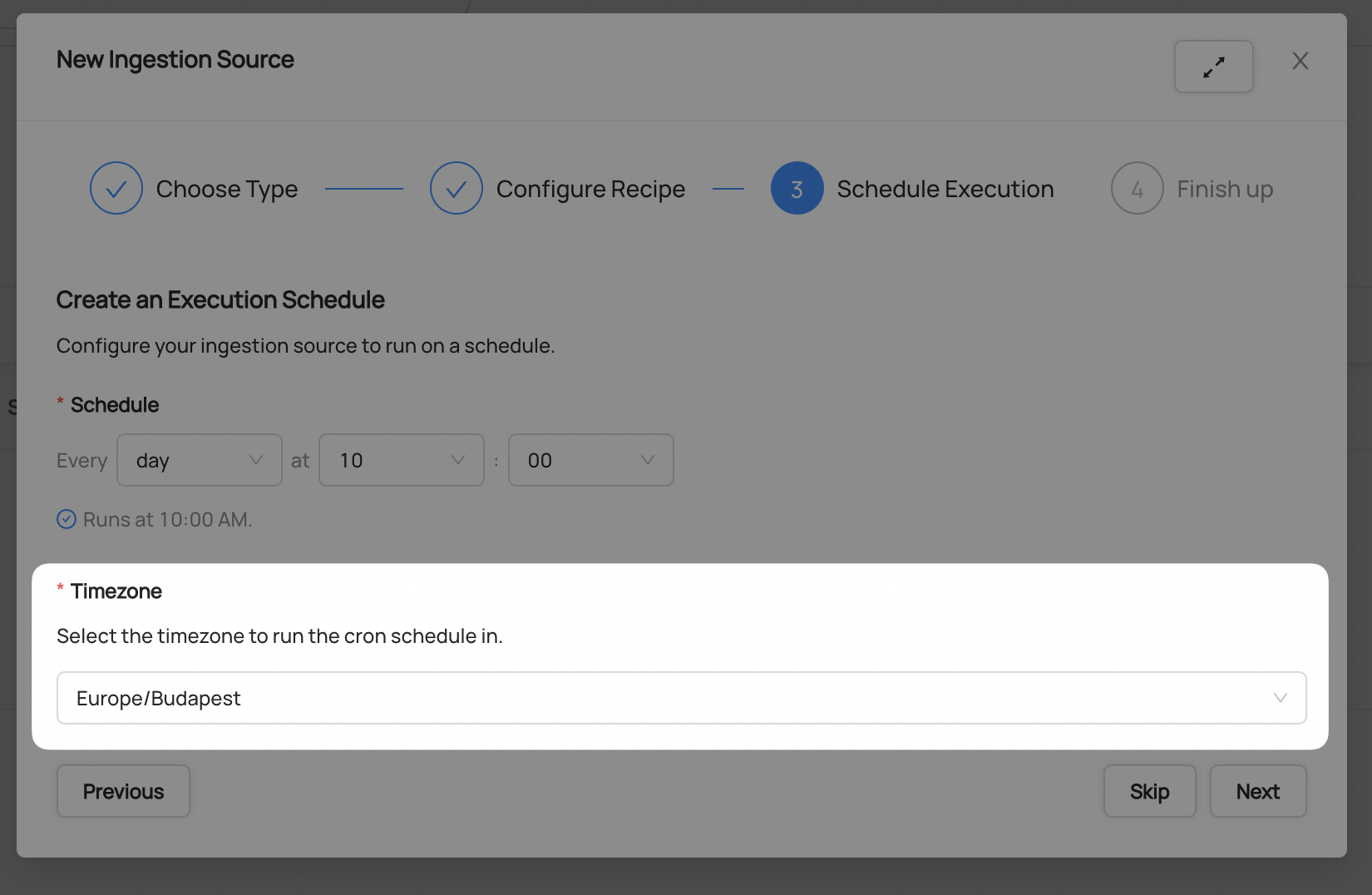

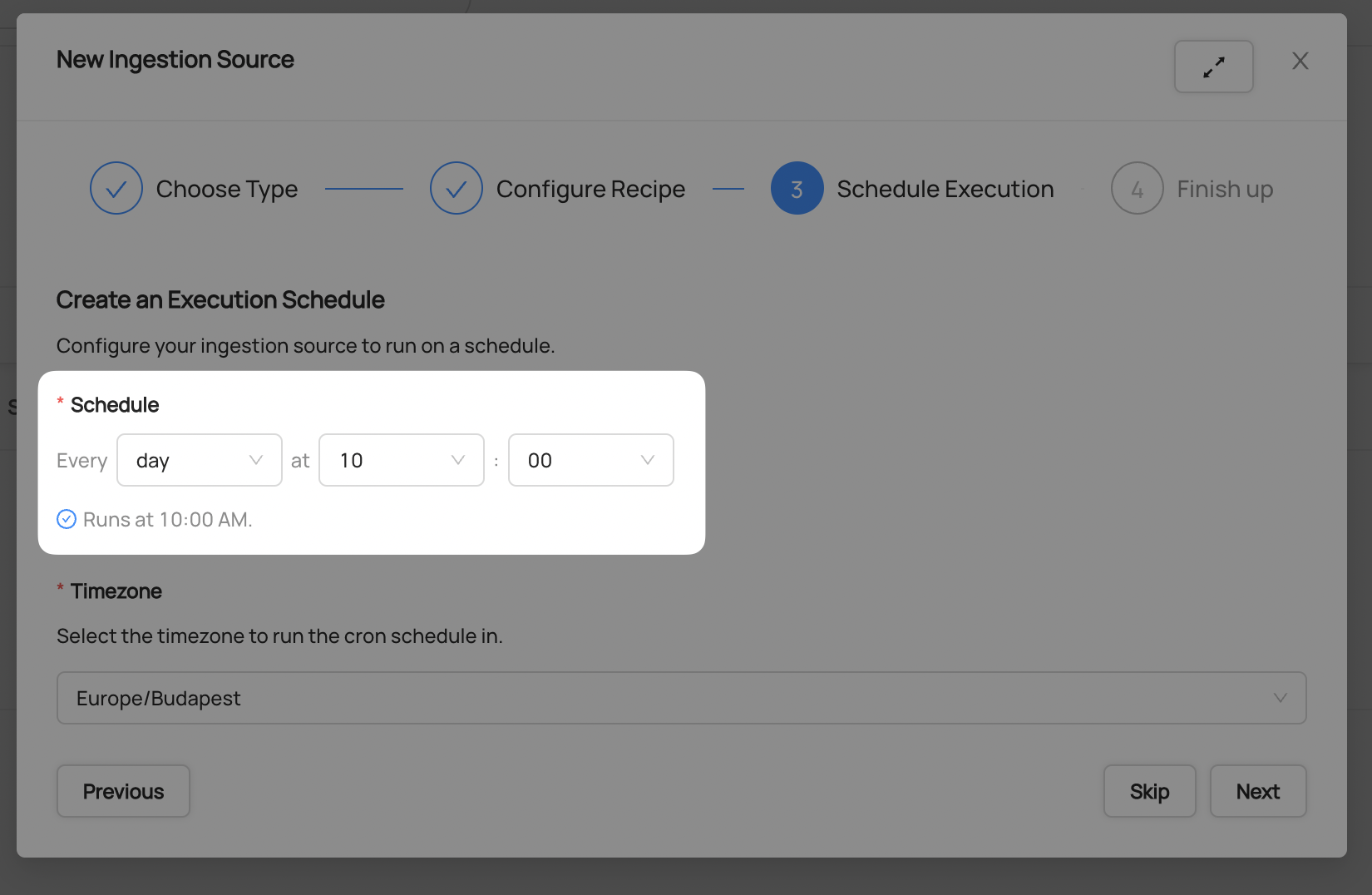

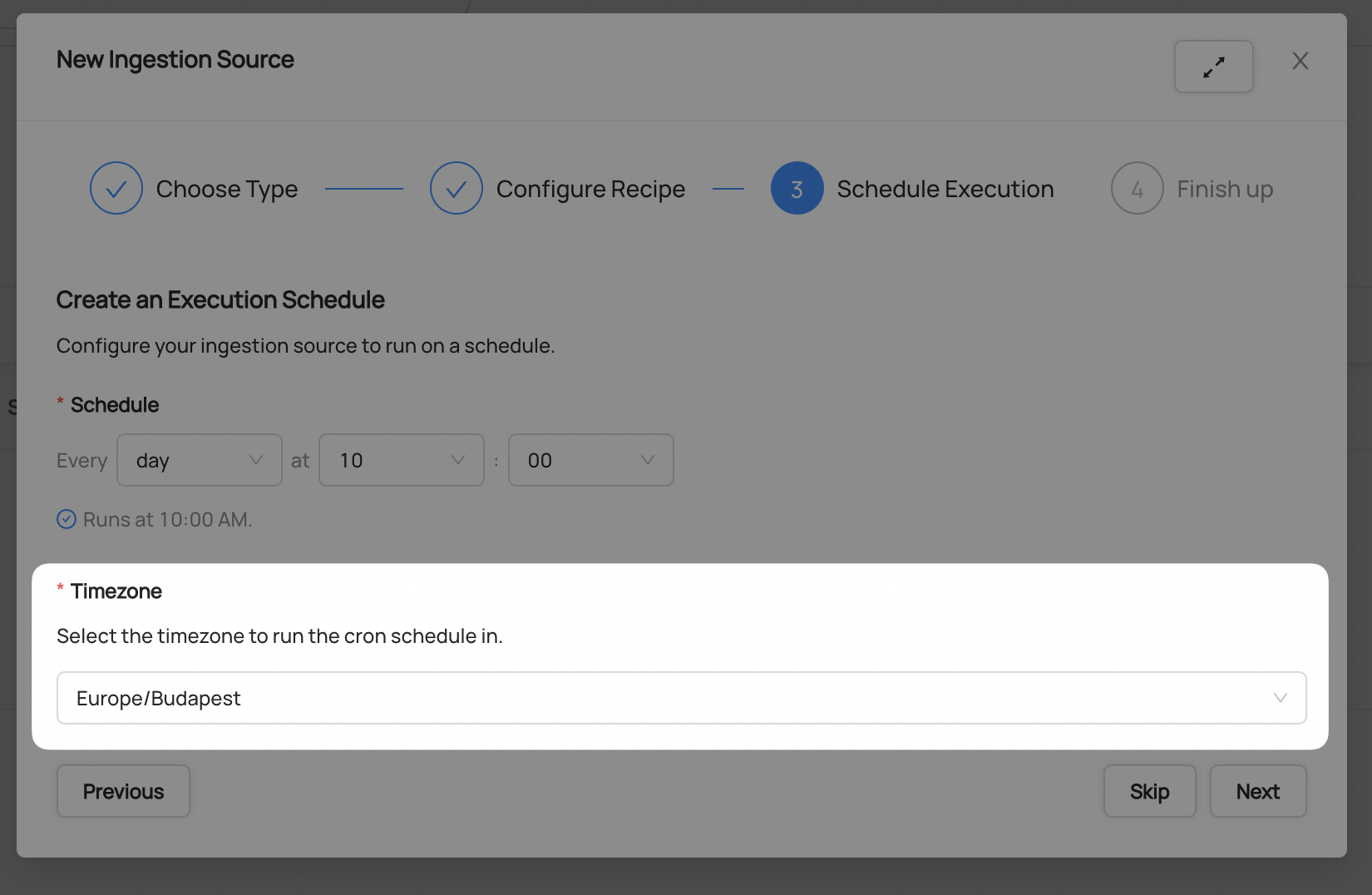

Now it's time to schedule a recurring ingestion pipeline to regularly extract metadata from your BigQuery instance.

9. Decide how often you want this ingestion to run (for example, every minute, hour, day, month, or year) and select that interval from the dropdown

10. Ensure you've selected the correct timezone

11. Click **Next** when you are done
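To see why the timezone in step 10 matters, the sketch below computes when a daily run at a given local time would fire, expressed in UTC. It is a standalone illustration using Python's standard library and says nothing about how DataHub schedules runs internally; the function name `next_daily_run` is hypothetical.

```python
from datetime import datetime, time, timedelta, timezone
from zoneinfo import ZoneInfo


def next_daily_run(now_utc, hour, minute, tz_name):
    """Return the next occurrence of hour:minute in `tz_name`, as a UTC datetime.

    `now_utc` must be a timezone-aware datetime; `tz_name` is an IANA
    timezone name such as "America/New_York".
    """
    tz = ZoneInfo(tz_name)
    local_now = now_utc.astimezone(tz)
    candidate = datetime.combine(local_now.date(), time(hour, minute), tzinfo=tz)
    if candidate <= local_now:
        # Today's slot has already passed in that timezone, so use tomorrow's.
        next_day = local_now.date() + timedelta(days=1)
        candidate = datetime.combine(next_day, time(hour, minute), tzinfo=tz)
    return candidate.astimezone(timezone.utc)


now = datetime.now(timezone.utc)
for name in ("UTC", "America/New_York"):
    print(name, next_daily_run(now, 0, 0, name).isoformat())
```

The same "midnight" schedule lands at different UTC instants depending on the timezone chosen, which is worth keeping in mind if the ingestion should run before or after other jobs.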

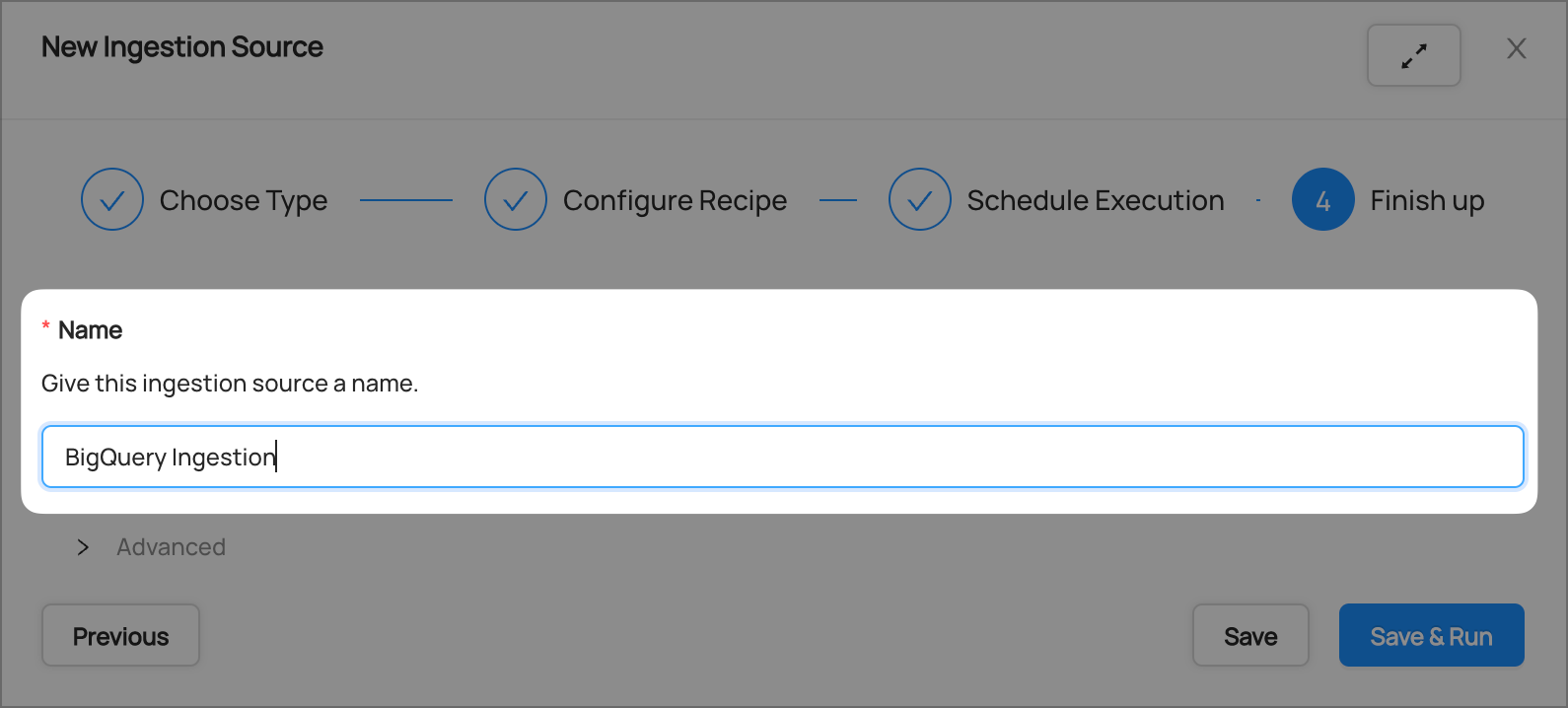

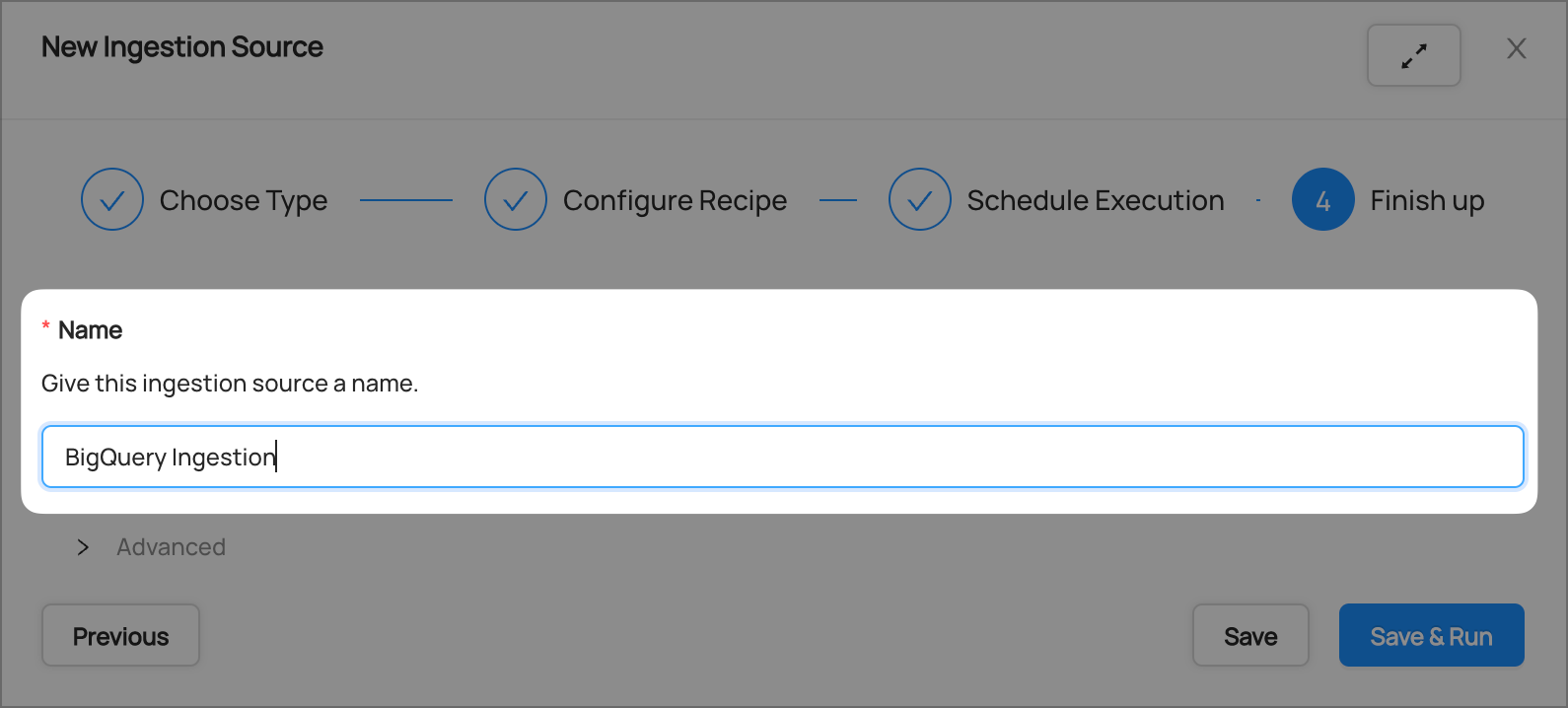

## Finish Up

12. Name your ingestion source, then click **Save and Run**

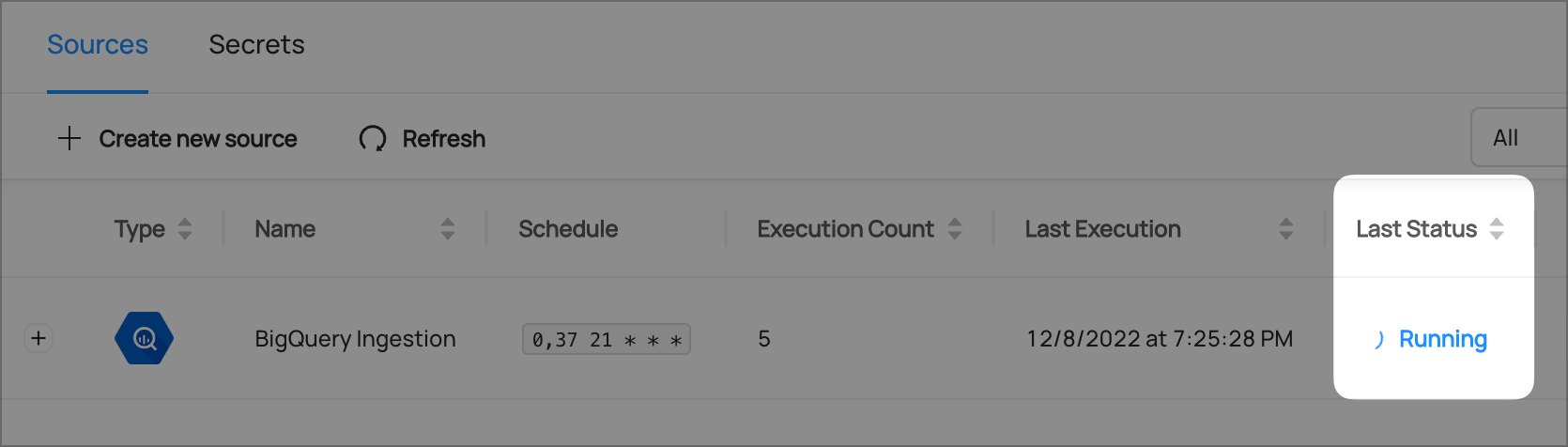

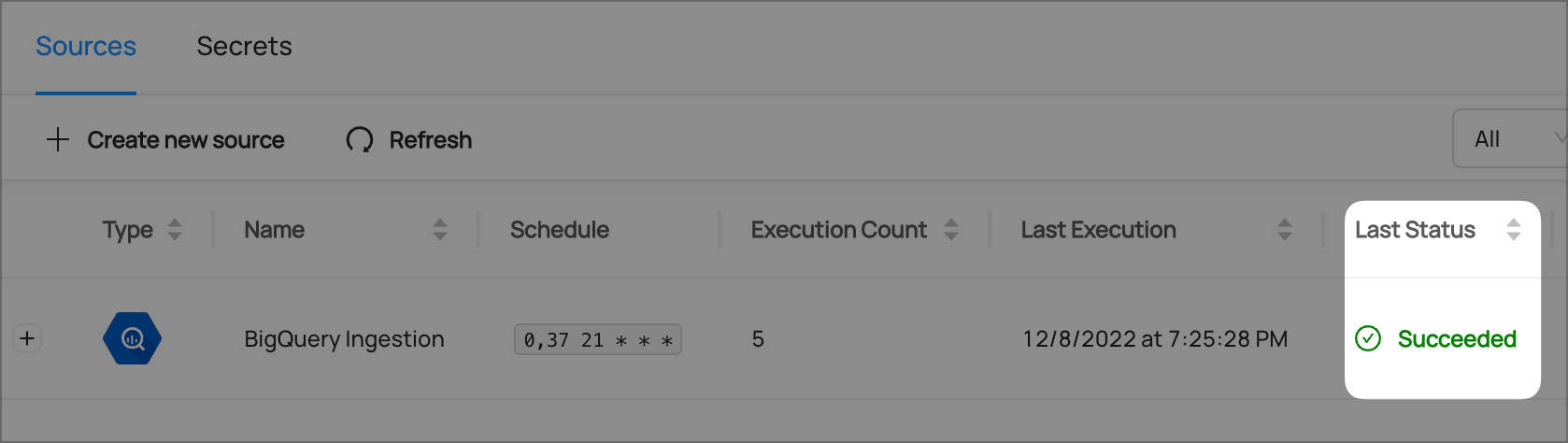

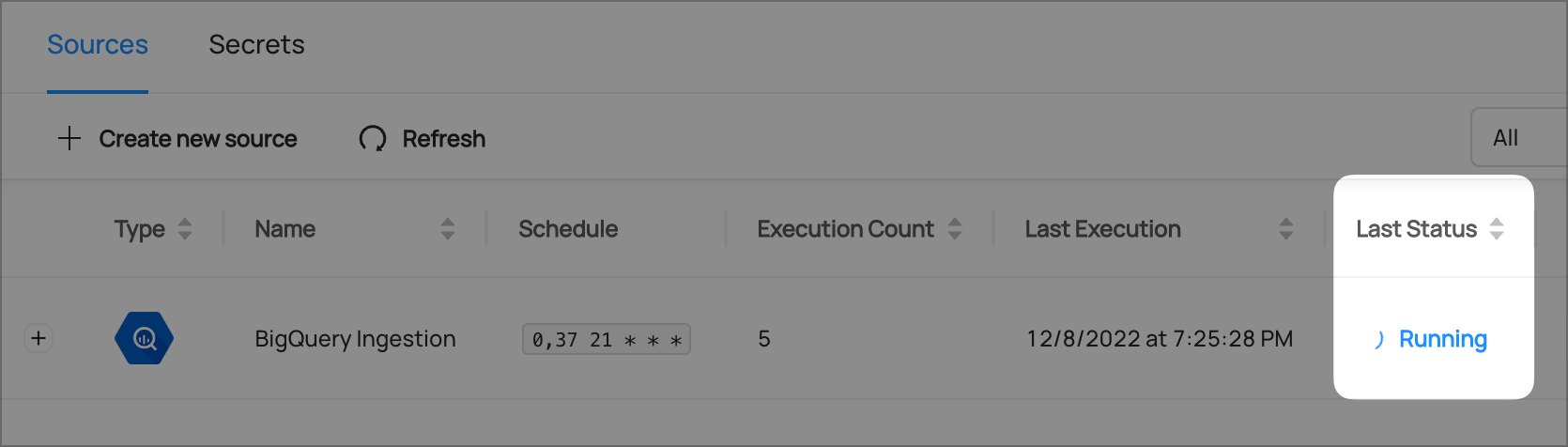

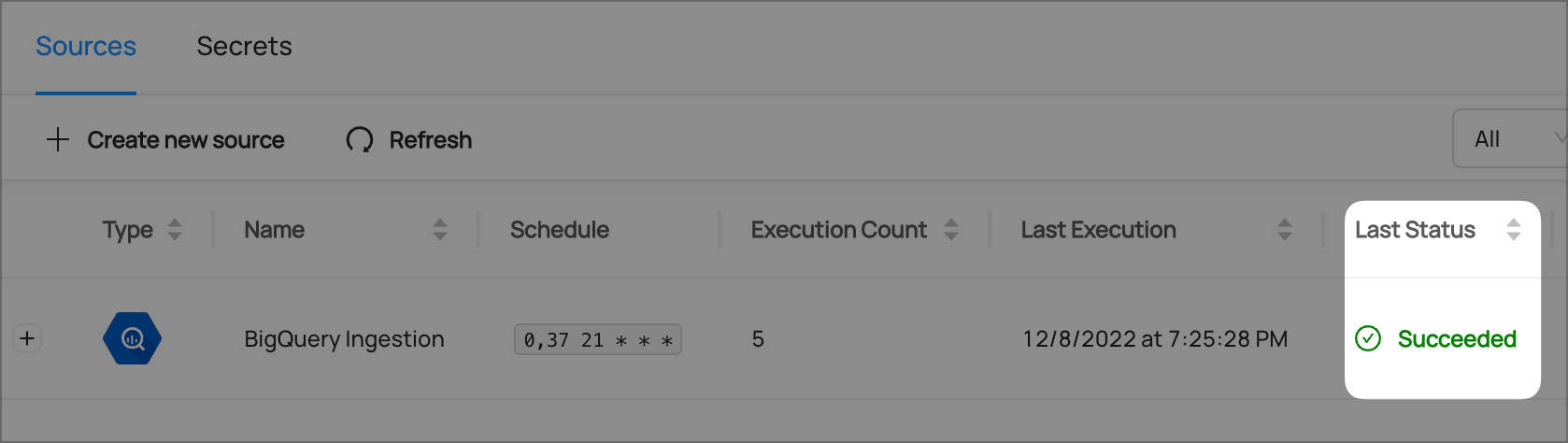

You will now find your new ingestion source running.

## Validate Ingestion Runs

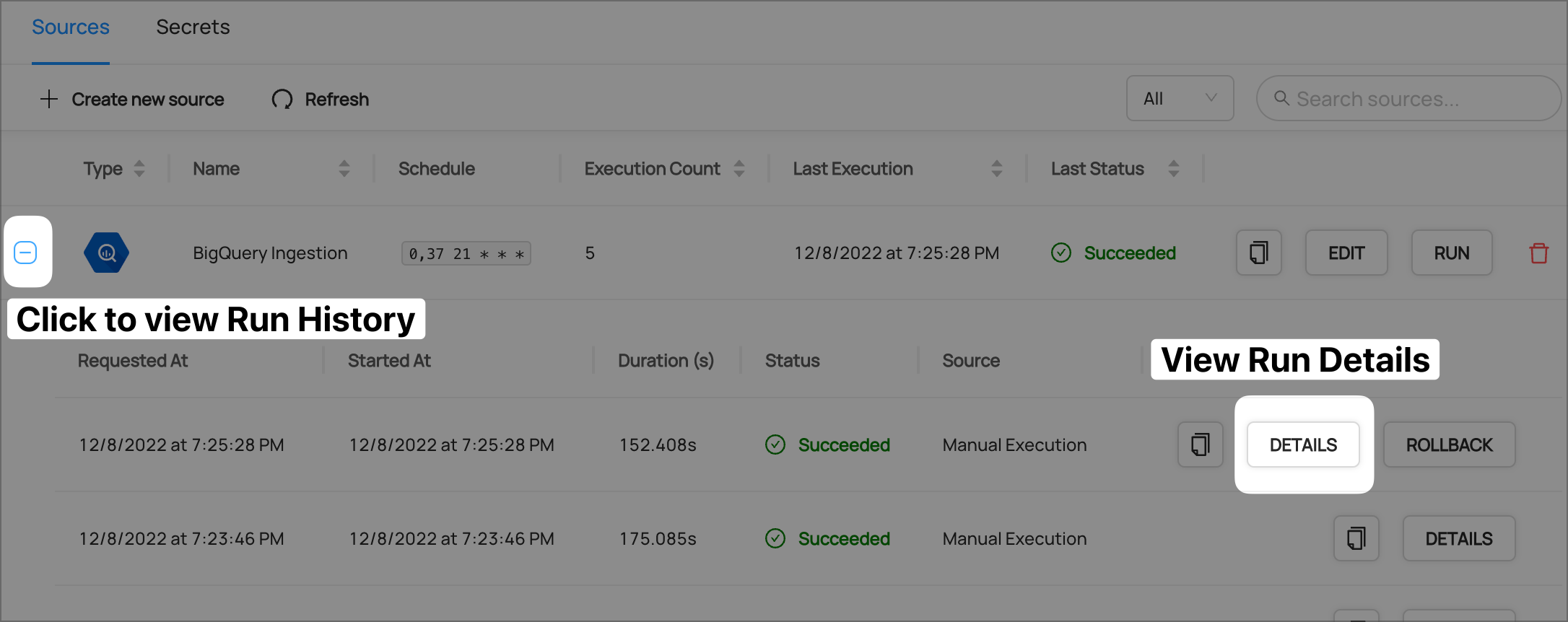

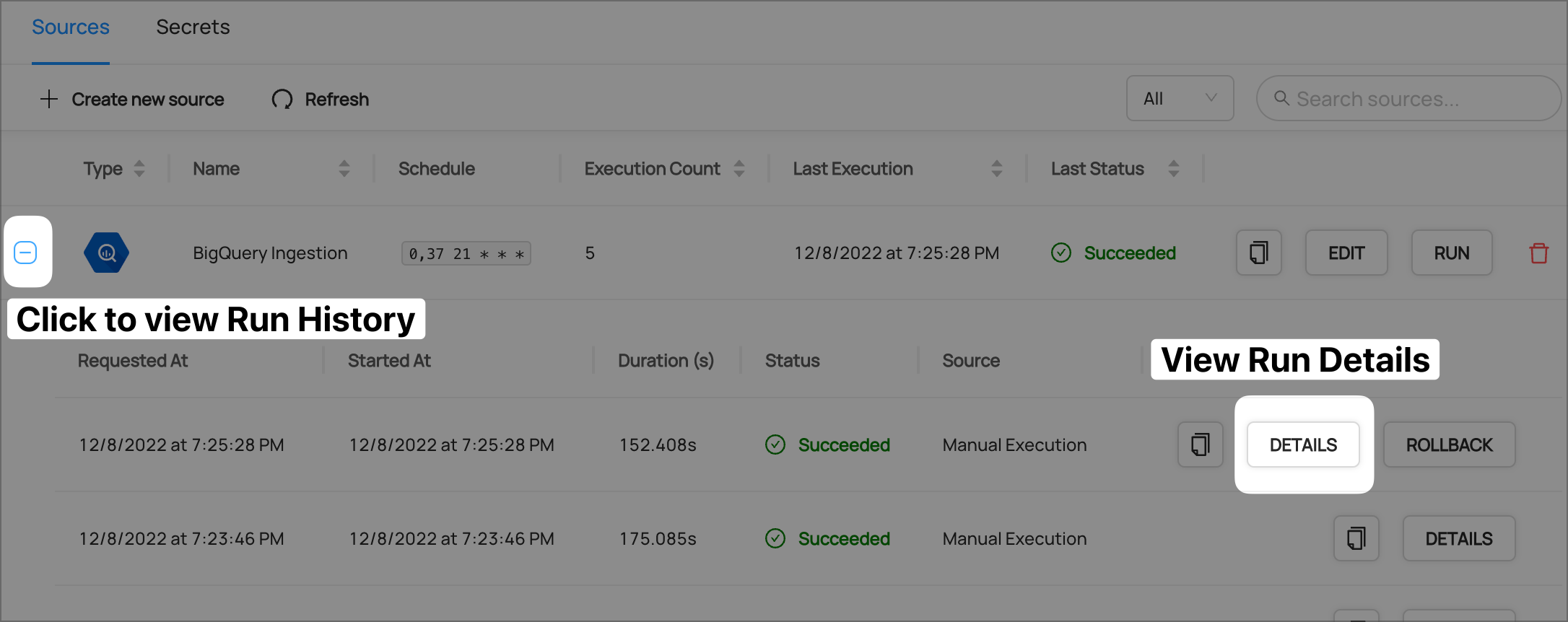

13. View the latest status of ingestion runs on the Ingestion page

14. Click the plus sign to expand the full list of historical runs and outcomes; click **Details** to see the outcomes of a specific run

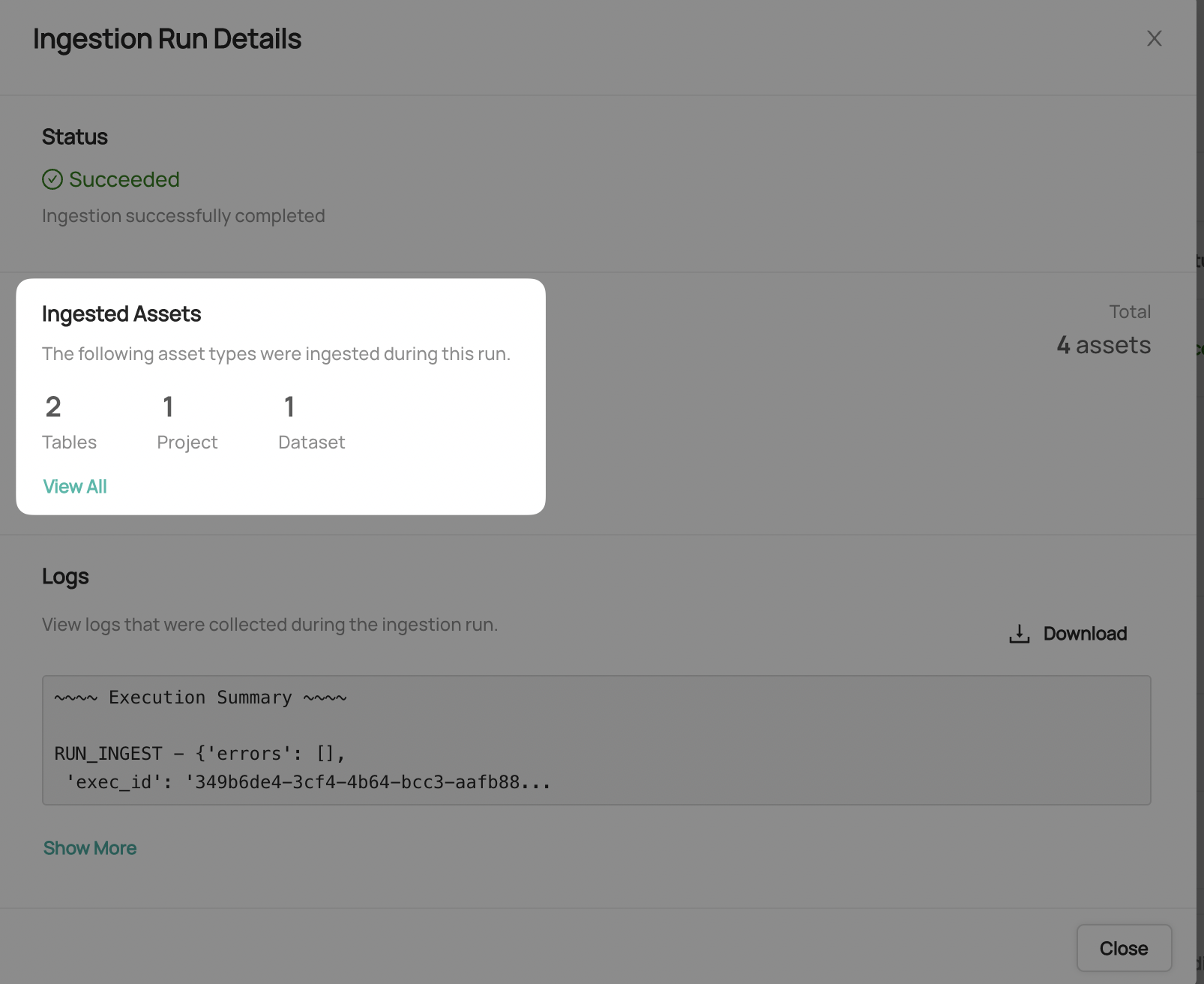

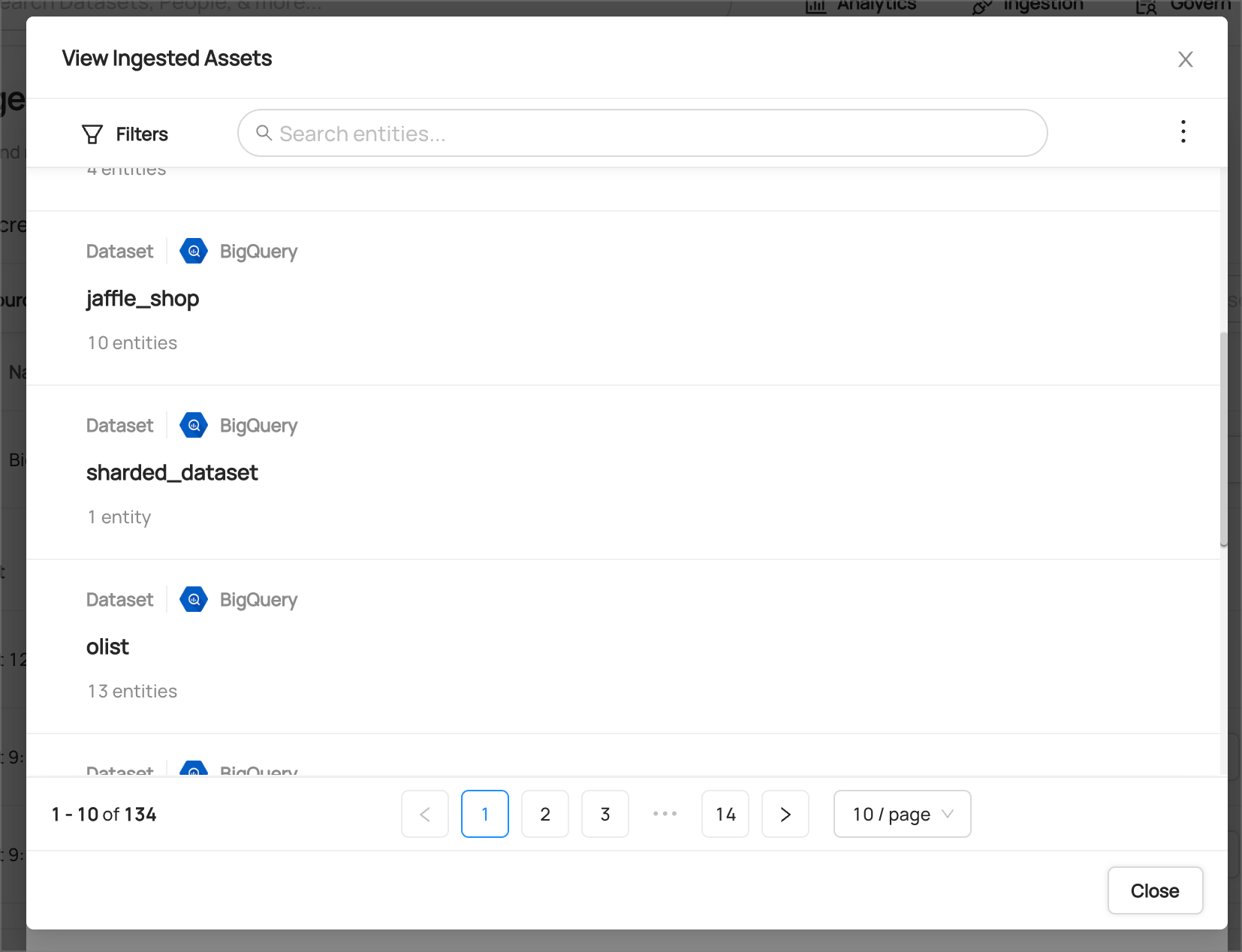

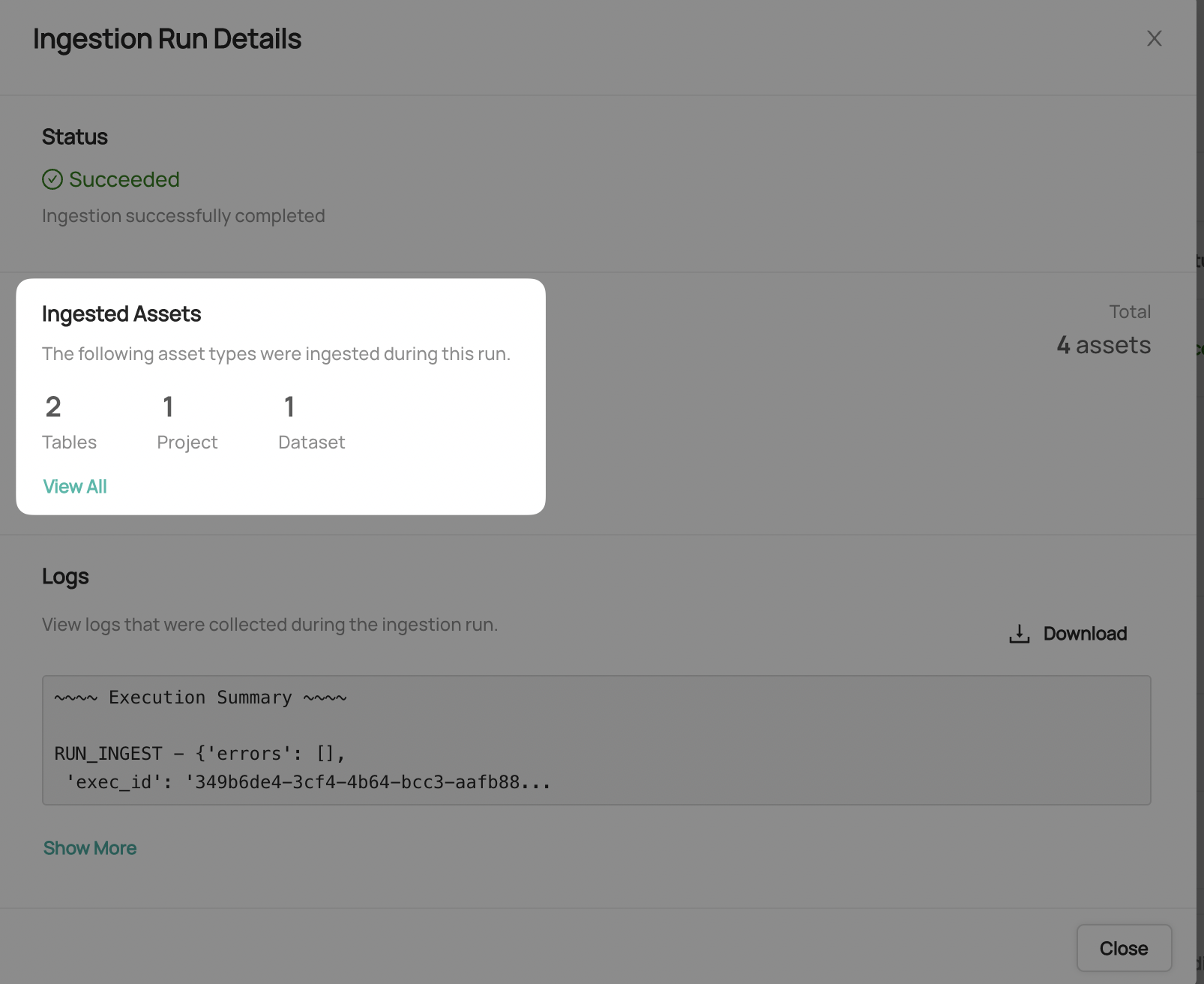

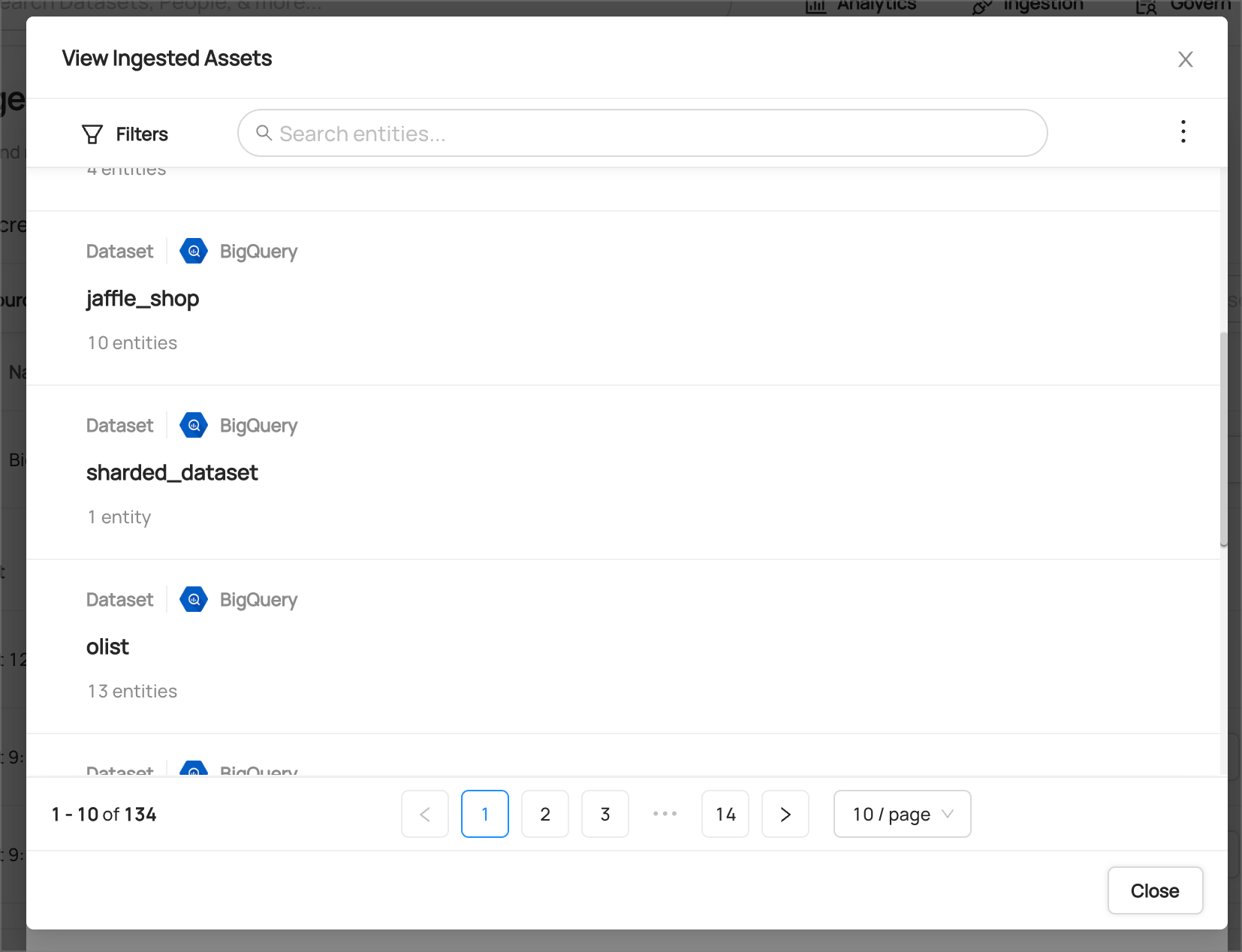

15. From the Ingestion Run Details page, click **View All** to see which entities were ingested

16. Pick an entity from the list to manually validate that it contains the details you expected

**Congratulations!** You've successfully set up BigQuery as an ingestion source for DataHub!