
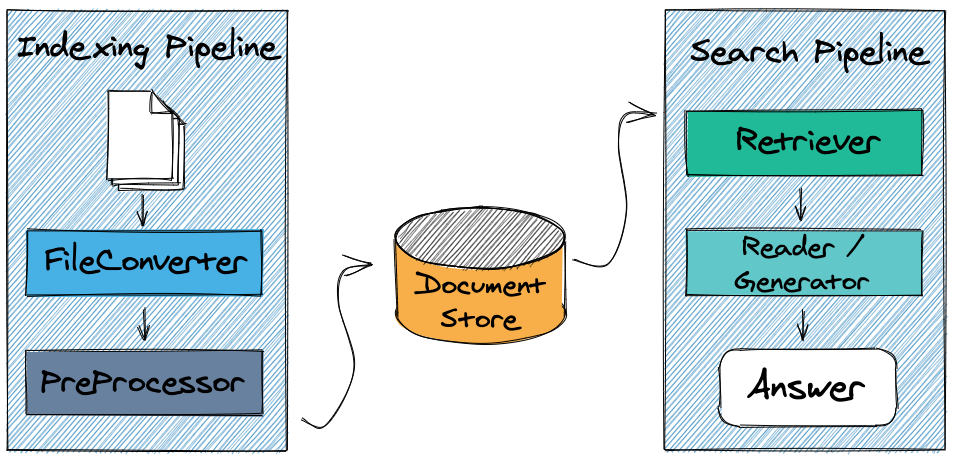

Haystack is an end-to-end framework that enables you to build powerful and production-ready pipelines for different search use cases. Whether you want to perform Question Answering or semantic document search, you can use state-of-the-art NLP models in Haystack to provide unique search experiences and allow your users to query in natural language. Haystack is built in a modular fashion so that you can combine the best technology from other open-source projects like Hugging Face's Transformers, Elasticsearch, or Milvus.

What to build with Haystack

- Ask questions in natural language and find granular answers in your documents.

- Perform semantic search and retrieve documents according to meaning, not keywords.

- Use off-the-shelf models or fine-tune them to your domain.

- Use user feedback to evaluate, benchmark, and continuously improve your live models.

- Leverage existing knowledge bases and better handle the long tail of queries that chatbots receive.

- Automate processes by applying a list of questions to new documents and using the extracted answers.
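Semantic search of this kind usually compares vector representations (embeddings) of the query and the documents instead of matching words. The sketch below shows only the ranking step with cosine similarity. It assumes the embeddings were already produced by some encoder model; the three-dimensional vectors and the `top_k_by_cosine` helper are made-up illustrations, not Haystack code or real model output.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k_by_cosine(
    query_vec: Sequence[float], doc_vecs: Dict[str, Sequence[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Rank document ids by cosine similarity to the query and keep the best k."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Made-up 3-dimensional "embeddings" standing in for real encoder output.
docs = {
    "doc_castle": [0.9, 0.1, 0.0],
    "doc_dragon": [0.1, 0.9, 0.2],
    "doc_recipe": [0.0, 0.1, 0.9],
}
query = [0.8, 0.2, 0.1]
```

With these toy vectors, `top_k_by_cosine(query, docs, k=1)` ranks `doc_castle` first because its vector points in nearly the same direction as the query. A real retriever performs the same kind of ranking over many more dimensions and documents, often backed by a vector index rather than a linear scan.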

Core Features

- Latest models: Use the latest transformer-based models (e.g., BERT, RoBERTa, MiniLM) for extractive QA, generative QA, and document retrieval.

- Modular: Multiple choices to fit your tech stack and use case. Pick your favorite database, file converter, or modeling framework.

- Pipelines: The Node and Pipeline design of Haystack allows for custom routing of queries to only the relevant components.

- Open: 100% compatible with Hugging Face's model hub. Tight interfaces to other frameworks (e.g., Transformers, FARM, sentence-transformers).

- Scalable: Scale to millions of documents via retrievers, production-ready backends like Elasticsearch or FAISS, and a FastAPI REST API.

- End-to-End: All tooling in one place: file conversion, cleaning, splitting, training, evaluation, inference, labeling, etc.

- Developer friendly: Easy to debug, extend, and modify.

- Customizable: Fine-tune models to your domain or implement your custom DocumentStore.

- Continuous Learning: Collect new training data via user feedback in production and improve your models continuously.
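To make the Node and Pipeline idea concrete, here is a minimal, framework-free sketch in plain Python. It is not Haystack's API: `Pipeline`, `route_query`, and the two toy retrievers are hypothetical names chosen for illustration. It shows only the routing idea: a node returns its output together with the name of an outgoing edge, and the pipeline follows only that edge, so each query reaches just the relevant components.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical node signature: take a payload dict, return (payload, name of outgoing edge).
NodeFn = Callable[[dict], Tuple[dict, str]]


class Pipeline:
    """Toy directed pipeline in which each node chooses the edge to follow next."""

    def __init__(self) -> None:
        self.nodes: Dict[str, NodeFn] = {}
        # Maps (source node, edge name) to the target node name.
        self.edges: Dict[Tuple[str, str], str] = {}

    def add_node(self, name: str, fn: NodeFn, inputs: List[str]) -> None:
        self.nodes[name] = fn
        for source_spec in inputs:
            # "Router.output_2" means edge "output_2" of node "Router";
            # a bare node name means its default edge "output_1".
            source, _, edge = source_spec.partition(".")
            self.edges[(source, edge or "output_1")] = name

    def run(self, query: str) -> dict:
        payload: dict = {"query": query, "visited": []}
        current, edge = "Query", "output_1"  # "Query" is the implicit entry point
        while (current, edge) in self.edges:
            current = self.edges[(current, edge)]
            payload["visited"].append(current)
            payload, edge = self.nodes[current](payload)
        return payload


QUESTION_WORDS = {"who", "what", "when", "where", "why", "how", "which"}


def route_query(payload: dict) -> Tuple[dict, str]:
    # Crude heuristic standing in for a trained query classifier:
    # natural-language questions go to output_1, keyword queries to output_2.
    text = payload["query"].strip().lower()
    first_word = text.split(" ", 1)[0]
    is_question = text.endswith("?") or first_word in QUESTION_WORDS
    return payload, ("output_1" if is_question else "output_2")


def dense_retriever(payload: dict) -> Tuple[dict, str]:
    payload["retriever"] = "dense"  # stand-in for an embedding-based retriever
    return payload, "output_1"


def sparse_retriever(payload: dict) -> Tuple[dict, str]:
    payload["retriever"] = "sparse"  # stand-in for a keyword-based retriever
    return payload, "output_1"


pipe = Pipeline()
pipe.add_node("Router", route_query, inputs=["Query"])
pipe.add_node("DenseRetriever", dense_retriever, inputs=["Router.output_1"])
pipe.add_node("SparseRetriever", sparse_retriever, inputs=["Router.output_2"])
```

The router never calls the retrievers itself; it only names an edge. Adding a third branch therefore means adding one node and one `inputs` entry, without touching the existing nodes.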

| Resource | Description |
|---|---|
| 📒 Docs | Overview, Components, Guides, API documentation |
| 💾 Installation | How to install Haystack |
| 🎓 Tutorials | See what Haystack can do with our Notebooks & Scripts |
| 🔰 Quick Demo | Deploy a Haystack application with Docker Compose and a REST API |
| 🖖 Community | Discord, Twitter, Stack Overflow, GitHub Discussions |
| ❤️ Contributing | We welcome all contributions! |
| 📊 Benchmarks | Speed and accuracy of Retrievers, Readers, and DocumentStores |
| 🔭 Roadmap | Public roadmap of Haystack |
| 📰 Blog | Read our articles on Medium |
| ☎️ Jobs | We're hiring! Have a look at our open positions |

💾 Installation

1. Basic Installation

You can install a basic version of Haystack's latest release by using pip.

```bash
pip3 install farm-haystack
```

This command installs everything needed for basic Pipelines that use an Elasticsearch DocumentStore.
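To confirm which release the command installed, you can read the package metadata from Python. This is a minimal sketch using the standard library's `importlib.metadata` (Python 3.8+); `installed_version` is a hypothetical helper, and the distribution name `farm-haystack` is taken from the pip command above.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(distribution: str = "farm-haystack") -> Optional[str]:
    """Return the installed version of `distribution`, or None if it is not installed."""
    try:
        return version(distribution)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version()
    print(f"farm-haystack {found}" if found else "farm-haystack is not installed")
```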

2. Full Installation

If you plan to use more advanced features like Milvus, FAISS, Weaviate, OCR, or Ray, you need to install the full version of Haystack. The following commands install the latest version of Haystack from the main branch.

```bash
git clone https://github.com/deepset-ai/haystack.git
cd haystack
pip install --upgrade pip
pip install -e '.[all]' # or '.[all-gpu]' for the GPU-enabled dependencies
```

If you cannot upgrade pip to version 21.3 or higher, you will need to replace:

- `'.[all]'` with `'.[sql,only-faiss,only-milvus1,weaviate,graphdb,crawler,preprocessing,ocr,onnx,ray,dev]'`

- `'.[all-gpu]'` with `'.[sql,only-faiss-gpu,only-milvus1,weaviate,graphdb,crawler,preprocessing,ocr,onnx-gpu,ray,dev]'`
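If you script the full installation, this choice can be automated. The sketch below is a hypothetical helper, not part of Haystack: it compares a pip version string with 21.3 and returns either the short `.[all]` / `.[all-gpu]` spec or the expanded group lists from the replacement instructions. It assumes the version string starts with numeric `major.minor` components and ignores anything after them.

```python
import re
from typing import Dict, Tuple

# Expanded extras for pip < 21.3, copied from the replacement instructions (keyed by gpu flag).
EXPANDED: Dict[bool, str] = {
    False: ".[sql,only-faiss,only-milvus1,weaviate,graphdb,crawler,preprocessing,ocr,onnx,ray,dev]",
    True: ".[sql,only-faiss-gpu,only-milvus1,weaviate,graphdb,crawler,preprocessing,ocr,onnx-gpu,ray,dev]",
}


def parse_major_minor(pip_version: str) -> Tuple[int, int]:
    """Return (major, minor) from a version string such as '22.2.2' or '21.3'."""
    match = re.match(r"(\d+)(?:\.(\d+))?", pip_version.strip())
    if match is None:
        raise ValueError(f"Unrecognized pip version: {pip_version!r}")
    return int(match.group(1)), int(match.group(2) or 0)


def haystack_extras(pip_version: str, gpu: bool = False) -> str:
    """Pick the extras spec to pass to `pip install -e` for the given pip version."""
    if parse_major_minor(pip_version) >= (21, 3):
        return ".[all-gpu]" if gpu else ".[all]"
    return EXPANDED[gpu]
```

For the pip in your current environment, you could pass `pip.__version__` as the argument and then run `pip install -e` with the returned spec.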

For a complete list of the available dependency groups, have a look at the haystack/pyproject.toml file.

To install the REST API and UI, run the following from the root directory of the Haystack repo:

```bash
pip install rest_api/
pip install ui/
```

3. Installing on Windows

```bash
pip install farm-haystack -f https://download.pytorch.org/whl/torch_stable.html
```

4. Installing on Apple Silicon (M1)

M1 MacBooks require some extra dependencies to install Haystack.

```bash
# some additional dependencies needed on m1 mac
brew install postgresql
brew install cmake
brew install rust

# haystack installation
GRPC_PYTHON_BUILD_SYSTEM_ZLIB=true pip install git+https://github.com/deepset-ai/haystack.git
```
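The platform-specific commands in this section can be collected into one hypothetical helper that returns the pip command to run. It is a sketch, not an official installer: it relies on `platform.system()` and `platform.machine()` from the Python standard library and assumes that Apple Silicon reports `Darwin`/`arm64` and Windows reports `Windows`. The Homebrew dependencies for M1 still have to be installed separately with the `brew` commands above.

```python
import platform
from dataclasses import dataclass, field
from typing import Dict, List, Optional

REPO_URL = "git+https://github.com/deepset-ai/haystack.git"
TORCH_WHEELS = "https://download.pytorch.org/whl/torch_stable.html"


@dataclass
class InstallCommand:
    args: List[str]
    env: Dict[str, str] = field(default_factory=dict)

    def as_shell(self) -> str:
        """Render as a single shell line, with env vars prefixed as in the README."""
        prefix = " ".join(f"{key}={value}" for key, value in self.env.items())
        return f"{prefix} {' '.join(self.args)}".strip()


def install_command(system: Optional[str] = None, machine: Optional[str] = None) -> InstallCommand:
    """Choose the install command; defaults to the current interpreter's platform."""
    system = system if system is not None else platform.system()
    machine = machine if machine is not None else platform.machine()
    if system == "Windows":
        # Windows: point pip at the PyTorch wheel index.
        return InstallCommand(["pip", "install", "farm-haystack", "-f", TORCH_WHEELS])
    if system == "Darwin" and machine == "arm64":
        # Apple Silicon: build gRPC against the system zlib and install from GitHub.
        return InstallCommand(["pip", "install", REPO_URL], {"GRPC_PYTHON_BUILD_SYSTEM_ZLIB": "true"})
    # Everything else: the basic installation.
    return InstallCommand(["pip3", "install", "farm-haystack"])
```

To execute the result rather than print it, one option is `subprocess.run(cmd.args, env={**os.environ, **cmd.env}, check=True)`, which keeps the existing environment and adds only the extra variables.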

5. Learn More

See our installation guide for more options. You can find out more about our PyPI package on our PyPI page.

🎓 Tutorials

Follow our introductory tutorial to set up a question answering system using Python and start performing queries! Explore the rest of our tutorials to learn how to tweak pipelines, train models, and perform evaluation.

🔰 Quick Demo

Hosted

Try out our hosted Explore The World live demo! Ask any question about countries or capital cities and let Haystack return the answers to you.

Local

Start up a Haystack service via Docker Compose. You can then call it directly through the REST API or interact with it using the included Streamlit UI.


1. Update/install Docker and Docker Compose, then launch Docker

```bash
apt-get update && apt-get install docker.io docker-compose
service docker start
```

2. Clone Haystack repository

```bash
git clone https://github.com/deepset-ai/haystack.git
```

3. Pull images & launch demo app

```bash
cd haystack
docker-compose pull
docker-compose up

# Or on a GPU machine: docker-compose -f docker-compose-gpu.yml up
```

You should be able to see the following in your terminal window as part of the log output:

```text
..
ui_1 | You can now view your Streamlit app in your browser.
..
ui_1 | External URL: http://192.168.108.218:8501
..
haystack-api_1 | [2021-01-01 10:21:58 +0000] [17] [INFO] Application startup complete.
```

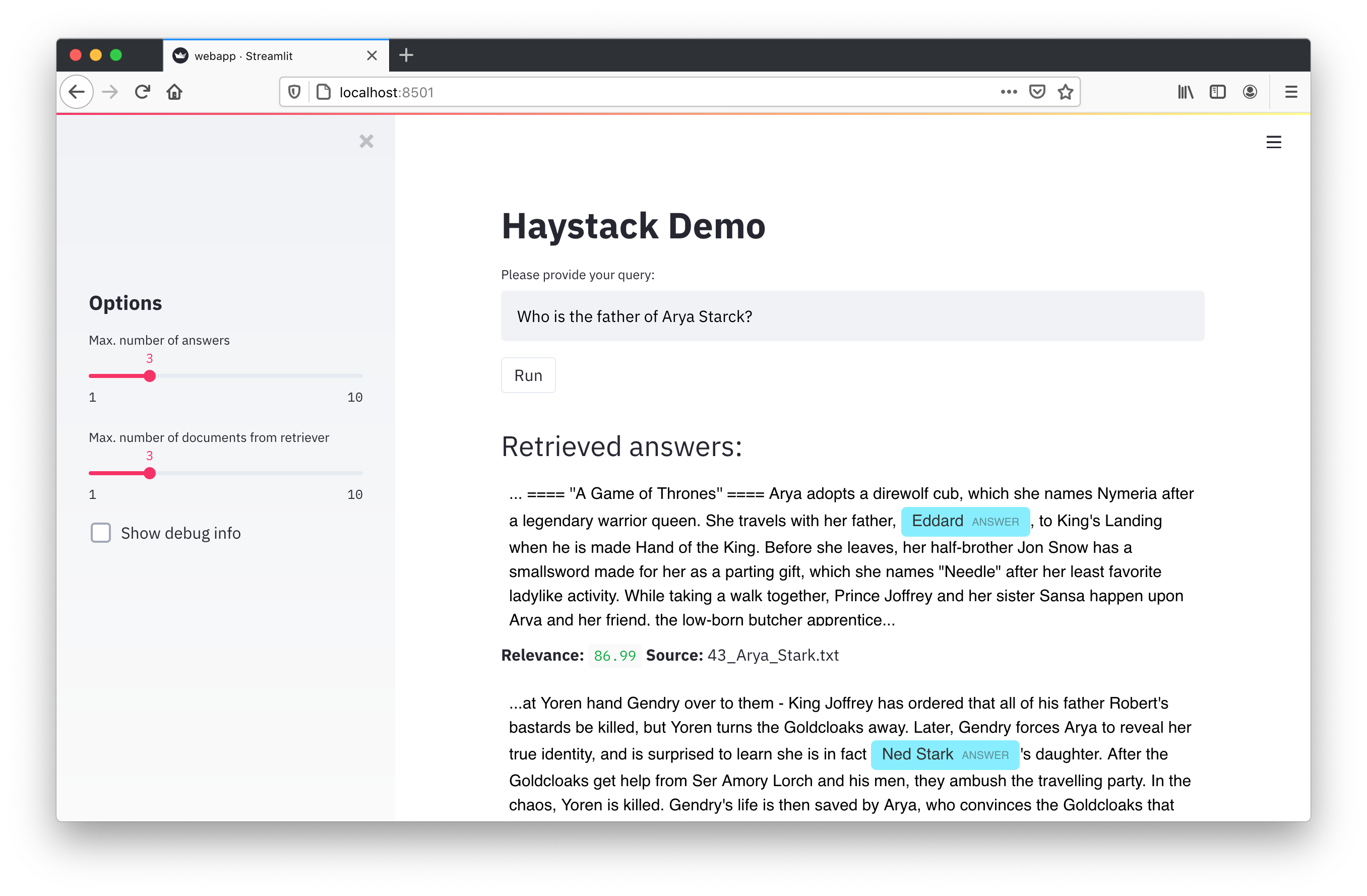

4. Open the Streamlit UI for Haystack by pointing your browser to the "External URL" from above.

You should see the following:

You can then try different queries against a pre-defined set of indexed articles related to Game of Thrones.
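Besides the UI, you can query the same demo through the Haystack API container (port 8000). The sketch below uses only the Python standard library and assumes the API exposes a `POST /query` endpoint that accepts a JSON body with a `query` field (plus optional `params`) and returns JSON; check the REST API documentation for your Haystack version before relying on the exact route and response shape. `query_haystack` is a hypothetical helper.

```python
import json
import urllib.request
from typing import Any, Dict, Optional


def query_haystack(
    question: str,
    base_url: str = "http://localhost:8000",
    params: Optional[Dict[str, Any]] = None,
    timeout: float = 30.0,
) -> Dict[str, Any]:
    """POST a question to the (assumed) /query endpoint and return the decoded JSON."""
    body: Dict[str, Any] = {"query": question}
    if params:
        body["params"] = params  # per-node settings; the valid keys depend on your pipeline
    request = urllib.request.Request(
        url=base_url.rstrip("/") + "/query",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # urlopen raises urllib.error.HTTPError for non-2xx responses.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `query_haystack("Who is the father of Arya Stark?")` sends one illustrative question about the indexed articles; inspect the keys of the returned dictionary before depending on a particular field.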

Note: The following containers are started as part of this demo:

- Haystack API: listens on port 8000

- DocumentStore (Elasticsearch): listens on port 9200

- Streamlit UI: listens on port 8501

Note that the demo publishes the container ports to the outside world. We suggest reviewing your firewall settings according to your system setup and security guidelines.
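To see which of the three demo ports accept connections, for example after startup or while reviewing what the demo exposes, you can use a small check built on Python's `socket` module. This is a hypothetical helper; it only tests whether a TCP connection succeeds, not whether the service behind the port is healthy. The port numbers are the ones listed for the demo containers.

```python
import socket
from typing import Dict

DEMO_PORTS: Dict[str, int] = {
    "Haystack API": 8000,
    "DocumentStore (Elasticsearch)": 9200,
    "Streamlit UI": 8501,
}


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections and timeouts
        return False


def check_demo(host: str = "localhost", ports: Dict[str, int] = DEMO_PORTS) -> Dict[str, bool]:
    """Map each demo service name to whether its port is reachable from this machine."""
    return {name: port_open(host, port) for name, port in ports.items()}
```

Running `check_demo()` on the Docker host after startup has finished is expected to report all three services as reachable. Running it from another machine against the host's external address is a quick way to see which ports are visible from outside.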

🖖 Community

Haystack has a vibrant and active community that we interact with regularly! If you have a feature request or a bug report, feel free to open an issue on GitHub. We check these regularly, and you can expect a quick response. If you'd like to discuss a topic or get more general advice on making Haystack work for your project, you can start a thread in GitHub Discussions or on our Discord channel. We also check Twitter and Stack Overflow.

❤️ Contributing

We welcome contributions from the community, whether it's a quick typo fix or a completely new feature! You don't need to be a Haystack expert to provide meaningful improvements. To learn how to get started, check out our Contributor Guidelines first.

You can also find instructions to run the tests locally there.

Thanks so much to all those who have contributed to our project!

Who uses Haystack

Here's a list of organizations that use Haystack. Don't hesitate to send a PR to let the world know that you use Haystack. Join our growing community!